Executive Summary

Scientists’ use of flawed statistics and editors’ complaisant practices both contribute to the mass production and publication of irreproducible research in a wide range of scientific disciplines. Far too many researchers use unsound scientific practices. This crisis poses serious questions for policymakers. How many federal regulations reflect irreproducible, flawed, and unsound research? How many grant dollars have funded irreproducible research? How widespread are research integrity violations? Most importantly, how many government regulations based on irreproducible science harm the common good?

The National Association of Scholars’ (NAS) project Shifting Sands: Unsound Science and Unsafe Regulation examines how irreproducible science negatively affects select areas of government policy and regulation governed by different federal agencies. We also seek to demonstrate procedures which can detect irreproducible research. This second policy paper in the Shifting Sands project focuses on irreproducible research in the field of nutritional epidemiology, which informs much of the U.S. Food and Drug Administration’s (FDA) nutrition policy.

The scientific (academic) world’s professional incentives reward exciting research with new positive (statistically significant) claims—but not reproducible research. This encourages researchers, wittingly or negligently, to use different flawed statistical practices to produce positive, but likely false, claims. Our report applies Multiple Testing and Multiple Modeling (MTMM) to assess whether a body of research indeed has been affected by such flawed practices.

MTMM controls for experiment-wise error—the probability that at least one individual claim will register a false positive when multiple statistical tests are conducted. Conducting large numbers of statistical tests in a study produces many false positives by chance alone. We counted the number of statistical tests and used a novel statistical technique—p-value plotting—as a severe test to diagnose specific claims made about relationships between i) consumption of red and processed meats and health outcomes such as mortality, cancers, and diabetes; and ii) soy protein and lipid (cholesterol) markers as surrogates for cardiovascular disease risk reduction.

We found persuasive circumstantial evidence that the scientific literature (in general) and statistical practices (specifically) affecting the nutritional epidemiology of red and processed meats and negative health outcomes, and soy protein and cardiovascular disease risk reduction, are untrustworthy. All of these flawed statistical practices center around the use of the semi-quantitative Food Frequency Questionnaire (FFQ) – a self-administered dietary assessment instrument. FDA nutritional policies on red and processed meats and soy protein might have been very different had they applied more rigorous scientific reproducibility requirements to research that they used to justify their policies.

We offer 12 recommendations that are intended to bring FDA methodologies up to the level of best available science, as per the mandate of The Information Quality Act (sometimes called The Data Quality Act):

- Adopt resampling methods (Multiple Testing and Multiple Modeling) as part of the standard battery of tests applied to nutritional epidemiology research.

- Take greater account of difficulties associated with subgroup analysis in nutritional research – which increases the possibility of producing false positive relationships.

- Require all studies that do not correct for MTMM to be labeled “exploratory.”

- Rely exclusively on meta-analyses that use tests to take account of endemic HARKing, p-hacking, and other questionable research procedures.

- For all research that informs FDA approval of nutritional health claims:

- require the FDA in its assessments of scientific studies to take account of endemic HARKing, p-hacking and other questionable research procedures, e.g. require p-value plot analysis for all FFQ meta-analysis studies used to inform regulations;

- require preregistration and registered reports for observational studies as well as for randomized clinical trials;

- require public access to all relevant data sets;

- place greater weight on reproducible research;

- consider more far-reaching reforms, such as funding data set building and data set analysis separately; and

- take account of the irreproducibility crisis in the use of the “weight of evidence” standard to assess both base studies and meta-analyses.

- Do not fund or rely on research of other organizations such as the World Health Organization (WHO) until these organizations adopt sound statistical practices.

- Establish systematic procedures to inhibit research integrity violations.

We have subjected the science underpinning nutritional health claims in relation to red and processed meat and soy protein to serious scrutiny. We believe the FDA should take account of our methods as it considers food health claims. Yet we care even more about reforming the procedures the FDA uses in general to assess nutritional science.

The government should use the very best science—whatever the regulatory consequences. Scientists should use the very best research procedures—whatever result they find. Those principles are the twin keynotes of this report. The very best science and research procedures involve building evidence on the solid rock of transparent, reproducible, and actual reproduced scientific inquiry, not on shifting sands.

Preface and Acknowledgments

Peter W. Wood

President,

National Association of Scholars

An irreproducibility crisis afflicts a wide range of scientific and social-scientific disciplines, from epidemiology to social psychology. Improper research techniques, a lack of accountability, disciplinary and political groupthink, and a scientific culture biased toward producing positive results contribute to this plight. Other factors include inadequate or compromised peer review, secrecy, conflicts of interest, ideological commitments, and outright dishonesty.

Science has always had a layer of untrustworthy results published in respectable places and “experts” who were eventually shown to have been sloppy, mistaken, or untruthful in their reported findings. Irreproducibility itself is nothing new. Science advances, in part, by learning how to discard false hypotheses, which sometimes means dismissing reported data that does not stand the test of independent reproduction.

But the irreproducibility crisis is something new. The magnitude of false (or simply irreproducible) results reported as authoritative in journals of record appears to have dramatically increased. “Appears” is a word of caution, since we do not know with any precision how much unreliable reporting occurred in the sciences in previous eras. Today, given the vast scale of modern science, even if the percentage of unreliable reports has remained fairly constant over the decades, the sheer number of irreproducible studies has grown vastly. Moreover, the contemporary practice of science, which depends on a regular flow of large governmental expenditures, means that the public is, in effect, buying a product rife with defects. On top of this, the regulatory state frequently builds both its cases for regulation and the substance of its regulations on the basis of unproven, unreliable, and sometimes false scientific claims.

In short, many supposedly scientific results cannot be reproduced reliably in subsequent investigations and offer no trustworthy insight into the way the world works. A majority of modern research findings in many disciplines may well be wrong.

That was how the National Association of Scholars summarized matters in our report The Irreproducibility Crisis of Modern Science: Causes, Consequences, and the Road to Reform (2018).1 Since then we have continued our work to press for reproducibility reform by several different avenues. In February 2020, we co-sponsored with the Independent Institute an interdisciplinary conference on Fixing Science: Practical Solutions for the Irreproducibility Crisis, to publicize the irreproducibility crisis, exchange information across disciplinary lines, and canvass (as the title of the conference suggests) practical solutions for the irreproducibility crisis.2 We have also provided a series of public comments in support of the Environmental Protection Agency’s rule Strengthening Transparency in Pivotal Science Underlying Significant Regulatory Actions and Influential Scientific Information.3 We have publicized different aspects of the irreproducibility crisis by way of podcasts and short articles.4

And we have begun work on our Shifting Sands project. In May 2021 we published Shifting Sands: Report I Keeping Count of Government Science: P-Value Plotting, P-Hacking, and PM2.5 Regulation.5 This report, Flimsy Food Findings: Food Frequency Questionnaires, False Positives, and Fallacious Procedures in Nutritional Epidemiology, is the second of four that will appear as part of Shifting Sands, each of which will address the role of the irreproducibility crisis in different areas of federal regulatory policy. In these reports we address a central question that arose after we published The Irreproducibility Crisis.

You’ve shown that a great deal of science hasn’t been reproduced properly and may well be irreproducible. How much government regulation is actually built on irreproducible science? What has been the actual effect on government policy of irreproducible science? How much money has been wasted to comply with regulations that were founded on science that turned out to be junk?

This is the $64 trillion dollar question. It is not easy to answer. Because the irreproducibility crisis has so many components, each of which could affect the research that is used to inform regulatory policy, we are faced with a maze of possible sources of misdirection.

The authors of Shifting Sands include these just to begin with:

- malleable research plans;

- legally inaccessible data sets;

- opaque methodology and algorithms;

- undocumented data cleansing;

- inadequate or non-existent data archiving;

- flawed statistical methods, including p-hacking;

- publication bias that hides negative results; and

- political or disciplinary groupthink.

Each of these could have far-reaching effects on government regulatory policy—and for each of these, the critique, if well-argued, would most likely prove that a given piece of research had not been reproduced properly—not that it actually had failed to reproduce. (Studies can be made to “reproduce,” even if they don’t really.) To answer the question thoroughly, one would need to reproduce, multiple times, to modern reproducibility standards, every piece of research that informs governmental regulatory policy.

This should be done. But it is not within our means to do so.

What the authors of Shifting Sands did instead was to reframe the question more narrowly. Governmental regulation is meant to clear a high barrier of proof. Regulations should be based on a very large body of scientific research, the combined evidence of which provides sufficient certainty to justify reducing Americans’ liberty with a government regulation. What is at issue is not any particular piece of scientific research, but rather whether the entire body of research provides so great a degree of certainty as to justify regulation. If the government issues a regulation based on a body of research that has been affected by the irreproducibility crisis so as to create the false impression of collective certainty (or extremely high probability), then, yes, the irreproducibility crisis has affected government policy by providing a spurious level of certainty to a body of research that justifies a government regulation.

The justifiers of regulations based on flimsy or inadequate research often cite a version of what is known as the “precautionary principle.” This means that, rather than basing a regulation on science that has withstood rigorous tests of reproducibility, they base the regulation on the possibility that a scientific claim is accurate. They do this with the logic that it is too dangerous to wait for the actual validation of a hypothesis, and that a lower standard of reliability is necessary when dealing with matters that might involve severely adverse outcomes if no action is taken.

This report does not deal with the precautionary principle, since it summons a conclusiveness that lies beyond the realm of actual science. We note, however, that invocation of the precautionary principle is not only non-scientific, but is also an inducement to accepting meretricious scientific practice and even fraud.

The authors of Shifting Sands addressed the more narrowly framed question posed above. They applied a straightforward statistical test, Multiple Testing and Multiple Modeling (MTMM), and applied it to a body of meta-analyses used to justify government research. MTMM provides a simple way to assess whether any body of research has been affected by publication bias, p-hacking, and/or HARKing (Hypothesizing After the Results were Known)—central components of the irreproducibility crisis. In this second report, the authors applied this MTMM method to portions of the research underlying the Food and Drug Agency’s (FDA) labeling requirements for health claims that characterize the relationship between a substance (e.g., a food or food component) and a health benefit, a disease (e.g., cancer or cardiovascular disease), or a health condition (e.g., high blood pressure). The scientific literature (in general) and statistical practices (specifically) of nutritional epidemiology of red and processed meats and negative health outcomes and soy protein and cardiovascular disease risk reduction are untrustworthy. All of these flawed statistical practices center around the use of the semi-quantitative Food Frequency Questionnaire (FFQ) – a self-administered dietary assessment instrument. U.S. FDA nutrition policies on red and processed meats and soy protein might have been very different had they applied more rigorous scientific reproducibility requirements to research that they used to justify their policies.

That’s the headline conclusion. But it leads to further questions. Why didn’t the FDA use this statistical technique long ago? How exactly does regulatory policy assess scientific research? What precise policy reforms does this research conclusion therefore suggest?

The broadest answer to why the FDA hasn’t adopted this statistical technique for assessing health claims is that the entire discipline of nutritional epidemiology depends upon a series of assumptions and procedures, many of which give pause to professionals in different fields—and which should give pause to the layman as well.

- Nutritional epidemiology relies predominantly on observational data and associations, which researchers generally judge to be less reliable than experimental data and interventions. FDA Guidance Documents acknowledge the shortcomings of food consumption surveys, including FFQs, and usually note that observational studies are less reliable than intervention studies—but still allow FFQs to inform FDA regulation.

- Nutritional epidemiology particularly relies on Food Frequency Questionnaires (FFQs), which have become the most common method by which scientists measure dietary intake in large observational study populations. Scholars have noted for decades that an FFQ is an unreliable source of data, since it relies on subjects’ ability both to remember accurately what they have consumed and to report with equal accuracy. FFQ association studies also frequently gloss over the complexities of digestion. Individuals consume thousands of chemicals in millions of possible daily combinations and it therefore is challenging, if not impossible, to disentangle the association of a single dietary (food) component with a single disease.

- FFQs possess data for dozens or hundreds of substances and health outcomes, and therefore are extremely susceptible to multiple testing and the manufacture of false positive results. FFQs, unless corrected for Multiple Testing and Multiple Modeling (MTMM), are virtually guaranteed to produce a spurious correlation between some food and some disease. Researchers can use multiple testing and multiple modelling until they find an exciting result to submit to the editors and referees of a professional journal.

- At the most fundamental statistical level, nutritional epidemiology has not taken into account the recent challenges posed to the very concept of statistical significance, or the procedures of probability of causation.6 The Shifting Sands authors confined their critique to much narrower grounds, but readers should be aware that the statistical foundations underlying nutritional epidemiology are by no means secure.

- Most relevantly for Shifting Sands, nutritional epidemiology as a discipline has rejected the need to adjust results for multiple comparisons. The entire discipline of nutritional epidemiology uses procedures that are guaranteed to produce false positives and rejects using well-established corrective procedures. MTMM tests have been available for decades. Genetic epidemiologists adopted them long ago. Nutritional epidemiology rejects MTMM tests as a discipline—and because it does, the FDA can say it is simply following professional judgment.

These are serious flaws—and I don’t mean by highlighting them to suggest that nutritional epidemiologists haven’t done serious and successful work to keep themselves on the statistical straight-and-narrow. The discipline does a great deal correctly, for which it should be commended. But the discipline isn’t perfect. It possesses blind-spots that amount to disciplinary groupthink. Americans must not simply defer to nutritional epidemiology’s “professional consensus.”

Yet that is what the FDA does—and, indeed, the federal government as a whole. The intention here was sensible—that government should seek to base its views on disinterested experts as the best way to provide authoritative information on which it should act. Yet there are several deep-rooted flaws in this system, which have become increasingly apparent in the decades since the government first developed an extensive scientific-regulatory complex.

- Government regulations do not account for disciplinary group-think.

- Government regulations do not account for the possibility that a group of scientists and governmental regulators, working unconsciously or consciously, might act to skew the consideration of which scientific studies should be used to inform regulation.

- Government regulations define “best available science” by the “weight of evidence” standard. This is an arbitrary standard, subject to conscious or unconscious manipulation by government regulators. It facilitates the effects of groupthink and the skewed consideration of evidence.

- Governmental regulations have failed to address fully the challenge of the irreproducibility crisis, which requires a much higher standard of transparency and rigor than was previously considered “best acceptable science.”

- The entire framework of seeking out disinterested expertise fails to take into account the inevitable effects of using scientific research to justify regulations that affect policy, have real-world effect, and become the subject of political debate and action. The political consequences have unavoidably had the effect of tempting political activists to skew both scientific research and the governmental means of weighing scientific research. Put another way, any formal system of assessment inevitably invites attempts to game it.

- To all this we may add the distorting effects of massive government funding of scientific research. The United States federal government is the largest single funder of scientific research in the world; its expectations affect not only the research it directly funds but also all research done in hopes of receiving federal funding. Government experts therefore have it in their power to create a skewed body of research, which they can then use to justify regulation.

Shifting Sands casts a critical eye on the procedures of the field of nutritional epidemiology, but it also casts a critical eye on governmental regulatory procedure, which has provided no check to the flaws of the nutritional epidemiology discipline, and which is susceptible to great abuse. Shifting Sands is doing work that nutritional epidemiologists and governmental regulators should have done decades ago. Their failure to do so is in itself substantial evidence of the need for widespread reform, both among nutritional epidemiologists and among governmental regulators.

Before I go further, I should make clear the stakes of the “skew” in science that feeds regulation.

A vast amount of government regulation is based on scientific research affected by the irreproducibility crisis. This research includes such salient topics as racial disparity, implicit bias, climate change, and pollution regulation—and every aspect of science and social science that uses statistics. Climate change is the most fiercely debated subject, but the EPA’s pollution regulations are a close second—not least because American businesses must pay extraordinary amounts of money to comply with them. A 2020 report prepared for the Natural Resource Defense Council estimates that American air pollution regulations cost $120 billion per year—and we may take the estimate provided to an environmental advocacy group to be the lowest plausible number.7 The economic consequences carry with them correspondingly weighty political corollaries: the EPA’s pollution regulations constitute a large proportion of the total power available to the federal government. The economic and political consequences of the EPA’s regulations are why we devoted our first Shifting Sands report to PM2.5 regulation.

The consequences of FDA regulation are at least as consequential, for they affect the food and drink consumed by every American. So therefore are the consequences of FDA mis-regulation. Inaccurate labels can mislead consumers, not least by encouraging them to adopt fad diets that present health risks. Furthermore, every company in the food sector, which involved $6.22 trillion dollars in annual sales in 2020, depends for its livelihood on accurate labeling of food products. Mislabeling health benefits can give a company a larger market share than it deserves.

To take a more concrete example, the Code of Federal Regulations declares that “The scientific evidence establishes that diets high in saturated fat and cholesterol are associated with increased levels of blood total- and LDL-cholesterol and, thus, with increased risk of coronary heart disease,” and allows companies to make corollary health claims about reducing the risk of heart disease.8 The FDA duly notes on its Interactive Nutrition Facts Label that “Diets higher in saturated fat are associated with an increased risk of developing cardiovascular disease.”9 Yet recent research concludes that “Numerous meta-analyses and systematic reviews of both the historical and current literature reveals that the saturated-fat diet-heart hypothesis was not, and still is not, supported by the evidence. There appears to be no consistent benefit to all-cause or CVD mortality from the reduction of dietary saturated fat.”10 The law rather than the FDA’s approach to statistics was at issue here, but the financial consequences have been enormous: consumers have redirected billions of dollars toward producers of foods with less saturated fats, for a diet that has no discernible health benefit.

Shifting Sands strengthens the case for policy reforms that would strengthen the FDA’s procedures to assess nutritional epidemiology research results. The authors and I believe that this is the logical corollary of the current state of statistically informed science. I trust that we would favor the rigorous use of MTMM tests no matter what policy result they indicated, and I will endeavor to make good on that principle if MTMM tests emerge that argue against my preferred policies. Those are the policy stakes of Shifting Sands. I hope that its scientific claims will be judged without reference to its likely policy consequences. The possible policy consequences have not pre-determined the report’s findings. We claim those findings are true, regardless of the consequences, and we invite others to reproduce our work.

This report puts into layman’s language the results of several technical studies by members of the Shifting Studies team of researchers, S. Stanley Young and Warren Kindzierski. Some of these studies have been accepted by peer-reviewed journals; others have been submitted and are under review. As part of NAS’s own institutional commitment to reproducibility, Young and Kindzierski pre-registered the methods of their technical studies. And, of course, NAS’s support for these researchers explicitly guaranteed their scholarly autonomy and the expectation that these scholars would publish freely, according to the demands of data, scientific rigor, and conscience.

This report is the second of four scheduled reports, each critiquing different aspects of the scientific foundations of federal regulatory policy. We intend to publish these reports separately and then as one long report, which will eliminate some necessary duplication in the material of each individual report. The NAS intends these four reports collectively to provide a substantive, wide-ranging answer to the question What has been the actual effect on government policy of irreproducible science?

I am deeply grateful for the support of many individuals who made Shifting Sands possible. The Arthur N. Rupe Foundation provided Shifting Sands’ funding—and, within the Rupe Foundation, Mark Henrie’s support and goodwill got this project off the ground and kept it flying. Three readers invested considerable time and thought to improve this report with their comments: David C. Bryant, Douglas Hawkins, and Richard Williams. David Randall, NAS’s Director of Research, provided staff coordination of Shifting Sands—and, of course, Stanley Young has served as Director of the Shifting Sands Project. Reports such as these rely on a multitude of individual, extraordinary talents.

Introduction

How Food Regulations Get Made

The Food and Drug Administration (FDA) now requires that foods (except for meat from livestock, poultry and some egg products, which are regulated by the U.S. Department of Agriculture) be safe, wholesome, sanitary, and properly labeled.11 The FDA’s labeling requirements include mention of health claims that characterize the relationship between a substance (e.g., a food or food component) and a health benefit, a disease (e.g., cancer or cardiovascular disease), or a health condition (e.g., high blood pressure).

The discipline of nutritional epidemiology plays a vital role in the FDA’s labeling requirements. The FDA uses nutritional epidemiology to provide compelling scientific information to support its nutrition recommendations and more coercive regulations.12 Nutritional epidemiology applies epidemiological methods to the study at the population level of the effect of diet on health and disease in humans. Nutritional epidemiologists base most of their inferences about the role of diet (i.e., foods and nutrients) in causing or preventing chronic diseases on observational studies.

From the 1980s onward, the increase of computing capabilities facilitated the application of essentially retrospective self-administered dietary assessment instruments the semi-quantitative food frequency questionnaire (FFQ).13 FFQs, which are easy to use, place low burdens on participants, and allegedly capture long-term dietary intake, have become the most common method by which scientists measure dietary intake in large observational study populations.14

A longstanding criticism of using nutritional epidemiology to determine causality is that it relies predominantly on observational data and associations, which are generally judged to be less reliable than experimental data and interventions.15 FDA Guidance Documents acknowledge the shortcomings of food consumption surveys, including FFQs, and usually note that observational studies are less reliable than intervention studies—but still allow FFQs to inform FDA regulation.16

The FDA does not take sufficient account of nutritional epidemiology’s frail foundations.

Nutritional Epidemiology’s Frail Foundations

Food Frequency Questionnaires

You are what you eat, the proverb goes. Certainly, some illnesses can proceed from diet, such as anemia.17 Most Americans have health problems at some point in their lives and they conclude frequently that poor diet has caused their poor health. They think so more than ever because of the invention and the spread of the Food Frequency Questionnaire (FFQ).

Walter Willett is Scientist Zero of the FFQ. He devised and publicized the FFQ in his “Reproducibility and validity of a semi-quantitative food frequency questionnaire” (1985); as of 2021 that article had been cited more than 4,000 times.18 A FFQ distributes a structured food list and a frequency response section to study participants, who indicate from memory their usual frequency of intake of each food and beverage over a set period of time, usually a day or a week.19 After some lapse of time, typically years, the subjects self-report on their health conditions. Willett, and all his followers, thus have data by which to propose an association between a particular food or diet and a particular health condition.

Scientists conduct statistical comparisons to establish the association between FFQ dietary data items and health outcomes to produce multiple research papers. They then conduct further statistical analyses using meta-analyses of the individual research papers, which combine data from multiple published studies that address a common research question, such as the association between a particular food and a particular disease.20 For example, one meta-analysis combines data of all published studies that examine the claim that high salt intake is associated with gastric cancer.21

Scholars have noted for decades that an FFQ is an unreliable source of data, since it relies on subjects’ ability both to remember accurately what they have consumed and to report with equal accuracy.22

Scholars have noted for decades that an FFQ is an unreliable source of data, since it relies on subjects’ ability both to remember accurately what they have consumed and to report with equal accuracy.

FFQ association studies also frequently gloss over the complexities of digestion. Individuals consume thousands of chemicals in millions of possible daily combinations and it therefore is challenging, if not impossible, to disentangle the association of a single dietary (food) component with a single disease.23 To label a single food component a “cause” of disease in any case glosses over the fact that true biochemical interactions frequently involve actual causative agents (i.e., chemicals or microbes in food), the intermediary products of digestion, and human disease.24 A proper analysis needs to disentangle ultimate causes and proximate causative agents—a task for which FFQs are ill equipped.

Researchers are aware of the unreliability of FFQs, and are working diligently to find alternative research tools,25 yet many scientists continue to engage in a cottage industry of FFQ research. FFQ studies, after all, are relatively inexpensive to conduct and relatively sure to find a positive result. (We will explore below why FFQs find positive results so frequently.) Therefore, they are attractive to the great majority of researchers, who must both work on a budget and secure a steady stream of academic publications. FFQs' known flaws have not prevented them from flourishing wildly. (See Figure 1.)

Figure 1: FFQ Citations Since 198526

|

Years |

FFQ Citations |

|

1981-85 |

777 |

|

1986-90 |

980 |

|

1991-95 |

1,650 |

|

1996-2000 |

2,400 |

|

2001-05 |

4,450 |

|

2006-10 |

8,650 |

|

2011-15 |

14,900 |

|

2016-20 |

15,400 |

|

2021 |

4,640 |

|

Total |

53,847 |

Since 2001, scholars have published about 2,300 FFQ studies annually.27 A 2021 Google Scholar search using “FFQ” and “meta-analysis” returned 22,800 citations.28

And most cohort studies use FFQs.

Cohort Studies

Scientists have conducted cohort studies with increasing frequency since the 1950s. Cohort studies start with hundreds to thousands of people and follow them over an extended period of time—often many years.29 Researchers measure study participants’ initial attributes, including by means of an FFQ, and then collect health results over a succeeding period of time. Cohort studies often require substantial start-up costs, but it costs relatively little to examine more attributes of an already assembled group.

A cohort study can take on a life of its own. The Life Project in England, which examined children born within a small period of time, has become a generations-long cohort study about human development that has provided data for innumerable professional articles in a range of social science and health disciplines. Researchers have published 2,500 papers on the 1958 cohort alone.30

Cohort studies make it easy for scientists to publish multiple papers using the same data set. But to engage in multiple testing creates a serious and scarcely acknowledged statistical problem, which affects the entire field of cohort studies.

Cohort studies make it easy for scientists to publish multiple papers using the same data set.

Multiple Testing and the Manufacture of False Positive Results

Scientists who conduct cohort studies generally use a simple statistical analysis strategy on the data they collect—which causes or risk factors are associated with which outcomes (i.e., health conditions). This procedure allows researchers to analyze an extraordinarily large number of possible relationships.

If a data set contains “C” causes and “O” outcomes, then scientists can investigate C x O possible relationships. They can also examine how yes/no adjustment factors “A”, such as parental age, income, or education, can modify each of the C x O relationships.

We can approximate the number of possible questions that one can examine in a cohort study with the following formula:

(C) x (O) x (2 raised to the power of A) = CO2^A

The number of possible questions at issue can increase extraordinarily rapidly in a cohort study. Consider this hypothetical cohort study of the relationship between a food substance and a disease or health-related condition:

● The number of possible questions in a cohort study with survey data for 61 foods from an FFQ,31 10 possible outcomes (diseases) of interest, and 5 yes/no adjustment factors (e.g., age, sex, marital status, ethnicity, education level) can be approximated as (61) x (10) x (2^5) = 19,520.

● The number of possible questions in a cohort study with survey data for 61 foods from an FFQ, 20 possible outcomes (diseases) of interest, and 10 yes/no adjustment factors (e.g., age, sex, marital status, ethnicity, education level, body mass index, smoking status, total energy intake, physical activity level, sleep duration) can be approximated as (61) x (20) x (2^10) = 1,249,280.32

Researchers doing cohort studies who examine these C x O x 2^A possible models can correct their work to take account of Multiple Testing and Multiple Modeling (MTMM).33 (For a longer explanation of Multiple Testing Multiple Modeling, see Appendix 1.) If they do not, and mostly they don’t, they can produce large numbers of false positive results—and quickly, given the spread through the scientific community of cheap, fast computer hardware and statistical software.34

Given that the conventional threshold for statistical significance (and hence of professional publication) in most disciplines is a p-value of less than 0.05, a false positive result should occur 5% of the time by chance alone.35 (For a longer discussion of statistical significance, see Appendix 2.) In our first hypothetical example, we should expect 976 false positive results (5% of 19,520). In our second hypothetical example, we should expect 62,464 false results (5% of 1,249,280).

Scientists generally are at least theoretically aware of this danger, albeit nutritional epidemiologists have done far too little to correct their professional practices.36 Schoenfeld and Ioannidis put it pungently:

In this survey of published literature regarding the relation between food ingredients and malignancy, we found that 80% of ingredients from randomly selected recipes had been studied in relation to malignancy and the large majority of these studies were interpreted by their authors as offering evidence for increased or decreased risk of cancer. However, the vast majority of these claims were based on weak statistical evidence. Many statistically insignificant “negative” and weak results were relegated to the full text rather than to the study abstract. Individual studies reported larger effect sizes than did the meta-analyses. There was no standardized, consistent selection of exposure contrasts for the reported risks. A minority of associations had more than weak support in meta-analyses, and summary effects in meta-analyses were consistent with a null average and relatively limited variance.37

Scientists also have warned their peers about the particular dangers of multiple testing of cohort studies. Bolland and Grey commented in 2014 on research pertaining to the Nurses’ Health Study (NHS) that:

Investigators have published more than 1000 articles on the NHS, at a rate of more than 50 papers/year for the last 10 years. … To date, more than 2000 hypotheses have been tested in these papers, and it seems likely that the number of statistical tests carried out would be in the tens of thousands. … Given the volume of hypotheses assessed and statistical tests undertaken, it seems likely that many results reported in NHS publications are false positives, and that the use of a threshold of P=0.05 for statistical significance is inappropriate without consideration of multiple statistical testing.

We suggest that authors of observational studies should report how many hypotheses have been tested previously in their cohort study, together with an estimate of the total number of statistical tests undertaken.38

Methods to adjust for MTMM have existed for decades. The Bonferroni method simply adjusts the p-value by multiplying the p-value by the number of tests. Westfall and Young provide a simulation-based method for correcting an analysis for MTMM.39 In practice, however, far too much "research" simply ignores the danger.

Researchers can use multiple testing and multiple modeling until they find an exciting result to submit to the editors and referees of a professional journal—in other words, they can p-hack.40 Editors and referees have an incentive to trust, with too much complacence, that researchers have done due statistical diligence, so they can publish exciting papers and have their journal recognized in the mass media.41 Some editors are part of the problem.42

FFQs and cohort studies, in other words, have been afflicted by the irreproducibility crisis of modern science.

The Irreproducibility Crisis of Modern Science

Nutritional epidemiology’s combination of sloppy statistics and complaisant editorial practices is a component of the larger irreproducibility crisis, which has led to the mass production and publication of irreproducible research.43 Many improper scientific practices contribute to the irreproducibility crisis, including poor applied statistical methodology, bias in data reporting, publication bias (the skew toward publishing exciting, positive results), fitting the hypotheses to the data, and endemic groupthink.44 Far too many scientists use improper scientific practices, including an unfortunate portion who commit deliberate data falsification.45 The entire incentive structure of the modern complex of scientific research and regulation now promotes the mass production of irreproducible research.46 (For a longer discussion of the irreproducibility crisis, see Appendix 3.)

Many scientists themselves have lost overall confidence in the body of claims made in scientific literature.47 The ultimately arbitrary decision to declare p<0.05 as the standard of “statistical significance” has contributed extraordinarily to this crisis. Most cogently, Boos and Stefanski have shown that an initial result likely will not replicate at p<0.05 unless it possesses a p-value below 0.01, or even 0.001.48 Numerous other critiques about the p<0.05 problem have been published.49 Many scientists now advocate changing the definition of statistical significance to p<0.005.50 But even here, these authors assume only one statistical test and near perfect study methods.

Researchers themselves have become increasingly skeptical of the reliability of claims made in contemporary published research.51 A 2016 survey found that 90% of surveyed researchers believed that research was subject to either a major (52%) or a minor (38%) crisis in reliability.52 Begley reported in Nature that 47 of 53 research results in experimental biology could not be replicated.53 A coalescing consensus of scientific professionals realizes that a large portion of “statistically significant” claims in scientific publications, perhaps even a majority in some disciplines, are false—and certainly should not be trusted until they are reproduced.54

Yet federal regulatory agencies are too credulous—including the FDA.

Reforming Government Regulatory Policy: The Shifting Sands Project

The National Association of Scholars’ (NAS) project Shifting Sands: Unsound Science and Unsafe Regulationexamines how irreproducible science negatively affects select areas of government policy and regulation governed by different federal agencies.55 We also aim to demonstrate procedures which can detect irreproducible research. We believe government agencies should incorporate these procedures as they determine what constitutes “best available science”—the standard that judges which research should inform government regulation.56

Shifting Sands aims to demonstrate that the irreproducibility crisis has affected so broad a range of government regulation and policy that government agencies should now engage in thoroughgoing modernization of the procedures by which they judge “best available science.” Agency regulations should address all aspects of irreproducible research, including the inability to reproduce:

● the research processes of investigations;

● the results of investigations; and

● the interpretation of results.57

In Shifting Sands we use a single analysis strategy for all of our policy papers⸺p-value plotting (a visual form of Multiple Testing and Multiple Modeling analysis)⸺as a way to demonstrate weaknesses in different agencies’ use of meta-analyses. Our common approach supports a comparative analysis across different subject areas, while allowing for a focused examination of one dimension of the impact of the irreproducibility crisis on government agencies’ policies and regulations.

Our first Shifting Sands policy paper, Keeping Count of Government Science: P-Value Plotting, P-Hacking, and PM2.5Regulation, focused on irreproducible research in the field of environmental epidemiology that informs the Environmental Protection Agency’s (EPA) policies and regulations.58

This second policy paper in the Shifting Sands project focuses on irreproducible research in the field of nutritional epidemiology, which informs much of the U.S. Food and Drug Administration’s (FDA) nutrition policy. Our report builds upon the existing professional critique of nutritional epidemiology, which has concluded that the discipline does not impose rigorous controls upon its analytical procedures.59 A nutrition researcher can modify an analysis strategy after he has begun to examine data, examine multiple outcomes, use multiple variables as predictors, and further adjust an analysis by deciding whether to include multiple covariates in his model. Nutrition research scarcely ever uses a preregistered, written protocol.60 The discipline consists largely of exploratory research—even though it uses methodologies that ought to be confined to confirmatory research.61

Flimsy Food Findings applies the methodology of p-value plotting and simple counting to critique:

- a meta-analysis of the relationship between red and processed meats and health outcomes such as mortality, cancers and diabetes;62 and

- a meta-analysis of the relationship between soy protein intake and lipid markers (LDL cholesterol and other cholesterol markers) as surrogates for cardiovascular disease (CVD) risk reduction.63

In addition to this section, which draws on two technical studies that have been submitted for professional publication,64 other sections in this report include:

1) background on the U.S. Food and Drug Administration;

2) methods;

3) results;

4) discussion (including research integrity violations);

5) our recommendations for policy changes; and

6) methodological appendices, drawn both from material presented in the first Shifting Sands report and from new research.

Our policy recommendations include both specific technical recommendations directly following from our technical analyses, and broader policy recommendations to address the larger effects of the irreproducibility crisis on nutritional epidemiology, the scientific disciplines as a whole, and federal regulatory policy.

The FDA: Best Existing Practice in the Government?

Our analysis includes a case study of soy protein, currently under consideration by the FDA. The FDA’s initial determination is to remove the claim that soy protein provides a health benefit. Our research supports the FDA’s initial determination. We believe FDA procedures still need to be improved,65 but we note that our methodology in this case lends further support to a federal regulatory decision. We are glad that the evidence indicates that in this case the FDA is headed toward a correct decision.

Until such time as government agencies radically change their procedures to address the irreproducibility crisis, they should at least adopt Best Existing Practices within the government. These may well be the FDA’s.

U.S. Food and Drug Administration (FDA)

History and Role of the U.S. Food and Drug Administration

The administrative origin of the United States Food and Drug Administration (FDA) traces back to 1862, when the Department of Agriculture instituted a new Bureau of Chemistry. Successive acts of legislation, including the Food and Drugs Act (1906), the Federal Food, Drug, and Cosmetic Act (1938), the Kefauver-Harris Drug Amendments (1962), the Nutrition Labeling and Education Act (1990), the Dietary Supplement Health and Education Act (1994), and theFood and Drug Administration Modernization Act (1997), have expanded its remit and modernized the regulatory tools at its disposal.66 The FDA now regulates about 78% of the food ingested by Americans.67

The FDA now regulates about 78% of the food ingested by Americans.

After World War II, and particularly in the aftermath of the Thalidomide scandal of the later 1950s and early 1960s, the FDA’s mandate to enforce drug safety prompted it to adopt rigorous requirements for study design, centered upon the gold standard of the randomized clinical trial, and equally rigorous requirements for statistical analyses of the data. It adopted these techniques to fulfil the somewhat vague legislative mandate to assess “substantial evidence” by means of “adequate and well-controlled studies.” The FDA chose these techniques partly for their technical efficacy and partly because they would pass judicial muster when private manufacturers submitted legal challenges to the scientific validity of FDA regulations.68

Since the 1960s, the FDA has reviewed its study design and statistical analysis standards regularly. In collaboration with private industry and academic researchers, it has updated them to match the evolving best practices of scientific research.69

The FDA, as indicated by its name, also has been concerned with food safety for more than a century. Its continuingremit to protect American public health includes ensuring safety of the food supply.70 Both the U.S. Federal Food, Drug and Cosmetic Act (1938) and the Food and Drug Administration Modernization Act (1997) authorize the FDA to regulate health claims—any claim made on the label or in the labeling of a food that expressly or by implication characterizes the relationship of any substance to a disease or health condition.71 The FDA considers any statements on labels judged false or misleading by a significant scientific agreement among qualified experts to be “misbranding”.

The 1990s wave of legislation (the Nutrition Labeling and Education Act (1990), the Dietary Supplement Health and Education Act (1994), and the Food and Drug Administration Modernization Act (1997)) were intended to improve consumers’ health and well-being by providing them scientifically solid information about the foods they eat. They highlighted the salience of study design and statistical analysis, pioneered for drug regulation, and for the more broad and intensive food regulation required by these laws.

The FDA now requires that foods (except for meat from livestock, poultry and some egg products, which are regulated by the U.S. Department of Agriculture) be safe, wholesome, sanitary, and properly labeled.72 The FDA’s labeling requirements include mention of health claims that characterize the relationship between a substance (e.g., a food or food component) and a health benefit, a disease (e.g., cancer or cardiovascular disease), or a health condition (e.g., high blood pressure).73

These health claims, whether for good or for ill, must survive an FDA assessment based on rigorous study design and valid statistical analysis. (So too must nutrient content claims and structure/function claims.) The FDA articulates its regulatory requirements by means of a lengthy catalog of highly detailed Guidance Documents.74

Some research suggests that even scientifically accurate labels can be misleading and have limited ability to improve consumer health.75 But the general frailties of the discipline of nutritional epidemiology mean that not all labels are accurate, or even relevant.

Nutritional Epidemiology

FDA regulatory requirements require that evidence to support a health claim should be based on studies in humans.76 The randomized controlled trial (RCT), especially the randomized, placebo-controlled, double-blind intervention study, provides the strongest evidence among studies in humans.77 The best RCT certainly trumps the best observational study—and one might argue that a very indifferent RCT is still superior to the best observational study.78 Yet not all intervention studies on food and food components are RCTs, and frequently an RCT is unavailable and/or impractical. In these cases, the FDA must rely on lower-quality observational studies. It relies especially on cohort studies, dependent on dietary assessments based on FFQ analyses, and now pervasive in nutritional epidemiology.79

The discipline of nutritional epidemiology plays a vital role in the FDA’s labeling requirements. The FDA uses nutritional epidemiology to provide compelling scientific information to support its dietary recommendations and more coercive regulations.80 Nutritional epidemiologists, other nutritional scientists, and food-policy analysts from the food industries, academia, and government, are all involved at some level in funding, approving, or conducting nutrition studies aimed at developing, supporting, and/or assessing health claims.81

Nutritional epidemiology applies epidemiological methods to the study at the population level of how diet affects health and disease in humans. Nutritional epidemiologists base most of their inferences about the role of diet (i.e., foods and nutrients) in causing or preventing chronic diseases on observational studies. Since the 1980s, food frequency questionnaires (FFQs), which are easy to use, place low burdens on participants, and aspire to capture long-term dietary intake, have become the most common method by which nutritional epidemiologists measure dietary intake in large observational study populations.82

Nutritional epidemiology suffers many weaknesses. Critics have long noted that nutritional epidemiology relies predominantly on observational data, which researchers generally judge to be less reliable than experimental data, and that this generally weakens its ability to establish causality.83 The discipline’s research findings are also afflicted by frequent alterations of study design, data acquisition methods, statistical analysis techiques, and reporting of results.84 Selective reporting proliferates in published observational studies; researchers routinely test many questions and models during a study, and then only report results that are interesting (i.e., statistically significant).85

Nutritional epidemiology’s research findings are also afflicted by systematic alteration of study design, data acquisition, statistical analysis, and reporting of results.

Additional problems that limit the ability of nutritional epidemiology to substantiate claims of causal associations include:

- causal associations are difficult to prove in so complex a process as dietary intake, which includes interactions and synergies across different dietary components;

- researcher flexibility allows estimates of food to be analyzed and presented in several ways—as individual food frequencies, food groups, nutrient indexes, and food-group-specific nutrient indexes;

- researcher flexibility also allows dietary assessments to be presented with or without various adjustment factors, including other correlated foods and nutrients;

- researcher flexibility allows scientists to choose among the many nutrient−disease hypotheses that could be tested; and

- classic criteria for causation are often not met by nutritional epidemiologic studies, in large part because many dietary factors are weak and do not show linear dose-response relationships with disease risk within the range of exposures commonly found in the population.86

FDA Guidance Documents acknowledge the shortcomings of food consumption surveys, including FFQs, and generally note that observational studies are less reliable than intervention studies—but still allow FFQs to inform FDA regulations.87 And even published assessments of shortcomings in nutritional epidemiology procedures88 usually overlook the problems posed by multiple analysis.

Lack of Proper Multiplicity Control

The FDA does acknowledge some dangers from multiplicity analysis, notably in its Multiple Endpoints in Clinical Trials Guidance for Industry.89 Yet nutritional epidemiology suffers from the type of flawed statistical analysis that predictably and chronically inflates claims of statistical significance by failing to adjust for MTMM and by allowing researchers to search for results that are "statistically significant". Scientists have made these points repeatedly in professional and popular venues.90

The FDA’s health claim reviews examine factors including whether studies are controlled for bias and confounding variables, appropriateness of a study population, soundness of the experimental design and analysis, use of appropriate statistical analysis, and estimates of intake.91 These “reviews” do not address the MTMM problem. Nor do they compare the given analysis to a protocol analysis.

Consequences

Inaccurate labels can mislead consumers, not least by encouraging them to adopt fad diets that present health risks.92 Furthermore, every company in the food sector, which involved $6.22 trillion dollars in annual sales in 2020,93 depends for its livelihood on accurate labeling of food products. Mislabeling health benefits can give a company a larger market share than it deserves.

To take a more concrete example, the Code of Federal Regulations declares that “The scientific evidence establishes that diets high in saturated fat and cholesterol are associated with increased levels of blood total- and LDL-cholesterol and, thus, with increased risk of coronary heart disease,” and allows companies to make corollary health claims about reducing the risk of heart disease.94 The FDA duly notes on its Interactive Nutrition Facts Label that “Diets higher in saturated fat are associated with an increased risk of developing cardiovascular disease.”95

Yet recent research concludes that “Numerous meta-analyses and systematic reviews of both the historical and current literature reveals that the saturated-fat diet-heart hypothesis was not, and still is not, supported by the evidence. There appears to be no consistent benefit to all-cause or CVD mortality from the reduction of dietary saturated fat.”96 The law rather than the FDA’s approach to statistics was at issue here, but the financial consequences have been enormous: consumers have redirected billions of dollars toward producers of foods with less saturated fats, for a diet that may have no discernible health benefit.97

Case Studies

Our report uses p-value plotting, a method that has the potential to aid the FDA in reviewing nutritional health claims. We now demonstrate how this method works by applying the methodology of p-value plotting to critique:

- a meta-analysis of the relationship between red and processed meats and health outcomes such as mortality, cancers and diabetes;98 and

- a meta-analysis of the relationship between soy protein intake and lipid markers (LDL cholesterol and other cholesterol markers) as surrogates for cardiovascular disease (CVD) risk reduction.99

The second case study analyzes a topic currently under review by the FDA. The FDA has permitted soy protein products to display a heart health label based on soy protein’s claimed ability to lower cholesterol. The FDA now is considering whether to revoke the claim, originally allowed in 1999, due to a perceived lack of consistent low-density lipoprotein (LDL) cholesterol reduction in randomized controlled trials.100

Methods

General Approach for Study Analysis

The general approach that we used in our technical studies parallels the work of scholars such as Peace et al.101 We investigated the statistical reliability of methods used in nutritional epidemiology meta-analyses that utilize FFQ studies on cohort populations. Meta-analysis is a systematic procedure for statistically combining data from multiple studies that address a common research question, such as whether a particular food has an association with a disease (e.g., cancer).102

Peace et al. (2018) evaluated 10 published studies (base study papers) included in a meta-analysis of the association between ingestion of sugar-sweetened beverages and the risk of metabolic syndrome and type 2 diabetes.103 Peace et al. observed that the number of foods ranged from 60 to 165 across the 10 base study papers, and that none of the base study papers corrected for multiple testing or multiple modeling (MTMM) to account for chance findings.

The estimated number of statistical tests (or question asked on a same data set) can be referred to as “counts” or “analysis search space”.104 Counts/analysis search space for papers used in the Peace evaluation ranged from 3,072 to over 117 million.105 Again, we point out that five percent of questions asked in these studies works out to large numbers of signals of surprise (chance) findings! Peace et al. noted that paired with every p-value was an estimated effect. Any effect value from a base paper used in meta-analysis could well be a chance finding; the resulting meta-analytic statistic could equally well be biased.106

Consider the following example. If students are arranged from tallest to shortest and their heights recorded in the same order, we have a set of order statistics. We now consider more deeply the consequence of using order statistics such as a largest effect value (the largest order statistic), the expected values of order statistics, and their relation to p-values as a function of the number of observations in a sample (i.e., sample size). If we take a random sample from a population, and order the objects from smallest to largest, we denominate the reordered objects as “order statistics.” The value of the largest order statistic in the random sample is the largest number in the sample. The larger the sample size, N, taken from a population, the larger the deviation from the population mean the largest object's expected value will be. (See Figure 2.)

Figure 2: Expected value of largest order statistics and corresponding P-value for a sample size N from a normal distribution with a standard deviation of one107

|

N |

Expected deviation |

P-value |

|

30 |

2.043 |

0.04952 |

|

60 |

2.319 |

0.02709 |

|

100 |

2.508 |

0.01720 |

|

200 |

2.746 |

0.00919 |

|

350 |

2.927 |

0.00551 |

|

400 |

2.968 |

0.00487 |

|

1000 |

3.241 |

0.00119 |

|

5000 |

3.678 |

0.00024 |

This table shows, for instance, that if 1,000 different objects are drawn from the target population, the largest order statistic, on average, will lie 3.241 standard deviations away from the population mean, and will be extremely “significantly different” (p = .00119) from the population mean. A misinterpretation occurs in thinking that the largest order statistic can be used to represent an average of a group characteristic (i.e., the population mean). It does not.

Researchers who select “statistically significant” results from a multitude of possibilities essentially use an order statistic from a study to make a research claim. Meta-analysts in turn mistakenly take the order statistic to be a reliable number, which substantially affects the results of their meta-analysis. Unless allowance is made for the large sample space (i.e., MTMM corrections), misleading results are virtually certain to occur. We view meta-analysis computations as not robust. We believe our work is the first to highlight just how seriously a few p-hacked base studies can distort meta-analysis computations.108

We chose one meta-analysis of red meat and processed meat that used observational base studies109 and one meta-analysis of soy protein that used RCT base studies110 as representative of nutritional epidemiologic work in this area. We also chose the soy protein study because the FDA is currently considering policy in this area. We believe that problems with these studies likely plague most nutritional meta-analysis studies.

P-value Plots

Epidemiologists traditionally use confidence intervals instead of p-values from a hypothesis test to demonstrate or interpret statistical significance. Since researchers construct both confidence intervals and p-values from the same data, the one can be calculated from the other.111 We first calculated p-values from confidence intervals for all data used by Vernooij et al. (red and processed meats) and by Blanco Mejia et al. (soy protein, FDA case study).

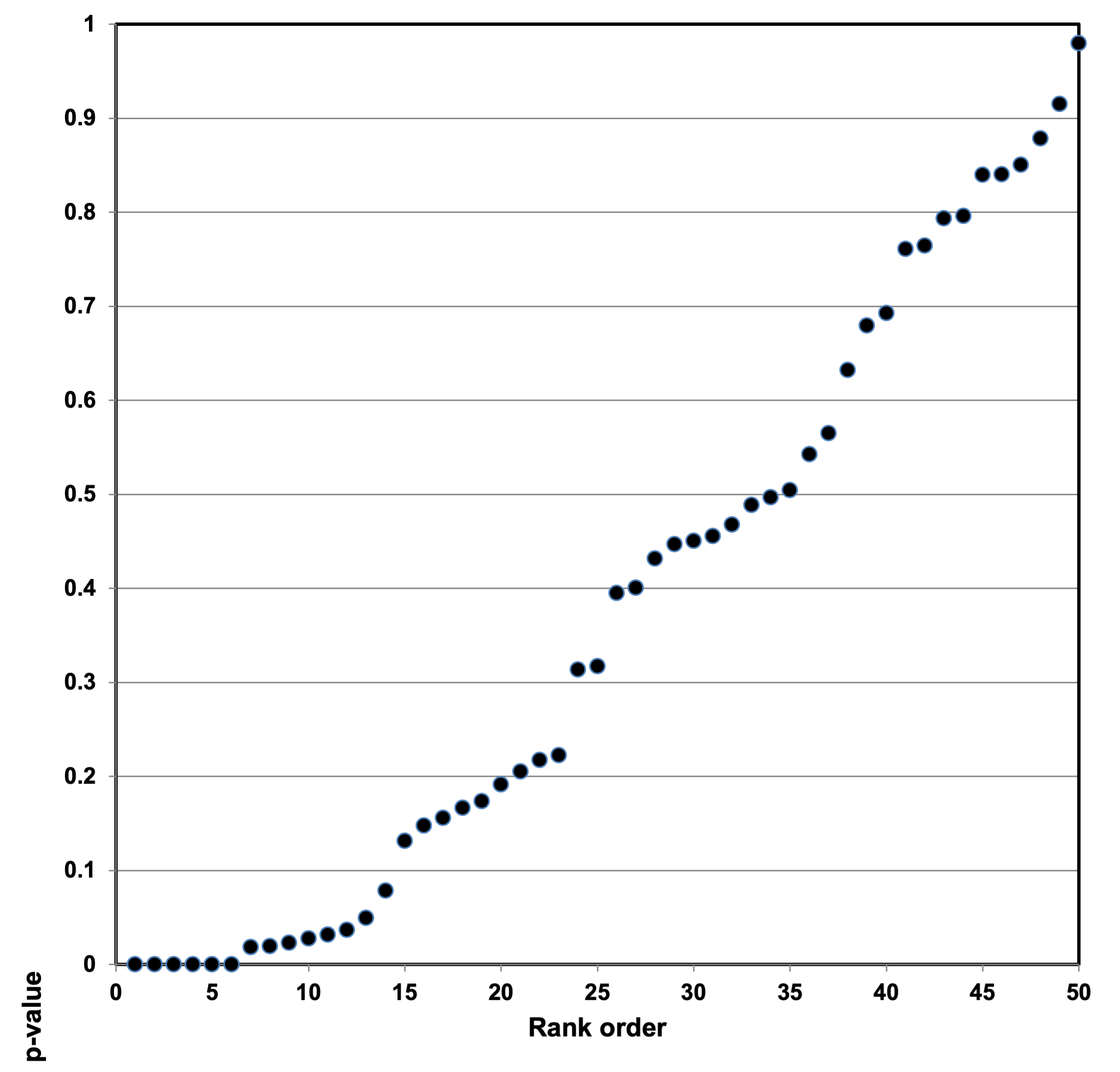

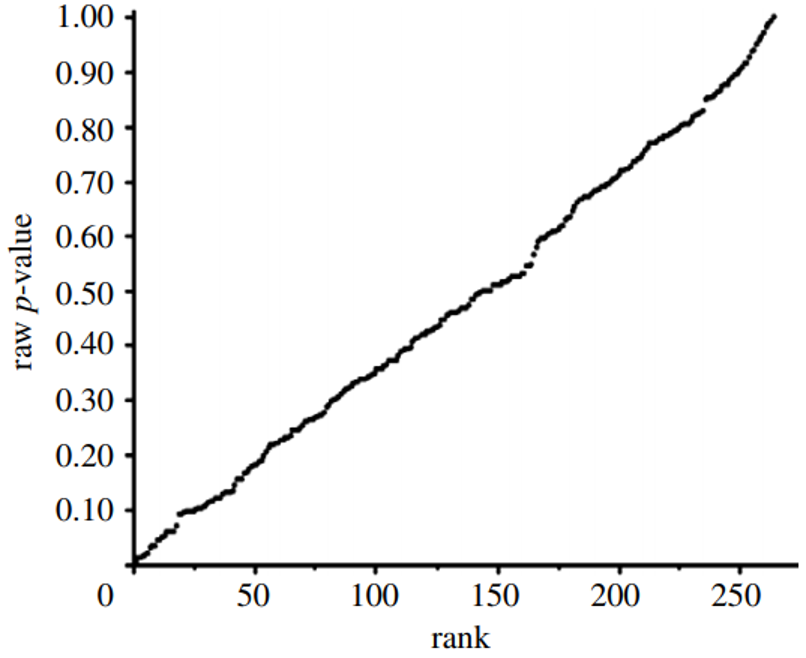

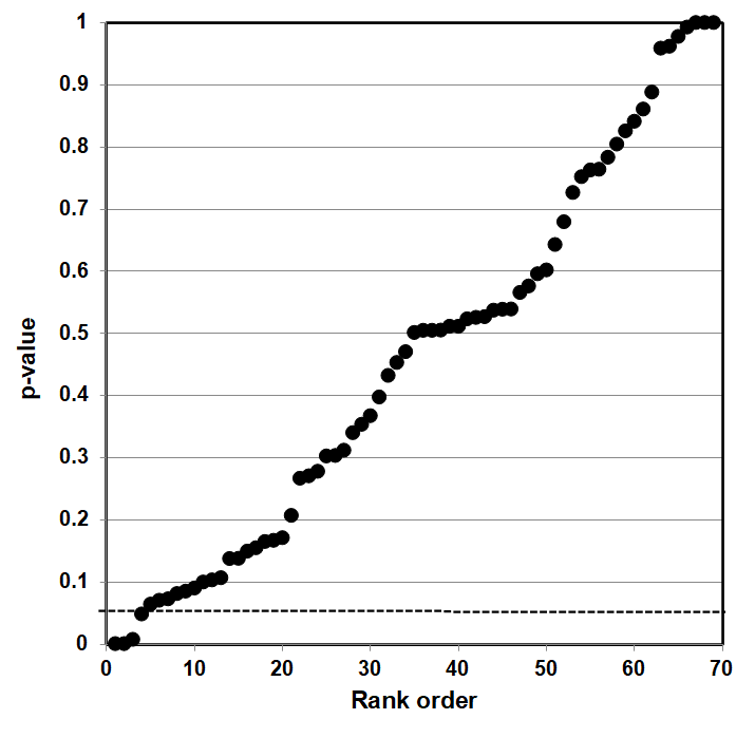

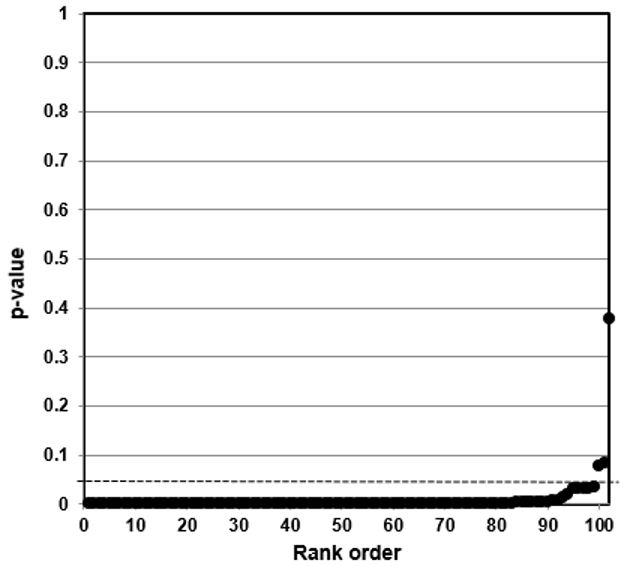

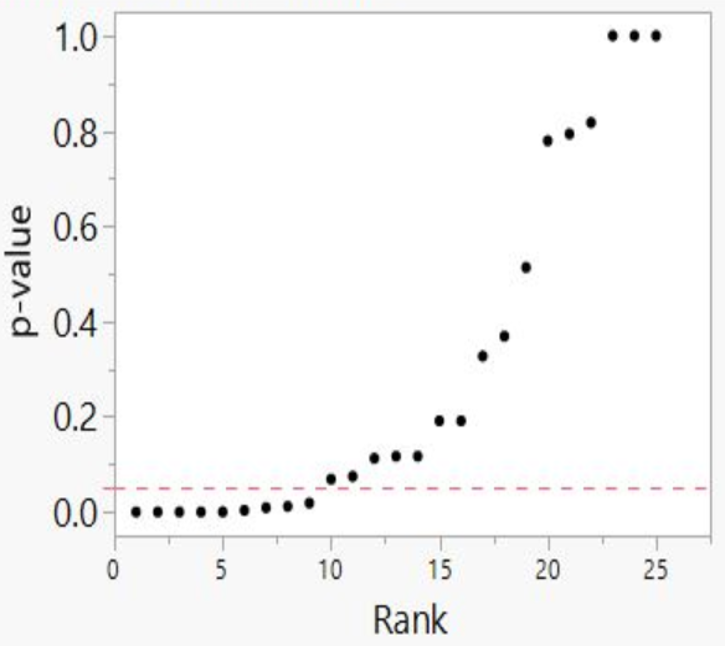

We then developed p-value plots, a method for correcting Multiple Testing and Multiple Modeling (MTMM), to inspect the distribution of the set of p-values.112 (For a longer discussion of p-value plots, see Appendix 4.) The p-value is a random variable derived from a distribution of the test statistic used to analyze data and to test a null hypothesis. In a well-designed study, the p-value is distributed uniformly over the interval 0 to 1 regardless of sample size under the null hypothesis and the distribution of true null hypothesis points in a p-value plot should form a straight line.113

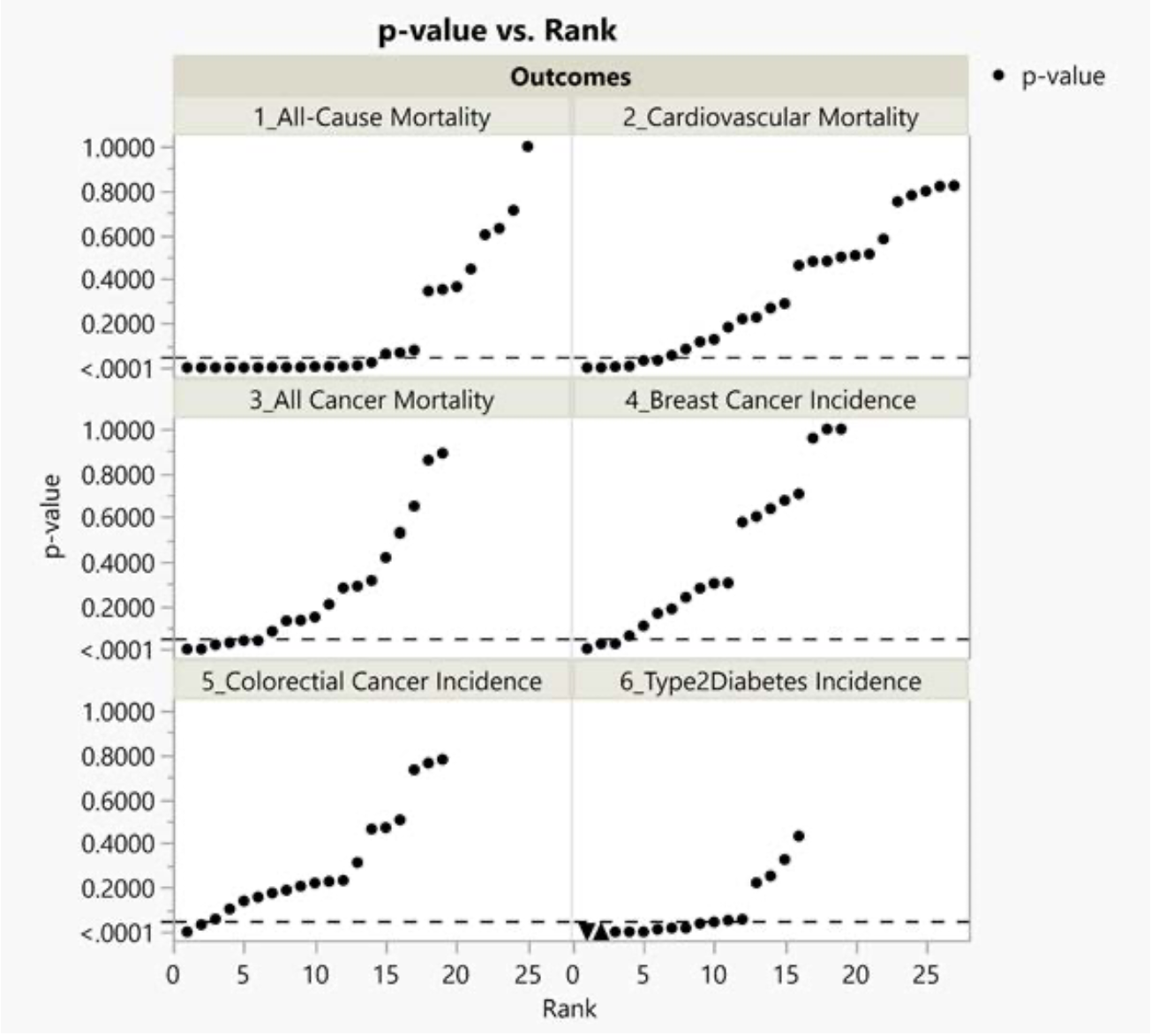

A plot of p-values corresponding to a true null hypothesis, when sorted and plotted against their ranks, should conform to a near 45-degree line. Researchers can use the plot to assess the reliability of base study papers used in meta-analyses. (For a longer discussion of meta-analyses, see Appendix 5.)

We constructed and interpreted p-value plots as follows:

● We computed and ordered p-values from smallest to largest and plotted them against the integers, 1, 2, 3, …

● If the points on the plot followed an approximate 45-degree line, we concluded that the p-values resulted from a random (chance) process, and that the data therefore supported the null hypothesis of no significant association.

● If the points on the plot followed approximately a line with a flat/shallow slope, where most of the p-values were small (less than 0.05), then the p-values provided evidence for a real (statistically significant) association.

● If the points on the plot exhibited a bilinear shape (divided into two lines), then the p-values used for meta-analysis are consistent with a two-component mixture and a general (over-all) claim is not supported; in addition, the p-value reported for the overall claim in the meta-analysis paper cannot be taken as valid.114

P-value plotting is not itself a cure-all. P-value plotting cannot detect every form of systematic error. P-hacking, research integrity violations, and publication bias will alter a p-value plot. But it is a useful tool which allows us to detect a strong likelihood that questionable research procedures, such as HARKing and p-hacking, have corrupted base studies used in meta-analysis and therefore rendered the meta-analysis unreliable. We may also use it to plot “missing papers” in a body of research, and thus to infer that publication bias has affected a body of literature.

We may also use p-value plotting to plot “missing papers” in a body of research, and thus to infer that publication bias has affected a body of literature.

To HARK is to hypothesize after the results are known—to look at the data first and then come up with a hypothesis that has a statistically significant result.115 (For a longer discussion of HARKing, see Appendix 6.)

P-hacking involves the relentless search for statistical significance and comes in many forms, including multiple testing and multiple modeling without appropriate statistical correction.116 It enables researchers to find nominally statistically significant results even when there is no real effect; to convert a fluke, false positive into a "statistically significant" result.117

Irreproducible research hypotheses produced by HARKing and p-hacking send whole disciplines chasing down rabbit holes. It allows scientists to pretend their “follow-up” research is confirmatory research; but in reality, their researchproduces nothing more than another highly tentative piece of exploratory research.118 In effect, bad techniques can lead to bad (irreproducible) claims.

P-value plotting is not the only means available by which to detect questionable research procedures. Scientists have come up with a broad variety of statistical tests to account for frailties in base studies as they compute meta-analyses. Unfortunately, questionable research procedures in base studies severely degrade the utility of the existing means of detection.119 We proffer p-value plotting not as the first means to detect HARKing and p-hacking in meta-analysis, but as a better means than alternatives which have proven ineffective.

Counting

Initially, we want to give readers a general understanding of how commonly FFQ data are used by researchers investigating health outcomes in the literature. The problem, as we have partially explained previously, is that researchers using FFQs—which are typically used in cohort studies—can subject their data to MTMM120 and produce large numbers of false positive results. To understand how commonly FFQ data are used, we used a Google Scholar (GS) search of the literature to estimate the number of citations with the exact phrase “food frequency questionnaire” and a particular “health outcome” (explained below).

We chose 18 health outcomes for this search component, including: obesity, inflammation, depression, mental health, all-cause mortality, high blood pressure, lung and other cancers, metabolic disorders, low birth weight, pneumonia, autism, suicide, COPD (i.e., chronic obstructive pulmonary disease), ADHD (i.e., attention-deficit/hyperactivity disorder), miscarriage, atopic dermatitis, reproductive outcomes, and erectile dysfunction.

Secondly, it is important to get some sense of the number of research questions under consideration in any given cohort study. It is time-consuming and expensive to set up and follow a cohort. But it is relatively inexpensive to add new measurements and research questions to an existing cohort. For those reasons, it is typical to have many research questions under consideration with a given cohort study. Any single paper coming from a cohort study might appear only focused on one question. However, there are almost always many questions at issue: the same cohort can be used repeatedly for different research purposes. When scientists produce many papers from the data of a single cohort study, and do not take explicit notice of their procedures and the necessary statistical corrections, it strongly suggests they have not corrected for MTMM.

We have focused on counting three categories that are central concerns of the Vernooij et al. and Blanco Mejia meta-analyses:

● Number of foods listed in food frequency questionnaires (FFQ) used in the base study papers. Very often a FFQ is part of a cohort study. People in the cohort are asked which foods they consumed, and often also asked the quantity consumed. A FFQ usually lists more than 60 foods, sometimes hundreds. If there are many foods and many health outcomes of interest, we should expect many claims at issue and many resulting papers. So, if a particular paper reports only one outcome and one cause, we are likely only seeing a small fraction of the number of claims under consideration.

● Number of questions considered in base study papers. For this, as we explained previously, we counted the causes (C), outcomes (O), and yes/no adjustment factors (A); where the number of questions = C × O × 2^A.

● Number of published papers for each cohort study used in the base study papers. We used a Google Scholar search to estimate the number of papers that contain the data set used by the cohort study. We preferred to be conservative in this estimate, so, for some data sets, we restricted the Google Scholar search to the paper’s title.

Figure 3: Google Scholar Search of Health Effects' Association with Foods121

|

RowID |

Outcome |

# of citations |

|

1 |

obesity |

42,600 |

|

2 |

inflammation |

23,100 |

|

3 |

depression |

18,000 |

|

4 |

mental health |

10,900 |

|

5 |

all-cause mortality |

10,700 |

|

6 |

high blood pressure |

9,470 |

|

7 |

lung and other cancers |

7,180 |

|

8 |

metabolic disorders |

5,480 |

|

9 |

low birth weight |

4,630 |

|

10 |

pneumonia |

2,140 |

|

11 |

autism |

2,080 |

|

12 |

suicide |

1,840 |

|

13 |

COPD |

1,800 |

|

14 |

ADHD |

1,370 |

|

15 |

miscarriage |

1,240 |

|

16 |

atopic dermatitis |

938 |

|

17 |

reproductive outcomes |

537 |

|

18 |

erectile dysfunction |

359 |

Figure 3 shows how frequently researchers use FFQ data to investigate 18 separate health outcomes. Scientists appear particularly interested in investigating the association between obesity and particular foods, but they also investigate more unexpected topics, such as the association between particular foods and erectile dysfunction. They are, as a group, thorough in seeking out possible associations.

Case Study #1: Red and Processed Meats

Introduction

The Johnston research group (Vernooij et al.) recently published a systematic review and meta-analysis of cohort studies pertaining to food health claims of red and processed meat.122 We selected 6 of 30 health outcomes that they reported on for further investigation: all-cause mortality, cancer mortality and incidence, cardiovascular mortality, nonfatal coronary heart disease, fatal and nonfatal myocardial infarction, and type 2 diabetes. We chose the 6 health outcomes studied most frequently in the base study papers.

Upon our request, the Johnston research group generously provided the data that we used for this report. We then used analysis search space counting123 and p-value plots124 to assess the claims about the health effects of red meat and processed meat.

Data Sets

The Johnston research group’s (Vernooij et al.) systematic review and meta-analysis reviewed 1,501 papers and selected 105 primary papers for further analysis. The data set included 70 study cohorts.125 The researchers used GRADE (Grading of Recommendations Assessment, Development and Evaluation) criteria126—which do not assess MTMM—to assess the reliability of the papers drawn from published literature and to select papers for their meta-analysis. Their study complied with the recommendations of PRISMA (Preferred Reporting Items for Systematic reviews and Meta-Analyses).127

Vernooij et al. stated that the base study papers used in their meta-analysis, which were observational studies, provided low- or very-low-certainty evidence according to the GRADE criteria. Vernooij et al. concluded that, “Low- or very-low-certainty evidence suggests that dietary patterns with less red and processed meat intake may result in very small reductions in adverse cardiometabolic and cancer outcomes.”128 In other words, their meta-analysis ascribed little confidence to the claim that decreased consumption of red meat or processed meat improves health.129

Results

Below we present results of our technical investigation about the association between red and processed meat with six health outcomes reported by Vernooij et al.130 We present a summary of the characteristics of the 15 base study papers we randomly selected from 105 Vernooij et al. base study papers in Figure 4.

Counting

We randomly selected 15 of the 105 base study papers (14%) for counting purposes. A 5—20% sample from a population whose characteristics are known is considered acceptable for most research purposes as it provides an ability to make generalizations for the population.131 We accepted Vernooij et al.’s judgment that their screening procedures selected 105 base study papers with sufficiently consistent characteristics for use in meta-analysis.

Figure 4: Characteristics of 15 Randomly Selected Papers from Vernooij et al.132

|

Citation# |

Base Paper 1st Author |

Year |

Foods |

Outcomes |

Causes (Predictors) |

Yes/no Adjustment Factors (Covariates) |

Tests |

Models |

Search Space |

|

8 |

Dixon |

2004 |

51 |

3 |

51 |

17 |

153 |

131,072 |

20,054,016 |

|

31 |

McNaughton |

2009 |

127 |

1 |

22 |

3 |

22 |

8 |

176 |

|

34 |

Panagiotakos |

2009 |

156 |

3 |

15 |

11 |

45 |

2,048 |

92,160 |

|

38 |

Héroux |

2010 |

18 |

32 |

18 |

9 |

576 |

512 |

294,912 |

|

47 |

Akbaraly |

2013 |

127 |

5 |

4 |

5 |

20 |

32 |

640 |

|

48 |

Chan |

2013 |

280 |

1 |

34 |

10 |

34 |

1,024 |

34,816 |

|

49 |

Chen |

2013 |

39 |

4 |

12 |

5 |

48 |

32 |

1,536 |

|

53 |

Maruyama |

2013 |

40 |

6 |

30 |

11 |

180 |

2,048 |

368,640 |

|

56 |

George |

2014 |

122 |

3 |

20 |

13 |

60 |

8,192 |

491,520 |

|

57 |

Kumagai |

2014 |

40 |

3 |

12 |

8 |

36 |

256 |

9,216 |

|

59 |

Pastorino |

2016 |

45 |

1 |

10 |

6 |

10 |

64 |

640 |

|

65 |

Lacoppidan |

2015 |

192 |

1 |

6 |

16 |

6 |

65,536 |

393,216 |

|

80 |

Lv |

2017 |

12 |

3 |

27 |

8 |

81 |

256 |

20,736 |

|

92 |

Chang-Claude |

2005 |

14 |

5 |

3 |

7 |

15 |

128 |

1,920 |

|

99 |

Tonstad |

2013 |

130 |

1 |

4 |

10 |

4 |

1,024 |

4,096 |

We note that while Willett’s early food frequency questionnaire (FFQ) studies investigated only 61 foods,133 these 15 base studies include FFQ−cohort studies examining as many as 280 foods134 and 32 different health outcomes.135

We present summary statistics of the 15 base study papers we randomly selected from 105 Vernooij et al. base study papers in Figure 5.

Figure 5: Summary statistics of 15 randomly selected papers from Vernooij et al.136

|

Statistic |

Foods |

Outcomes |

Causes (Predictors) |

Yes/no Adjustment Factors (Covariates) |

Tests |

Models |

Search Space |

|

minimum |

12 |

1 |

3 |

3 |

4 |

8 |

176 |

|

lower quartile |

40 |

1 |

8 |

7 |

18 |

96 |

1,728 |

|

median |

51 |

3 |

15 |

9 |

36 |

512 |

20,736 |

|

upper quartile |

129 |

5 |

25 |

11 |

71 |

2,048 |