Last week NAS spotlighted higher education’s widespread approval of the “outcomes assessment” movement—78% of colleges and universities now use “a common set of intended learning outcomes for all their undergraduate students,” according to a survey by the Association of American Colleges and Universities (AAC&U). After we posted our article, a reader wrote in:

I learned a lot about the "outcomes" excitement at this weekend's Education Writers Association conference. There's a growing U.S. movement to replicate Europe's Bologna outcome standards, "bottom-up" (via interviews with faculty, alums, employers) rather than top-down (from the Feds), both generally and by discipline, identifying what outcomes a U.S. AA, BA, MA (or AS, BS, MS) in each field ought to be. See the folks in the "tuning" movement, who said at the conference that they are trying this out on a statewide level as pilot projects in a few disciplines in a few states.

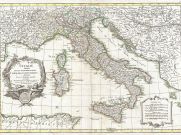

The Bologna Process was set in motion in 1999 when 29 countries met in the namesake city and signed the Bologna Declaration, “a binding commitment to an action programme.” Its two main objectives, with a 2010 deadline, are to adopt “a system of easily readable and comparable degrees,” and to adopt “a system essentially based on two main cycles, undergraduate and graduate.” In effect, the Bologna Process is a homogenizing one. It aims to standardize European higher education requirements and make it easier for students to transfer to universities in other EU countries.

But as our reader pointed out, the European method begins, not with a government mandate, but with the universities themselves. The Declaration “invites the higher education community to contribute to the success of the process of reform and convergence, [...] recognises the fundamental values and the diversity of European higher education, [...and] clearly acknowledges the necessary independence and autonomy of universities.” In this way, “Universities and other institutions of higher education can choose to be actors, rather than objects, of this essential process of change.”

Letting universities be “actors of change” sounds sensible. And here is one place where diversity in higher education is used in the traditional sense, meaning the variety of institution types—public, private, large, small, technical, liberal arts, residential, community, etc. Of course, there is much less variety in European higher education than in the United States. In most European nations, higher education has felt the heavy hand of state centralization for hundreds of years. In the U.S., that hand is still searching for its grip.

But how are individual colleges and universities to arrive at mutually agreeable conclusions?

That’s where Tuning comes in. Tuning Educational Structures in Europe explains how the Bologna Process should actually be carried out. It identifies specific learning outcomes (what students should know, e.g. “basic geometry of curves and surfaces”) and competences (skills students should have, e.g. “capacity for quantitative thinking”). The nine subjects for which learning outcomes have been established are Business Administration, Chemistry, Education Sciences, European Studies, History, Geology, Mathematics, Nursing, and Physics (notice that literature and other less quantifiable subjects haven’t yet made it to this list).

But let’s return to the other side of the Atlantic. The Lumina Foundation has decided to apply Europe’s Tuning approach in three states (Indiana, Minnesota, and Utah). Susan Robertson, writing at GlobalHigherEd, observes that bringing Tuning to the U.S. accords with the lofty education aims proposed recently by President Obama and others:

Similarly, the Tuning Process is regarded as a means for realizing one of the ‘big goals’ that Lumina Foundation President–Jamie Merisotis–had set for the Foundation soon after taking over the helm; to increase the proportion of the US population with degrees to 60% by 2025 so as to ensure the global competitiveness of the US.

According to the Chronicle of Higher Education (May 1st, 2009), Merisotis “gained the ear of the White House” during the transition days of the Obama administration in 2008 when he urged Obama “to make human capital a cornerstone of US economic policy.”

Merisotis was also one of the experts consulted by the US Department of Education when it sought to determine the goals for education, and the measures of progress toward those goals. [He was also a speaker at the Education Writers Association conference attended by our reader.]

By February 2009, President Obama had announced to Congress he wanted America to attain the world’s highest proportion of graduates by 2020. So while the ‘big goal’ had now been set, the question was how?

Yes, that’s a good question. NAS has remarked on such goals voiced by President Obama, the Lumina Foundation, the College Board, and the Carnegie Corporation. Their proposals, spurred by the nagging fear that the U.S. will get “left behind,” would require an incredible expansion of higher education—and would still not solve the “left behind” problem. As NAS president Peter Wood wrote, “We would have to massively redirect the nation’s resources to more than double the size of higher education in that time frame; virtually eliminate the possibility of students dropping out; and admit people to college study so grossly ill-equipped by lack of intelligence as well as lack of preparation that a college education would have to be radically defined downwards from its already low standard.”

President Obama and the others seem not to anticipate these troubles. Both the learning outcomes and the ‘big goals’ demonstrate an urge to produce measurable results—in hopes of eventually being able to point to the numbers and say, “See? All American graduates (that’s 60% of our population) know _________ and can do _________.” Right now the most we can say about college grads as a whole is that they graduate knowing too little.

The focus on learning outcomes, however, may only accentuate that problem. But that isn’t self-evident. Knowing what you want students to learn and making sure that most if not all of them actually learn it sounds pretty sensible. So where is the problem?

Let’s start with an example. NAS’s public relations director Glenn Ricketts, who teaches political science at Raritan Valley Community College, recalls his college’s efforts some twenty years ago to adopt learning outcomes assessment. In his view, “It was a colossal waste of the faculty’s time, constantly having to revise our course materials to resemble contractual agreements.” The idea was that each professor had to specify in advance exactly what his students would learn: a learning outcome. Professors knew they would be accountable to their administrations for these outcomes, so they had a strong incentive to low-ball their estimates. Added to the nuisance of having to rework syllabi was the faculty’s fear of legal action if a student felt he had not received his promised “learning outcome.” This too prompted efforts to eliminate intellectually challenging parts of the courses. In the end, says Ricketts, outcomes assessment “drags everyone down to the lowest common denominator.”

The practical outcome of outcomes assessment has often been an effort to establish bland goals that the teacher is pretty sure everyone can reach. The movement resembles compulsive to-do-list-making for the lazy: lists that include the academic equivalent of items like “be kind” or “eat well” or “spend time in nature.” (“Let’s see, I only swore at five drivers on my way to work, instead of all six who made me mad. I had grape soda at lunch – that counts as fruit, right? And I noticed an ant on the kitchen sink this afternoon...ah, nature.”) Crossing each item off is satisfying, no matter how minimally the tasks were carried out.

But surely Ricketts’ experience is exceptional? Outcomes assessment, like list making, definitely works in some contexts. Lesson plans in grade school and high school are framed by desired outcomes. Why can’t this success be transferred to college? Isn’t that what the Bologna Process is about? And the Lumina Foundation’s effort in the U.S.?

There are two reasons why outcomes assessment works reasonably well in K-12 education but stumbles when it reaches college. The first is institutional diversity. Colleges in the United States have disparate curricula. They define similar-sounding subjects in dissimilar ways, use different texts, and favor different pedagogies. Most American colleges also encourage faculty members to put their own stamp on their courses. “Outcomes assessment” can be projected onto this teeming variety of courses, approaches, and individual styles, but doing so means tailoring each course to its own one-of-a-kind outcome. That’s not very efficient. It takes a lot of time, as Professor Ricketts observed, and it puts in place disincentives for intellectual ambition and inventiveness.

The Bologna Process, it should be remembered, aims at standardizing a system not nearly as diverse as the American one. That’s because, for all the differences among the member states of the EU, most European countries have long had well-defined national curricula.

The second reason why outcomes assessment stumbles in American colleges is that we have traditionally given higher education a mission quite separate from that of high schools. College has been not so much about acquiring command of a basic body of knowledge and skills as it has been an effort to prepare students for “higher learning.” That means analysis, synthesis, confrontation with paradox, contemplation of unsolved and perhaps unsolvable problems, and the disciplined effort to see beyond what is already known. None of this is susceptible in any meaningful way to “outcomes assessment.” And to force outcomes assessment upon it is essentially to force higher education to lower itself, to make it more like high school and less like college.

Of course, there are parts of a college education that fit that mold. Some subjects are best approached at the undergraduate level as the acquisition of more fixed facts, techniques, and skills. Outcomes assessment might not have the second problem—the problem of higher reasoning—when applied to these courses, though it would still have the first problem, of institutional diversity.

Outcomes assessment has been carving ruts in American higher education for two decades. One might think we have had plenty of opportunity to assess whether it has done any good. And indeed, during that time, it has won few friends outside the accreditation agencies and the bureaucrats who run the programs. The Bologna Process, however, seems to have sparked new life in the old idea. The dream is to invent a new form of outcomes assessment that somehow steers clear of the shoals of triviality and the cliffs of centralized control that seem the usual fate of these ventures.

Perhaps Bologna will achieve that safe passage. But Americans, who have much more experience with outcomes assessment, should wait and see. We think it is way too soon to bring Bologna to our table.