Preface and Acknowledgments

Peter W. Wood

President

National Association of Scholars

This report uses statistical analyses to provide further evidence that our country’s public health bureaucrats gravely mishandled the federal government’s response to the COVID-19 pandemic. Other competent observers have been documenting lapses by the Centers for Disease Control and Prevention (CDC), the National Institutes of Health (NIH), the Chief Medical Advisor to the President, and other authorities since the early days of the pandemic. This report adds to and substantiates many of the previously published criticisms.

The National Association of Scholars (NAS) has been publicizing the dangers of the irreproducibility crisis for years, and now the crisis has played a major role in a public health policy catastrophe. Why does the irreproducibility crisis matter? What, practically, has it affected? Now our Exhibit A is the government’s COVID-19 public health policy.

I don’t need to explain what COVID-19 is to the general reader, but I do need to explain the nature and the extent of the irreproducibility crisis. It has had an ever more deleterious effect on a vast number of the sciences and social sciences, from epidemiology to social psychology. What went wrong in COVID-19 public health policy has gone wrong in a great many other disciplines.

The irreproducibility crisis is the product of improper research techniques, a lack of accountability, disciplinary and political groupthink, and a scientific culture biased toward producing positive results. Other factors include inadequate or compromised peer review, secrecy, conflicts of interest, ideological commitments, and outright dishonesty.

Science has always had a layer of untrustworthy results published in respectable places, and “experts” who were eventually shown to have been sloppy, mistaken, or untruthful in their reported findings. Irreproducibility itself is nothing new. Science advances, in part, by learning how to discard false hypotheses, which sometimes means dismissing reported data that does not stand the test of independent reproduction.

But the irreproducibility crisis is something new. The magnitude of false (or simply irreproducible) results reported as authoritative in journals of record appears to have dramatically increased. “Appears” is a word of caution, since we do not know with any precision how much unreliable reporting occurred in the sciences in previous eras. Today, given the vast scale of modern science, even if the percentage of unreliable reports has remained fairly constant over the decades, the sheer number of irreproducible studies has grown vastly. Moreover, the contemporary practice of science, which depends on a regular flow of large governmental expenditures, means that the public is, in effect, buying a product rife with defects. On top of this, the regulatory state frequently builds both the justification and the substance of its regulations on the basis of unproven, unreliable, and, sometimes, false scientific claims.

In short, many supposedly scientific results cannot be reproduced reliably in subsequent investigations and offer no trustworthy insight into the way the world works. A majority of modern research findings in many disciplines may well be wrong.

That was how the National Association of Scholars summarized matters in our report The Irreproducibility Crisis of Modern Science: Causes, Consequences, and the Road to Reform (2018).1 Since then we have continued our work toward reproducibility reform through several different avenues. In February 2020, we co-sponsored with the Independent Institute an interdisciplinary conference on Fixing Science: Practical Solutions for the Irreproducibility Crisis, to publicize the irreproducibility crisis, exchange information across disciplinary lines, and canvass (as the title of the conference suggests) practical solutions for the irreproducibility crisis.2 We have also provided a series of public comments in support of the Environmental Protection Agency’s rule Strengthening Transparency in Pivotal Science Underlying Significant Regulatory Actions and Influential Scientific Information.3 We have publicized different aspects of the irreproducibility crisis by way of podcasts and short articles.4

And we have begun work on our Shifting Sands project. In May 2021 we published Keeping Count of Government Science: P-Value Plotting, P-Hacking, and PM2.5 Regulation.5 In July 2022 we published Flimsy Food Findings: Food Frequency Questionnaires, False Positives, and Fallacious Procedures in Nutritional Epidemiology. This report, The Confounded Errors of Public Health Policy Response to the COVID-19 Pandemic, is the third of four that we will publish as part of Shifting Sands, each of which will address the role of the irreproducibility crisis in different areas of federal and state policy. In these reports we address a central question that arose after we published The Irreproducibility Crisis.

You’ve shown that a great deal of science hasn’t been reproduced properly and may well be irreproducible. How much government regulation is actually built on irreproducible science? What has been the actual effect on government policy of irreproducible science? How much money has been wasted to comply with regulations that were founded on science that turned out to be junk?

This is the $64 trillion dollar question. It is not easy to answer. Because the irreproducibility crisis has so many components, each of which could affect the research that is used to inform regulatory policy, we are faced with many possible sources of misdirection.

The authors of Shifting Sands include these just to begin with:

malleable research plans;

legally inaccessible data sets;

opaque methodology and algorithms;

undocumented data cleansing;

inadequate or non-existent data archiving;

flawed statistical methods, including p-hacking;

publication bias that hides negative results; and

political or disciplinary groupthink.

Each of these could have far-reaching effects on government regulatory policy—and for each of these, the critique, if well-argued, would most likely prove that a given piece of research had not been reproduced properly, not that it had actually failed to reproduce. (Studies can be made to “reproduce,” even if they don’t really.) To answer the question thoroughly, one would need to reproduce, multiple times, to modern reproducibility standards, every piece of research that informs governmental regulatory policy.

This should be done. But it is not within our means to do so.

What the authors of Shifting Sands did instead was to reframe the question more narrowly. Governmental regulation is meant to clear a high barrier of proof. Regulations should be based on a very large body of scientific research, the combined evidence of which provides sufficient certainty to justify reducing Americans’ liberty with a governmental regulation. What is at issue is not any particular piece of scientific research, but, rather, whether the entire body of research provides so great a degree of certainty as to justify regulation. If the government issues a regulation based on a body of research that has been affected by the irreproducibility crisis so as to create the false impression of collective certainty (or extremely high probability), then, yes, the irreproducibility crisis has affected government policy by providing a spurious level of certainty to a body of research that justifies a governmental regulation.

The justifiers of regulations based on flimsy or inadequate research often cite a version of what is known as the “precautionary principle.” This means that, rather than basing a regulation on science that has withstood rigorous tests of reproducibility, they base the regulation on the possibility that a scientific claim is accurate. They do this with the logic that it is too dangerous to wait for the actual validation of a hypothesis, and that a lower standard of reliability is necessary when dealing with matters that might involve severely adverse outcomes if no action is taken.

This report does not deal with the precautionary principle, since the principle summons a conclusiveness that lies beyond the realm of actual science. We note, however, that an invocation of the precautionary principle is not only non-scientific but is also an inducement to accept meretricious scientific practice, and even fraud.

The authors of Shifting Sands addressed the more narrowly framed question posed above. They applied a straightforward statistical test, Multiple Testing and Multiple Modeling (MTMM), and applied it to a body of meta-analyses used to justify government research. MTMM provides a simple way to assess whether any body of research has been affected by publication bias, p-hacking, and/or HARKing (Hypothesizing After the Results were Known)—central components of the irreproducibility crisis. In this third report, the authors applied this MTMM method to portions of the research underlying two aspects of nonpharmaceutical-intervention response to the COVID-19 pandemic that were formally or informally promoted by the Centers for Disease Control and Prevention (CDC): lockdowns and masking. Both these interventions were intended to reduce COVID-19 infections and fatalities, but the authors found persuasive circumstantial evidence that lockdowns and masking had no proven benefit to public health outcomes. Their technical studies suggest a far greater frailty (failure) in the system of epidemiological modeling and policy recommendations. That system, generally, grossly overestimated the potential effects of COVID-19 and, particularly, overestimated the potential benefit of lockdowns and masking. Their technical studies support recommendations for policy change to restructure the entire system of government policy based on epidemiological modeling, and not simply to apply cosmetic reforms to the existing system.

Confounded Errors broadens our critique of federal agencies from the Environmental Protection Agency (EPA) and the Food and Drug Administration (FDA) to include the CDC. More importantly, it highlights a whole new aspect of the irreproducibility crisis. The CDC and associated professions now rely heavily on a combination of epidemiology, statistics, and mathematical modeling. They do so to alter all sorts of individual and collective behavior, in the name of public health. This is alarming in itself, because public health agencies have taken it upon themselves to shift, for example, how people eat and whether or not to smoke. Of course, there are public health justifications—but this also allows the state and its servants to determine how citizens should live. Even with this relatively narrow scope, it is an astonishing expansion of state authority over individual lives.

Epidemiology already concerns itself with “surveillance” in the health context. It is reasonable to worry about the conflation of public health modeling and the parallel work by computer scientists to establish a broader surveillance state, to fear the marriage of the epidemiological model and the computer science algorithm. Meme transmission can be modeled; so can “public health” efforts to inhibit the reproduction of memes.

Put another way, Gelman and Loken’s “garden of forking paths” applies peculiarly to the world of modeling public health interventions. Gelman and Loken wrote of the world of statistical analysis that,

When we say an analysis was subject to multiple comparisons or “researcher degrees of freedom,” this does not require that the people who did the analysis were actively trying out different tests in a search for statistical significance. Rather, they can be doing an analysis which at each step is contingent on the data. The researcher degrees of freedom do not feel like degrees of freedom because, conditional on the data, each choice appears to be deterministic. But if we average over all possible data that could have occurred, we need to look at the entire garden of forking paths and recognize how each path can lead to statistical significance in its own way. Averaging over all paths is the fundamental principle underlying p-values and statistical significance and has an analogy in path diagrams developed by Feynman to express the indeterminacy in quantum physics.6

Researcher degrees of freedom apply to mathematical modeling. But modeling public health interventions translates these degrees of freedom from understanding the world to recommending policy; researcher degrees of freedom become intervention degrees of freedom, a phrase coined by the authors of Confounded Errors.

We may add to this the critique that modeling, by its nature, is intended to facilitate state action and, generally, forecloses serious consideration of the advantages of doing nothing.7 Modeling justifies state action; modeling relies on intervention degrees of freedom.

In my previous introductions I have written of the economic consequences of the irreproducibility crisis—of the costs, rising to the hundreds of billions annually, of scientifically unfounded federal regulations issued by the EPA and the FDA. I also have written about how activists within the regulatory complex piggyback upon politicized groupthink and false-positive results to create entire scientific subdisciplines and regulatory empires. The authors of Confounded Errors now bring into focus the deep connection between the irreproducibility crisis and the radical-activist state by their focus on intervention degrees of freedom. Americans have ceded governmental authority to professionals who claim the mantle of scientific authority—Jekylls who have imbibed too much of the potion of power and have become Hydes. The irreproducibility crisis in government is the intervention crisis. Intervention degrees of freedom mean the freedom of radical activists in federal bureaucracies to make policy, unrestrained by law, prudence, consideration of collateral damage, off-setting priorities, our elected representatives, or public opinion.

The use of the techniques of epidemiological modeling and computer algorithms to control public opinion—to remove any check to radical activist policy—is even more alarming. The silver lining is that our would-be Svengalis may fool themselves with their own false-positives and disseminate inefficient propaganda. But we cannot rely on their errors to check their malice.

We base our critique of COVID-19 public health policy on the narrow grounds of its relationship to the irreproducibility crisis and the intervention crisis. I am keenly aware that there are far more profound grounds with which to criticize COVID-19 public health policy. These criticisms levy charges of bad faith, politicized misconduct, and hysteria at much of our public health establishment, from Anthony Fauci on down. I certainly agree with the authors of Confounded Errors that the federal government should establish a commission to undertake a full-scale investigation and report on the origins and nature of COVID-19, as well as of public health policy errors committed during the response to COVID-19 by the CDC. Commission reports, of course, come and go. Such a report by itself, no matter how rigorously carried out, will mean little if it fails to attract widespread attention and to intensify public indignation at the misdeeds perpetrated in the name of “science.” Gaining the necessary level of attention will be hindered by the complicity of much of the national press and much of the science press in reinforcing the government’s false narratives.

What, then, is to be done? We must rely on the slowly crystallizing public recognition that the COVID-19 shutdown and many of the related measures taken in the name of public health were ill-founded. The regime of falsehoods cannot stand forever, and its collapse is already evident in the efforts of leaders such as Anthony Fauci, Francis Collins, and Rochelle Walensky to present exculpatory stories about their previous actions or simply to deny saying or doing what the record plainly shows. Nothing shows their vulnerability to serious, fact-based criticism more than their eagerness to flee from the positions they once touted as either impregnable scientific truths or the most promising precautionary measures given the uncertainties of the time.

The NAS also has written to oppose errors in COVID-19 public health policy as they apply to higher education.8 I do not judge it appropriate at this moment for the NAS to levy such charges—and I am glad that the authors of Confounded Errors have focused the report on scientists’ errors rather than scientists’ motives. But it certainly would be appropriate for Confounded Errors’ readers to use the evidence it presents to inform their broader judgment about the American public health establishment’s implementation of COVID-19 policy. Americans justly may wonder, and make informed conclusions, about whether such an extended period of scientific incompetence is accidental or intended.

Confounded Errors bolsters the case for policy reforms that would strengthen federal agencies’ procedures to assess research results, especially those grounded on statistical analyses—in environmental epidemiology, in nutritional epidemiology, in public health epidemiology and modeling, and in every government regulatory agency that justifies its actions with scientific or social-scientific research. It also justifies a new approach to science policy more generally. Americans must work to understand fully the connections between the irreproducibility crisis and the radical-activist state, to recognize the wide-ranging intervention crisis as a political problem of the first magnitude, and to draft policy solutions that will reassert the primacy of law, elected representatives, and the public over the arbitrary actions of the Lysenkos in government service who pretend to be Lavoisiers. We must reform not only the procedures of scientific research but also the procedures and powers of government expertise.

The National Association of Scholars, informed by Shifting Sands, will work on this larger problem. I hope we will have many colleagues to join us in this vital work.

Confounded Errors puts into layman’s language the results of several technical studies by members of the Shifting Sands team of researchers, S. Stanley Young and Warren Kindzierski. Some of these studies have been accepted by peer-reviewed journals; others have been submitted and are under review. As part of the NAS’s own institutional commitment to reproducibility, Young and Kindzierski pre-registered the methods of their technical studies. And, of course, the NAS’s support for these researchers explicitly guaranteed their scholarly autonomy and the expectation that these scholars would publish freely, according to the demands of data, scientific rigor, and conscience.

Confounded Errors is the third of four scheduled reports, each critiquing different aspects of the scientific foundations of federal regulatory policy. We intend to publish these reports separately and then as one long report, which will eliminate some necessary duplication in the material of each individual report. The NAS intends these four reports, collectively, to provide a substantive, wide-ranging answer to the question What has been the actual effect on government policy of irreproducible science?

I am deeply grateful for the support of many individuals who made Shifting Sands possible. The Arthur N. Rupe Foundation provided Shifting Sands’ funding—and, within the Rupe Foundation, Mark Henrie’s support and goodwill got this project off the ground and kept it flying. Two readers invested considerable time and thought to improve this report with their comments: William M. Briggs and Douglas W. Allen. David Acevedo copyedited Confounded Error with exemplary diligence and skill. David Randall, the NAS’s director of research, provided staff coordination for Shifting Sands—and, of course, Stanley Young has served as director of the Shifting Sands Project. Reports such as these rely on a multitude of individual, extraordinary talents.

Executive Summary

Scientists’ use of flawed statistics and editors’ complaisant practices both contribute to the mass production and publication of irreproducible research in a wide range of scientific disciplines. Far too many researchers use unsound scientific practices. This crisis poses serious questions for policymakers. How many federal regulations reflect irreproducible, flawed, and unsound research? How many grant dollars have funded irreproducible research? How widespread are research integrity violations? Most importantly, how many government regulations based on irreproducible science harm the common good?

The National Association of Scholars’ (NAS) project Shifting Sands: Unsound Science and Unsafe Regulation examines how irreproducible science negatively affects select areas of government policy and regulation governed by different federal agencies. We also seek to demonstrate procedures which can detect irreproducible research. This third policy paper in the Shifting Sands project focuses on failures by the U.S. Centers for Disease Control and Prevention (CDC) and the National Institutes of Health (NIH) to consider empirical evidence available in the public domain early in the COVID-19 pandemic.

The COVID-19 virus is not the plague or the Spanish flu. In effect, it is a very ordinary, new respiratory virus. It has a rather low case fatality rate. Over time it has become less lethal and more infectious, in line with viral evolutionary thinking. Historical wisdom for dealing with a new virus was to protect the weak and let natural immunity lead to herd immunity. Whereas COVID-19 infections were lethal primarily to elderly persons with comorbidities, the virus was sold to us by public health officials as a lethal danger to one and all.

Technical studies in our paper focused on two aspects of nonpharmaceutical intervention response to the COVID-19 pandemic: lockdowns and masking, which were both meant to reduce COVID-19 infections and fatalities. We used a novel statistical technique—p-value plotting—as a severe test to study specific claims made about the benefit to public health outcomes of these responses.

We found persuasive circumstantial evidence that lockdowns and masking had no proven benefit to public health outcomes. Our technical studies suggest a far greater frailty (failure) in the system of epidemiological modeling and policy recommendations. That system, generally, grossly overestimated the potential effects of COVID-19 and, particularly, overestimated the potential benefit of lockdowns and masking. We believe our technical studies support recommendations for policy change to restructure the entire system of government policy based on epidemiological modeling, and not simply to apply cosmetic reforms to the existing system.

We offer several recommendations to the CDC in particular, to government more generally, to the modeling profession, and to Americans as a whole about public health interventions.

Regarding civil liberty:

- Congress and the president should jointly convene an expert commission to set boundaries on the areas of private life which may be the subject of public health interventions.

- This commission’s rules should explicitly limit the scope of public health interventions to physical health, narrowly and carefully defined.

- All such public health interventions should be required to receive explicit sanction from both houses of Congress.

Regarding epidemiological (mathematical) modeling that forms a basis for CDC policymaking:

- Require pre-registration of mathematical modeling studies.

- Require mathematical modeling transparency and reproducibility.

- Formulate rules to reduce intervention degrees of freedom (see definition below) for modeling public health interventions to limit state action.

- Formulate guidelines that make explicit that modeling is meant to quantify the uncertainty of action, and that the CDC should convey to policymakers a quantification of the uncertainty of action rather than a prescription of certainty to justify action.

- The CDC should charter a commission to advise it in how to achieve these goals.

Regarding further commissions:

- The federal government should establish a commission to undertake a full-scale investigation and report on the origins and nature of COVID-19, as well as of public health policy errors committed during the response to COVID-19 by the CDC.

- As public health modeling naturally aligns with the use of computer science algorithms, social media censorship of heterodox, COVID-19-related posts depended on both. The federal government should establish a commission to provide guidelines for the federal funding, conduct, and regulation of the use of computer science algorithms, particularly as they are used by the federal government and by social media companies.

We have subjected the science underpinning the COVID-19 nonpharmaceutical interventions of lockdowns and masking to serious scrutiny. We believe the CDC should take account of our methods as it considers pandemic responses. Yet we care even more about reforming the procedures the CDC uses in general to assess pandemic responses.

The government should use the very best science—whatever the regulatory consequences. Scientists should use the very best research procedures—whatever result they find to assess pandemic responses. Those principles are the twin keynotes of this report. The very best science and research procedures involve building evidence on the solid rock of transparent, reproducible, and actually reproduced scientific inquiry, not on shifting sands.

Introduction

On March 11, 2020, the World Health Organization (WHO) officially declared COVID-19 a pandemic. In the next years, public health professionals largely formed policy responses to the pandemic. The response in most developed countries was a strategy of “suppression”9 or establishing rigorous “control regimes.”10 This strategy included various combinations of:

- widespread COVID-19 virus testing, contact tracing, and isolating;

- use of face masks in public;

- physical distancing;

- “lockdowns,” closed schools, and stay-at-home orders;

- limitations on mass gatherings; and

- improved ventilation systems at workplaces.

It also included the most extreme “zero-COVID” strategy (as it was known in China), which involved completely locking down the population.11

Public health professionals’ consensus strategy, crucially, assumed that COVID-19 itself was the great driver of mortality, that COVID-19 fatality rates were very high across a broad range of population subsets, and that these policies could substantially alter COVID-19 mortality. These three assumptions were used to justify the recommendation that as much of the general population as possible isolate itself in individual and family groups indefinitely, regardless of other costs.

We should note that these assumptions were based on the putative success of China in eradicating COVID-19 by means of a draconian lockdown regime, and, significantly, on mathematical modeling.12 Dr. Neil Ferguson of the Imperial College of London played a particularly important role by developing a pandemic mathematical model that projected that there would be hundreds of millions of deaths worldwide unless governments undertook such extreme protective actions.13 This reliance on mathematical modeling partly was an attempt to calculate proper policy in a timely fashion based on limited data about COVID-19 itself. Partly it was a consequence of modern scientific culture and institutions’ increasing dependence on highly complex and insufficient-quality mathematical models.

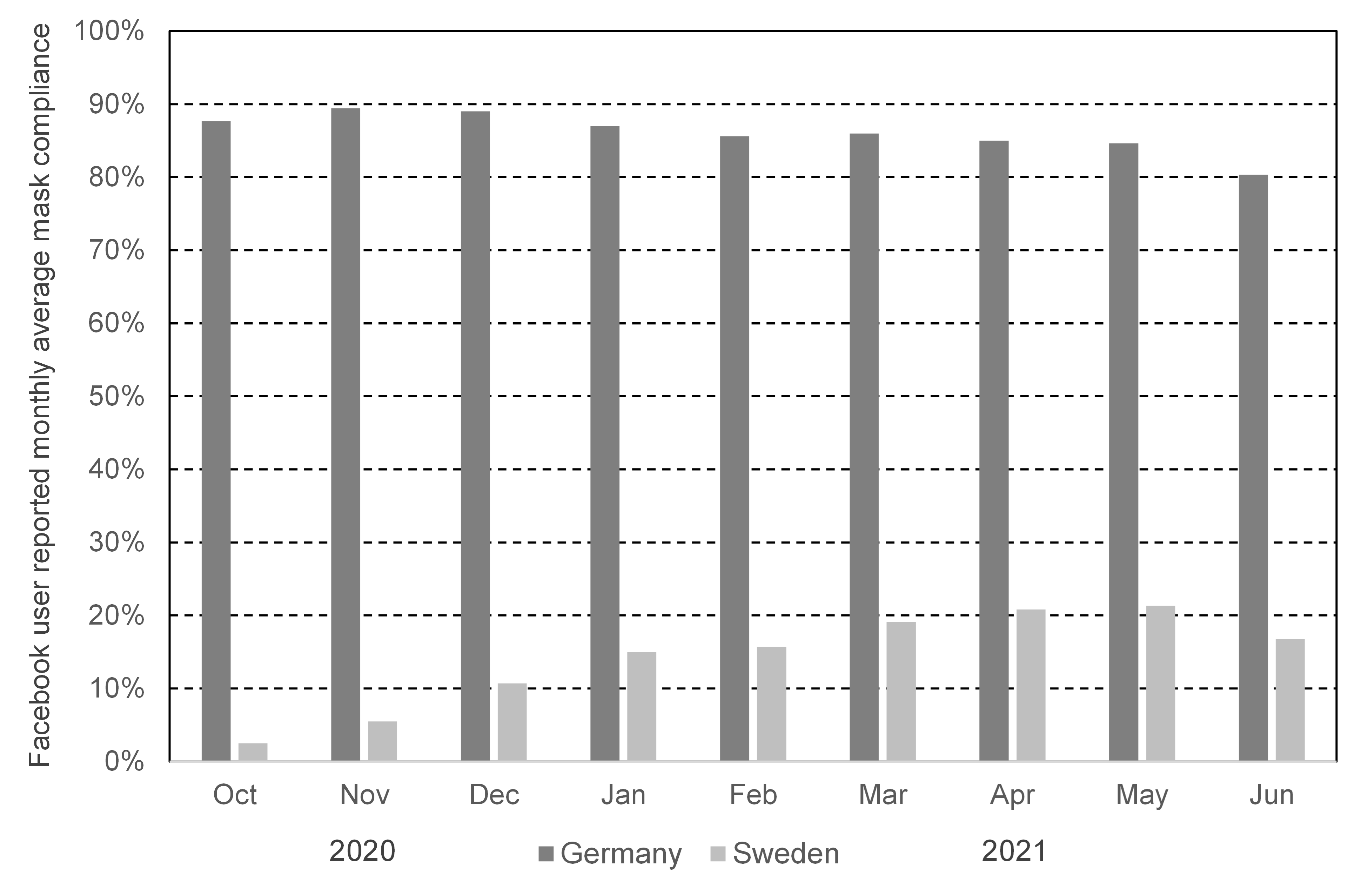

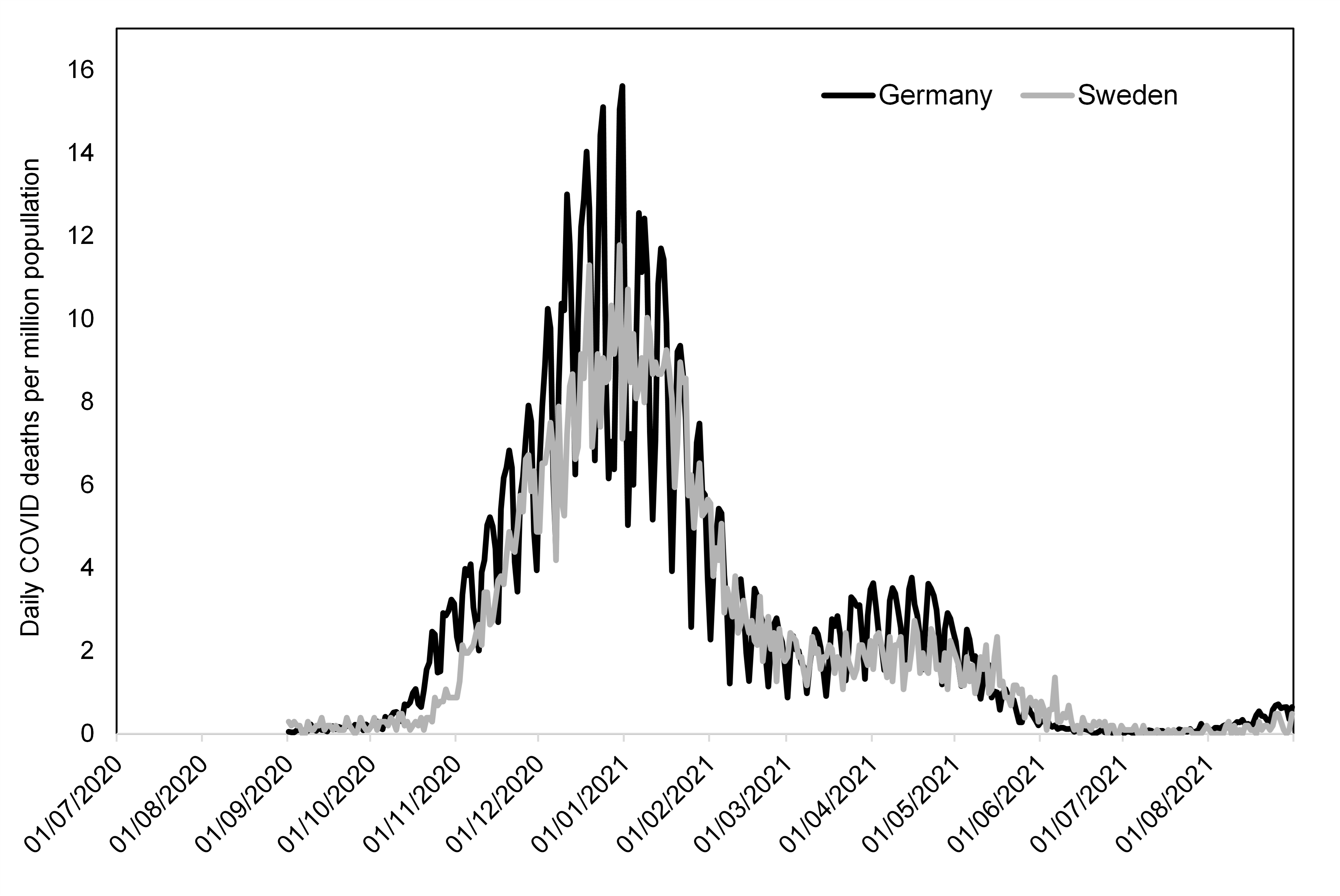

Sweden provided the most significant national departure from this strategy. Sweden also relied on public health professionals to determine its COVID-19 policy response, but these professionals, constrained by a constitution that did not allow for a state of emergency to be declared in peacetime,14 stuck to their own judgment rather than relying on an emerging quasi-consensus among their global peers. Sweden focused on protecting the most imperiled population sub-groups and allowed the population at large to interact freely and build up natural immunities.15 The Sweden strategy, we may note, essentially treated COVID-19 as a quasi-novel virus to which many had some prior immunity. Sweden persisted in its strategy despite substantial condemnation from the global public health establishment—condemnation which even extended to censorship of public justification of the strategy.16 Sweden ended up tied among Organisation for Economic Co-operation and Development countries for the lowest number of excess deaths from COVID-19.17

Within the United States, several states enacted policies that modified federal measures. Governor Ron DeSantis of Florida, notably, championed a strategy of protecting the most imperiled population sub-groups and preventing a continued total lockdown.18 As Sweden provided the most notable alternative on the world stage, Florida provided the most notable alternative strategy within the United States.

We now may conclude that Sweden and Florida enacted better public health policies than the world public health experts who relied on models such as Ferguson’s.

Implications of COVID-19 Suppression Strategies Used in the U.S.

Yet federal policy and CDC recommendations set the broad parameters for America’s COVID-19 public health policy. The suppression strategy that was enacted imposed severe costs on the American economy and society. What follows is a sampling of these costs, which were observed by November 2020:

- Between March 25 and April 10, 2020, nearly one-third of adults (31%) reported that their families could not pay the rent, mortgage, or utility bills; were food insecure; or went without medical care because of the cost.

- Q2 2020 GDP decreased at an annual rate of 32.9%, and Q1 2020 GDP decreased at an annual rate of 5%.

- Between March 25 and April 10, 2020, 41.5% of nonelderly adults reported having lost jobs, reduced work hours, or less income because of COVID-19.

- The unemployment rate increased to 14.7% in April 2020. This was the highest rate of increase (10.3%) and largest month-over-month increase in the history of available data (since 1948).

- In March, 39% of people with a household income of $40,000 and below reported a job loss.

- Mothers of children aged 12 and younger lost 2.2 million jobs between February and August (12% drop), while fathers of small children lost 870,000 jobs (4% drop).

- Preschool participation sharply fell from 71% pre-pandemic to 54% during the pandemic; the decline was steeper for young children in poverty.19

Similar consequences have been measured in later research.20 Such extraordinarily deleterious consequences require a very high public health justification—and the evidence that has emerged suggests that the assumptions used to justify the suppression-and-lockdown strategy were incorrect. More broadly, they suggest that the procedures of the public health establishment bear significant responsibility for their errors in judgment.

Reforming Government Regulatory Policy: The Shifting Sands Project

The National Association of Scholars’ (NAS) project Shifting Sands: Unsound Science and Unsafe Regulation examines how irreproducible science negatively affects select areas of government policy and regulation governed by different federal agencies.21 We also aim to demonstrate procedures which can detect irreproducible research. We believe government agencies should incorporate these procedures as they determine what constitutes “best available science”—the standard that judges which research should inform government regulation.22

In Shifting Sands we use an analysis strategy for all our policy papers⸺p-value plotting (a visual form of Multiple Testing and Multiple Modeling (MTMM) analysis)⸺as a way to demonstrate weaknesses in different agencies’ use of meta-analyses. MTMM corrects for statistical analysis strategies that produce a large number of false positive statistically significant results—and, since irreproducible results from base studies produce irreproducible meta-analyses, allows us to detect these irreproducible meta-analyses. (For a longer explanation of Multiple Testing Multiple Modeling and of statistical significance, see Appendixes 1 and 2.) Researchers doing epidemiological modeling studies should correct their work to take account of MTMM.23

Scientists generally are at least theoretically aware of this danger, albeit they have done far too little to correct their professional practices. Methods to adjust for MTMM have existed for decades. The Bonferroni method simply adjusts the p-value by multiplying the p-value by the number of tests. Westfall and Young provide a simulation-based method for correcting an analysis for MTMM.24

In practice, however, far too much “research” simply ignores the danger. Researchers can use MTMM until they find an exciting result to submit to the editors and referees of a professional journal—in other words, they can p-hack.25 Editors and referees have an incentive to trust, with too much complaisance, that researchers have done due statistical diligence, so they can publish exciting papers and have their journal recognized in the mass media.26 Some editors are part of the problem.27

The public health establishment’s practices are a component of the larger irreproducibility crisis, which has led to the mass production and publication of irreproducible research.28 Many improper scientific practices contribute to the irreproducibility crisis, including poor applied statistical methodology, bias in data reporting, publication bias (the skew toward publishing exciting, positive results), fitting the hypotheses to the data after looking at the data, and endemic groupthink.29 Far too many scientists use improper scientific practices, including an unfortunate portion who commit deliberate data falsification.30 The entire incentive structure of the modern complex of scientific research and regulation now promotes the mass production of irreproducible research.31 (For a longer discussion of the irreproducibility crisis, see Appendix 3.)

Many scientists themselves have lost overall confidence in the body of claims made in scientific literature.32 The ultimately arbitrary decision to declare p<0.05 as the standard of “statistical significance” has contributed extraordinarily to this crisis. Most cogently, Boos and Stefanski have shown that an initial result likely will not replicate at p<0.05 unless it possesses a p-value below 0.01, or even 0.001.33 Numerous other critiques about the p<0.05 problem have been published.34 Many scientists now advocate changing the definition of statistical significance to p<0.005.35 But even here, these authors assume only one statistical test and near-perfect study methods.

Researchers themselves have become increasingly skeptical of the reliability of claims made in contemporary published research.36 A 2016 survey found that 90% of surveyed researchers believed that research was subject to either a major (52%) or a minor (38%) crisis in reliability.37 Begley reported in Nature that 47 of 53 research results in experimental biology could not be replicated.38 A coalescing consensus of scientific professionals realizes that a large portion of “statistically significant” claims in scientific publications, perhaps even a majority in some disciplines, are false—and certainly should not be trusted until they are reproduced.39

Shifting Sands aims to demonstrate that the irreproducibility crisis has affected so broad a range of government regulation and policy that government agencies should now thoroughly modernize the procedures by which they judge “best available science.” Agency regulations should address all aspects of irreproducible research, including the inability to reproduce:

- the research processes of investigations;

- the results of investigations; and

- the interpretation of results.40

Our common approach supports a comparative analysis across different subject areas, while allowing for a focused examination of one dimension of the effect of the irreproducibility crisis on government agencies’ policies and regulations.

Keeping Count of Government Science: P-Value Plotting, P-Hacking, and PM2.5 Regulation focused on irreproducible research in environmental epidemiology that informs the Environmental Protection Agency’s policies and regulations.41

Keeping Count of Government Science: Flimsy Food Findings: Food Frequency Questionnaires, False Positives, and Fallacious Procedures in Nutritional Epidemiology focused on irreproducible research in nutritional epidemiology that informs much of the U.S. Food and Drug Administration’s nutrition policy.42

This third policy paper in the Shifting Sands project, Keeping Count of Government Science: The Confounded Errors of Public Health Policy Response to the COVID-19 Pandemic, focuses on failures by the U.S. Centers for Disease Control and Prevention (CDC) and the National Institutes of Health (NIH) to consider empirical evidence available in the public domain early in the pandemic.43 These mistakes eventually contributed to a public health policy that imposed substantial economic and social costs on the United States, with little or no public health benefit.

Confounded Error

Confounded Error provides an overview of the relevant history of the CDC and the NIH, of the history and character of the COVID-19 pandemic, and of the consequent public health policy response. We focus, however, on two aspects of COVID-19 and the related policy response:

- the effectiveness of lockdowns to reduce COVID-19 infections and fatalities; and

- the effectiveness of masking to reduce COVID-19 infections and fatalities.

We have applied Multiple Testing and Multiple Modeling analysis to both of these questions. P-value plots were used to independently assess the “reproducibility” of meta-analytical research claims made in literature for both cases (lockdowns, masks).

Informally, our report adopts Karl Popper’s empirical falsification approach, which underscores the importance to scientific theory of the falsification of hypotheses.44 CDC and NIH policy was predicated on the hypothesis that the United States’ suppression policy substantially benefited public health. We believe that our report provides substantial evidence, both collected from the existing literature and produced in our original research, to falsify this hypothesis.

In addition to presenting our research, other sections of this report include:

- discussion of our findings;

- our recommendations for policy changes; and

- appendixes.

Our policy recommendations include specific technical recommendations directly following from our technical analyses, with broader application for future federal regulatory pandemic policy response. They also include recommendations for a broader reform of the relation of professional expertise to policy formation.

COVID-19: Fumbling Forecasts and Ill-Planned Interventions

COVID-19 was an epidemic foretold. The 2002–2003 SARS epidemic presaged COVID-19 most closely, but, by 2019, epidemiologists had been engaged in contingency planning for a virulent outbreak of some sort for a generation—and in using modeling for several real disease outbreaks. The 9/11 terrorist attack, and the simultaneous use of anthrax as a bioweapon, made policymakers keenly aware of the need to plan for terrorist or state weaponization of infectious disease. The 2002–2003 SARS epidemic was followed by the possibility of an H5N1 influenza epidemic (2005), the H1N1 influenza pandemic (2009), the Ebola outbreak (2014–2016), and the Zika epidemic (2016–2017). The CDC and other epidemiologists used mathematical modeling throughout to estimate transmission, risks, and the effects of different public health interventions. Neil Ferguson’s work to model influenza directly influenced his later model for COVID-19.45

Forewarned, however, was not forearmed. Ferguson’s first COVID-19 model proved spectacularly misguided—and spectacularly influential, not least from the nightmare scenario it painted of COVID-19 response absent social distancing: “At one point, the [Ferguson] model projected over 2 million U.S. deaths by October 2020.” But even though models are supposed to be evaluated by their usefulness, scientists’ enthusiasm for Ferguson’s model was not dampened by its failure: “This model proved valuable not by showing us what is going to happen, but what might have been.”46 Even this encomium would appear to be misguided, since Ferguson’s model also predicted a nightmarishly high level of deaths, even with full lockdown policies enacted.

More precisely, Ferguson’s model failure, and the failures of other COVID-19 models, did not dampen enthusiasm among a large part of the professional community of epidemiological statisticians and modelers.47 This part of the professional community, which dominates the CDC and peer institutions, takes model failure to be a temporary shortcoming, data to be used to improve the next generation of models. Such professionals make carefully delimited suggestions for methodological reform: “It has been observed previously for other infectious diseases that an ensemble of forecasts from multiple models perform better than any individual contributing model.”48 They note the rationales for models whose simplicity led to profound policy errors, e.g., that modelers frequently prefer simple, parsimonious models, particularly to allow policy interventions to proceed quickly.49 Their retrospective on the history of COVID-19 modeling is one of bland, technocratic success:

In collaboration with academic, private sector, and US government modeling partners, the CDC rapidly built upon this modeling experience to support its coronavirus disease 2019 (COVID-19) response efforts. … The CDC Modeling Team collaborated with multiple academic groups to evaluate the potential impact of different reopening strategies in a simulated population. The evaluated strategies included: (1) closure throughout the 6-month prediction period; (2) reopening when cases decline below 5% of the peak daily caseload; (3) reopening 2 weeks after peak daily caseload; and (4) immediate reopening. This unique collaboration concluded that complete cessation of community spread of the disease was unlikely with any of these reopening strategies and that either additional stay-at-home orders or other interventions (eg, testing, contact tracing and isolation, wearing masks) would be needed to reduce transmission while allowing workplace reopening. This finding provided strong, timely evidence that control of the COVID-19 pandemic would require a balance of selected closure policies with other mitigation strategies to limit health impacts. The modeling results indicated that even moderate reductions in NPI [nonpharmaceutical intervention] adherence could undermine vaccination-related gains during the subsequent 2–3 months and that decreased NPI adherence, in combination with increased transmissibility of some SARS-CoV-2 variants, was projected to lead to surges in hospitalizations and deaths. These findings reinforced the need for continued public health messaging to encourage vaccination and the effective use of NPIs to prevent future increases in COVID-19.50

The policies that these researchers so blandly endorsed, meanwhile, were astonishingly and troublingly open-ended. In April 2020, for example, the WHO recommended that governments continue lockdowns until such time as they could achieve a set of six conditions alternately arbitrary or implausibly rigorous.

- Disease transmission is under control

- Health systems are able to “detect, test, isolate and treat every case and trace every contact”

- Hot spot risks are minimized in vulnerable places, such as nursing homes

- Schools, workplaces and other essential places have established preventive measures

- The risk of importing new cases “can be managed”

- Communities are fully educated, engaged and empowered to live under a new normal51

The last of these conditions left undefined “a new normal,” but it would seem to imply that governments should continue lockdowns until such time as the citizenry’s “fully educated” views and behavior coincided in all respects with the recommendations of public health experts. A technical model submitted to the public for judgment should not have the alteration of the public’s judgment as a component—much less hold the public hostage to continued lockdowns until they assent to supporting the lockdown policies.

Another part of the professional community has highlighted COVID-19 models’ methodological flaws, and their basic failure to predict events—presumably a sine qua non in a model.52 Collins and Wilkinson conducted a systematic review of 145 COVID-19 prediction models published or preprinted between January 3 and May 5, 2020, and discovered pervasive statistical flaws: different models suffered from small sample size, many predictors, arbitrarily discarded data and predictors, overfitted models, and a general lack of transparency about how they were created. These flaws frequently overlapped. In sum, “all models to date, with no exception, are at high risk of bias with concerns related to data quality, flaws in the statistical analysis, and poor reporting, and none are recommended for use.”53 Nixon et al. likewise stated of a sample of 136 papers that

a large fraction of papers did not evaluate performance (25%), express uncertainty (50%), or state limitations (36%). … Papers did not consistently state the precise objective of their model (unconditional forecast or assumption-based projection), detail their methodology, express uncertainty, evaluate performance across a long, varied timespan, and clearly list their limitations.54

Ioannidis et al. provided a scathing cumulative judgment:

Epidemic forecasting has a dubious track-record, and its failures became more prominent with COVID-19. Poor data input, wrong modeling assumptions, high sensitivity of estimates, lack of incorporation of epidemiological features, poor past evidence on effects of available interventions, lack of transparency, errors, lack of determinacy, consideration of only one or a few dimensions of the problem at hand, lack of expertise in crucial disciplines, groupthink and bandwagon effects, and selective reporting are some of the causes of these failures. … Even for short-term forecasting when the epidemic wave waned, models presented confusingly diverse predictions with huge uncertainty.55

Ioannidis et al. added to this judgment a larger critique of previous epidemiological modeling: “Predictions may work in ‘ideal’, isolated communities with homogeneous populations, not the complex current global world.”56

Ioannidis et al. address the argument that we need to consider the possibility of ‘doomsday pandemics’ with the sensible observation that we need to be sure that doomsday actually has arrived—and that we have tools available to help us make that assessment judiciously: “Upon acquiring solid evidence about the epidemiological features of new outbreaks, implausible, exaggerated forecasts should be abandoned. Otherwise, they may cause more harm than the virus itself.”57 They concluded with a catalogue of recommendations to modelers that constitute a devastating critique of standard operating practices among epidemiological modelers.

- Invest more on collecting, cleaning, and curating real, unbiased data, and not just theoretical speculations

- Model the entire predictive distribution, with particular focus on accurately quantifying uncertainty

- Continuously monitor the performance of any model against real data and either re-adjust or discard models based on accruing evidence

- Avoid unrealistic assumptions about the benefits of interventions; do not hide model failure behind implausible intervention effects

- Use up-to-date and well-vetted tools and processes that minimize the potential for error through auditing loops in the software and code

- Maintain an open-minded approach and acknowledge that most forecasting is exploratory, subjective, and non-pre-registered research

- Beware of unavoidable selective reporting bias58

Ioannidis, who has articulated his skepticism of many aspects of institutional COVID-19 research, and corollary policy, by appearing as an author in a very large body of scientific literature,59 is the foremost figure in the study of irreproducible research, and more generally of metaresearch, the study of scientific research’s “methods, reporting, reproducibility, evaluation, and incentives.”60 If Ioannidis says that epidemiological models are an irreproducible mess, and if thousands of epidemiologists assure the public that their models are excellent, a prudent man would give Ioannidis’ word greater weight.

The public ought to be able to do more than simply take the word of one scientist or another. Unfortunately, the very complexity of models makes it extraordinarily difficult to provide a standard by which to hold them accountable—aside from the common-sense standard, did they predict well? Then, too, while models are considered sufficiently solid to inform policy immediately, they are tentative enough in their claims that a disproven model can always be disclaimed with a shrug and a reply that we updated the data. The failure of one parameter informs a new parameterization, not a skepticism of parameters in general. The failure of one prediction can be ignored with resort to the general and the counterfactual: if you hadn’t followed our advice generally, millions would be dead. To say that a model failed is to invite the inevitable riposte, we’re doing it better now.

We focus our critique on two particular areas that speak to the accuracy of COVID-19 modeling: masks and lockdowns. We focus on these partly for their intrinsic importance.

- Lockdowns of the entire population were the most rigorous nonpharmaceutical intervention (NPI) response to the COVID-19 pandemic. Such lockdowns always were deeply controversial, and while China claimed to have successfully ended COVID-19 by means of particularly draconian COVID-19 policies, Sweden, Florida, and other entities rejected them in part or in whole.61 A substantial amount of political debate about COVID-19 policy turns upon the justification of lockdowns, and their efficacy.

- Masks, meanwhile, became a highly visual “condensed” symbol of the entire COVID-19 policy regime.62

We chose these two metrics in particular because Ioannidis published a critique of COVID-19 modeling on March 19, 2020, that focused on the effects of both lockdowns and masks: “Maintaining lockdowns for many months may have even worse consequences than an epidemic wave that runs an acute course. … randomized trials should evaluate also the real-world effectiveness of simple measures (eg face masks in different settings).”63 Ioannidis’ contemporary critique further justifies a retrospective critique of these aspects of COVID-19 modeling.

Technical Studies: Methods

Our technical studies include their own methods sections, written for a professional audience. We provide this methods section for a lay audience.

P-value Plots

Epidemiologists traditionally use confidence intervals instead of p-values from a hypothesis test to demonstrate or interpret statistical significance. Since researchers construct both confidence intervals and p-values from the same data, the one can be calculated from the other.64 We then develop p-value plots, a method for correcting Multiple Testing and Multiple Modeling (MTMM), to inspect the distribution of the set of p-values.65 (For a longer discussion of p-value plots, see Appendix 4.) The p-value is a random variable derived from a distribution of the test statistic used to analyze data and to test a null hypothesis.66 In a well-designed study, the p-value is distributed uniformly over the interval 0 to 1 regardless of sample size under the null hypothesis, and the distribution of true null hypothesis points in a p-value plot should form a straight line.67

A plot of p-values corresponding to a true null hypothesis, when sorted and plotted against their ranks, should conform to a near 45-degree line. Researchers can use the plot to assess the reliability of base study papers used in meta-analyses. (For a longer discussion of meta-analyses, see Appendix 5.)

We construct and interpret p-value plots as follows:

- We compute and order p-values from smallest to largest and plot them against the integers, 1, 2, 3, …

- If the points on the plot follow an approximate 45-degree line, we conclude that the p-values resulted from a random (chance) process, and that the data therefore supported the null hypothesis of no significant association.68

- If the points on the plot follow approximately a line with a flat/shallow slope, where most of the p-values were small (less than 0.05), then the p-values provide evidence for a real (statistically significant) association.

- If the points on the plot exhibit a bilinear shape (divided into two lines), then the p-values used for meta-analysis are consistent with a two-component mixture and a general (overall) claim is not supported; in addition, the p-value reported for the overall claim in the meta-analysis paper cannot be taken as valid.69

The formal meta-analysis process is strictly analytic. It computes an overall statistic for those test statistics combined, whereupon a research claim is made from the overall statistic. The meta-analysis computational method is flawed, given that, as Nelson et al. (2018) state, “if there is some garbage in, then there is only garbage out.”70 P-value plotting is an independent method to assess heterogeneity of the test statistics combined in meta-analysis to examine whether garbage is present.

P-value plotting is not by itself a cure-all. P-value plotting cannot detect every form of systematic error. P-hacking, research integrity violations, and publication bias will alter a p-value plot. But it is a useful tool which allows us to detect a strong likelihood that questionable research procedures, such as HARKing (see below) and p-hacking, have corrupted base studies used in meta-analysis and, therefore, have rendered the meta-analysis unreliable. We may also use it to plot “missing papers” in a body of research, and thus to infer that publication bias has affected a body of literature.

We may also use p-value plotting to plot “missing papers” in a body of research, and thus to infer that publication bias has affected a body of literature.

To HARK is to hypothesize after the results are known—to look at the data first and then come up with a hypothesis that has a statistically significant result.71 (For a longer discussion of HARKing, see Appendix 6.)

P-hacking involves the relentless search for statistical significance and comes in many forms, including MTMM without appropriate statistical correction.72

Irreproducible research hypotheses produced by HARKing and p-hacking send whole disciplines chasing down rabbit holes. This allows scientists to pretend their “follow-up” research is confirmatory research; but in reality, their research produces nothing more than another highly tentative piece of exploratory research.73

P-value plotting is not the only means available by which to detect questionable research procedures. Scientists have come up with a broad variety of statistical tests to account for frailties in base studies as they compute meta-analyses. Unfortunately, questionable research procedures in base studies severely degrade the utility of the existing means of detection.74 We proffer p-value plotting not as the first means to detect HARKing and p-hacking in meta-analysis, but as a better means than alternatives which have proven ineffective.

We proffer p-value plotting not as the first means to detect HARKing and p-hacking in meta-analysis, but as a better means than alternatives which have proven ineffective.

Public Health Interventions: Lockdowns

1. Introduction

On March 11, 2020, the WHO officially declared COVID-19 a pandemic.75 Many governments subsequently adopted aggressive pandemic policies.76 Examples of these policies, imposed as large-scale restrictions on people, included: quarantine (stay-at-home) orders; masking orders in community settings; nighttime curfews; closures of schools, universities, and many businesses; and bans on large gatherings.77

The objective of this study was to use a p-value plotting statistical method (after Schweder & Spjøtvoll) to independently evaluate specific research claims related to COVID-19 quarantine (stay-at-home) orders in published meta-analysis studies.78 This was done in an effort to illustrate the importance of the reproducibility of research claims arising from this nonpharmaceutical intervention in the context of the surge of COVID-19 papers in literature over the past few years.

2. Method

We first wanted to gauge the number of reports of meta-analysis studies cited in literature related to some aspect of COVID-19. To do this we again used the Advanced Search Builder capabilities of the PubMed search engine.79 Our search returned 3,204 listings in the National Library of Medicine database. This included 633 listings for 2020, 1,301 listings for 2021, and 1,270 listings thus far for 2022. We find these counts astonishing, in that a meta-analysis is a summary of available papers.

Given our understanding of the pre-COVID-19 research reproducibility of published literature discussed above, we speculated that there may be numerous meta-analysis studies relating to COVID-19 that are irreproducible. We prepared and posted a research plan on the Researchers.One platform.80 This plan can be accessed and downloaded without restrictions from the platform. Our plan was to use p-value plotting to independently evaluate four selected published meta-analysis studies specifically relating to possible health outcomes of COVID-19 quarantine (stay-at-home) orders—also referred to as ‘lockdowns’ or ‘shelter-in-place’ in literature.

2.1 Data sets

As stated in our research plan,81 we considered four meta-analysis studies in our evaluation:

- Herby et al. (2022) – mortality82

- Prati & Mancini (2021) – psychological effects (specifically, mental health symptoms)83

- Piquero et al. (2021) – reported incidents of domestic violence84

- Zhu et al. (2022) – suicidal ideation (thoughts of killing yourself)85

We downloaded and read electronic copies of each meta-analysis study (and any corresponding electronic supplementary information files).

Herby et al. (2022)86 – The Herby et al. (2022) meta-analysis examined the effect of COVID-19 quarantine (stay-at-home) orders implemented in 2020 on mortality based on available empirical evidence. These orders were defined as the imposition of at least one compulsory, non-pharmaceutical intervention. Herby et al. initially identified 19,646 records that could potentially address their purpose.

After three levels of screening by Herby et al., 32 studies qualified. Of these, estimates from 22 studies could be converted to standardized measures for inclusion in their meta-analysis. For our evaluation, we could only consider results for 20 of the 22 studies (data they provided for two studies could not be converted to p-values). Their research claim was: “lockdowns in the spring of 2020 had little to no effect on COVID-19 mortality.”

Prati & Mancini (2021)87 – The Prati & Mancini (2021) meta-analysis examined the psychological effects of COVID-19 quarantine (stay-at-home) orders on the general population. These included: mental health symptoms (such as anxiety and depression), positive psychological functioning (such as well-being and life-satisfaction), and feelings of loneliness and social support as ancillary outcomes.

Prati & Mancini initially identified 1,248 separate records that could potentially address their purpose. After screening, they identified and assessed 63 studies for eligibility and ultimately considered 25 studies for their meta-analysis. For our evaluation, we used all 20 results they reported on for mental health symptoms. Their research claim was: “lockdowns do not have uniformly detrimental effects on mental health and most people are psychologically resilient to their effects.”

Piquero et al. (2021)88 – The Piquero et al. (2021) meta-analysis examined the effect of COVID-19 quarantine (stay-at-home) orders on reported incidents of domestic violence. They used the following search terms to identify suitable papers with quantitative data to include in their meta-analysis: “domestic violence,” “intimate partner violence,” or “violence against women.”

Piquero et al. initially identified 22,557 records that could potentially address their purpose. After screening, they assessed 132 studies for eligibility and ultimately considered 18 studies in their meta-analysis. For our evaluation, we used all 17 results (effect sizes) that they presented from the 18 studies. Their research claim was: “incidents of domestic violence increased in response to stay-at-home/lockdown orders.”

Zhu et al. (2021)89 – The Zhu et al. (2021) meta-analysis examined the effect of COVID-19 quarantine (stay-at-home) orders on suicidal ideation and suicide attempts among psychiatric patients in any setting (e.g., home, institution, etc.). They used the following search terms to identify suitable papers with quantitative data to include in their meta-analysis: “suicide,” “suicide attempt,” “suicidal ideation,” or “self-harm”; “psychiatric patients,” “psychiatric illness,” “mental disorders,” “psychiatric hospitalization,” “psychiatric department,” “depressive symptoms,” or “obsessive-compulsive disorder.”

Zhu et al. initially identified 728 records that could potentially address their purpose. After screening, they assessed 83 studies for eligibility and ultimately considered 21 studies in their meta-analysis. For our evaluation, we used all 12 results that they reported on for suicidal ideation among psychiatric patients. Their research claim was: “estimated prevalence of suicidal ideation within 12 months [during COVID] was … significantly higher than a world Mental Health Survey conducted by the World Health Organization (WHO) in 21 countries [conducted 2001−2007].”

2.2 P-value plots

Epidemiologists traditionally use risk ratios and confidence intervals instead of p-values from a hypothesis test to demonstrate or interpret statistical significance. Altman & Bland show that both confidence intervals and p-values are constructed from the same data, that they are inter-changeable, and that one can be calculated from the other.90

Using JMP statistical software (SAS Institute, Cary, NC), we estimated p-values from risk ratios and confidence intervals for all data in each of the meta-analysis studies. In the Herby et al. meta-analysis, standard error (SE) was presented instead of confidence intervals. Where SE values were not reported, we used the median SE of the other base studies used in the meta-analysis (6.8). The p-values for each meta-analysis are summarized in an Excel file (.xlsx format) that can be downloaded at our posted Researchers.One research plan.91

We then created p-value plots after Schweder & Spjøtvoll to inspect the distribution of the set of p-values for each meta-analysis study.92

3. Results

3.1 Mortality

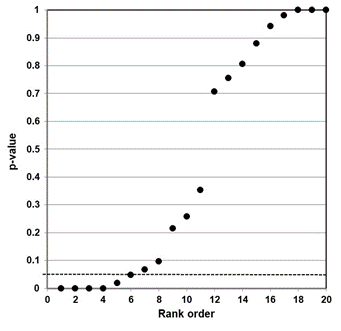

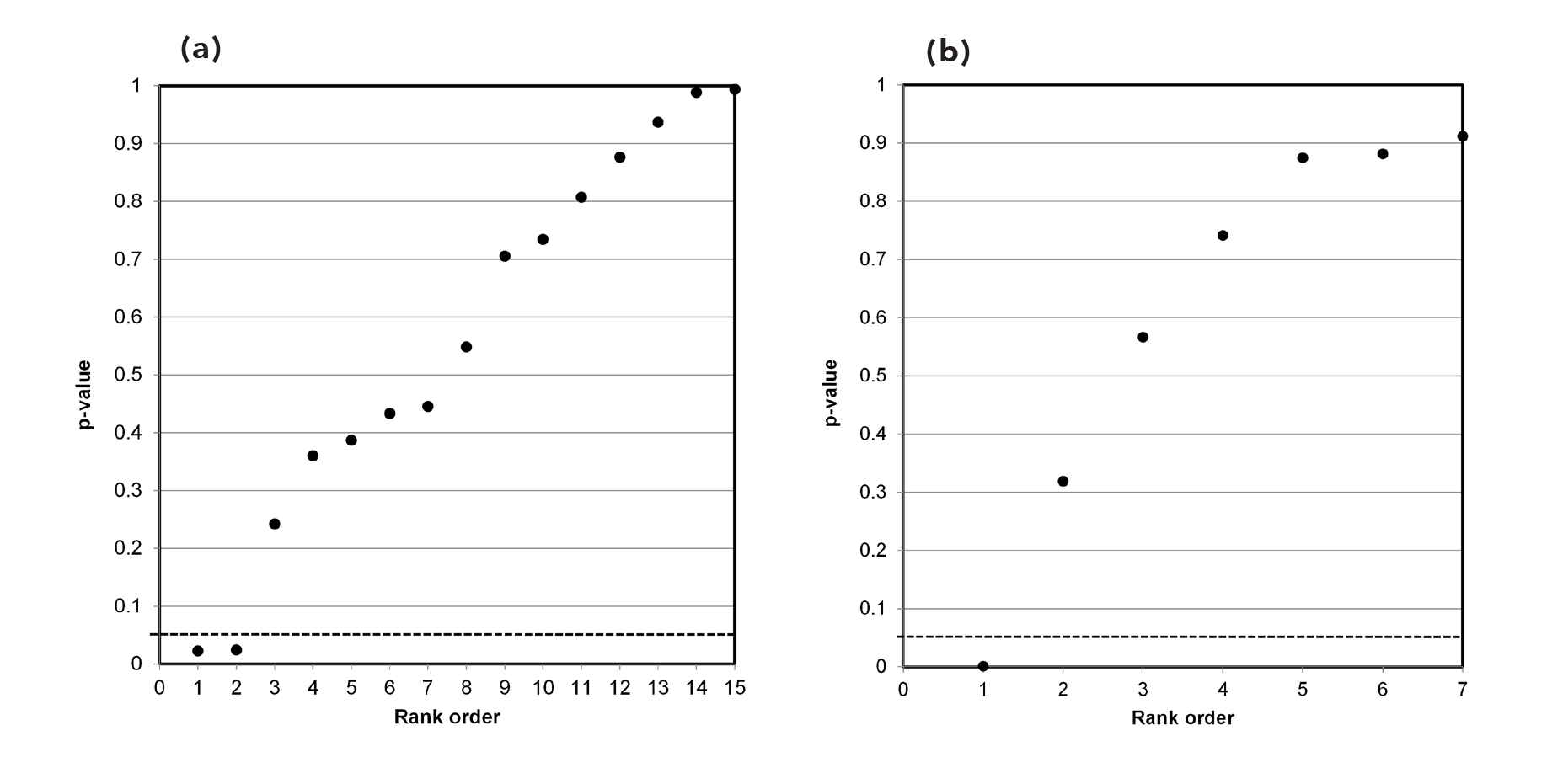

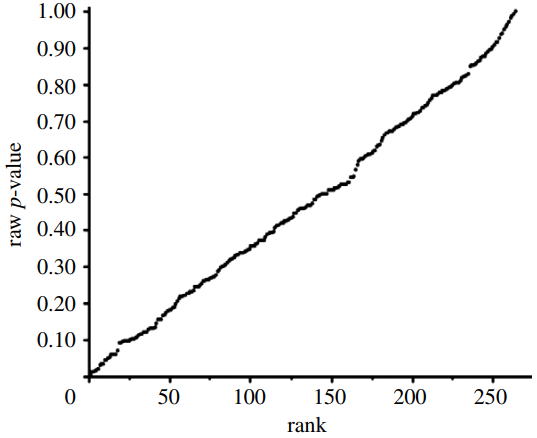

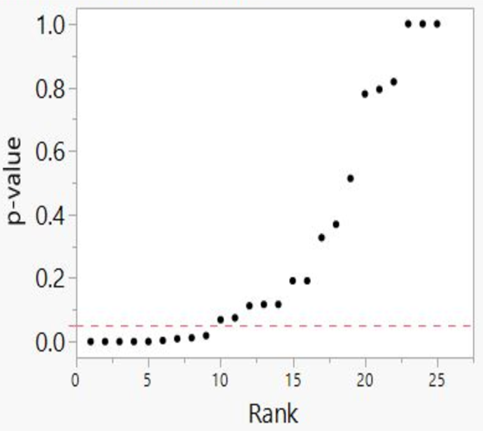

Our independent evaluation of the effect of COVID-19 quarantine (stay-at-home) orders on mortality—the Herby et al. (2022) meta-analysis—is shown in Figure 1. There are 20 studies that we included in the figure. Six of the 20 studies had p-values below 0.05, while four of the studies had p-values close to 1.00. Ten studies fell roughly on a 45-degree line, implying random results.

This data set comprises mostly null associations (14), as well as five or six possible non-null associations (1-in-20 of these could be a chance, i.e., false positive, association). While not perfect, this data set is a closer fit to a sample distribution for a true null association between two variables. Our interpretation of the p-value plot is that COVID-19 quarantine (stay-at-home) orders are not supported for reducing mortality, consistent with Herby et al.’s claim.

3.2 Psychological effects (mental health symptoms)

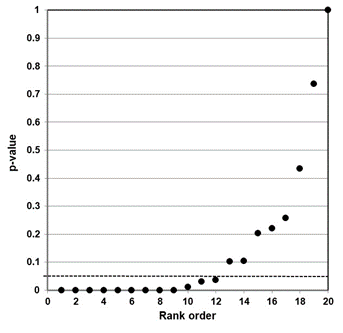

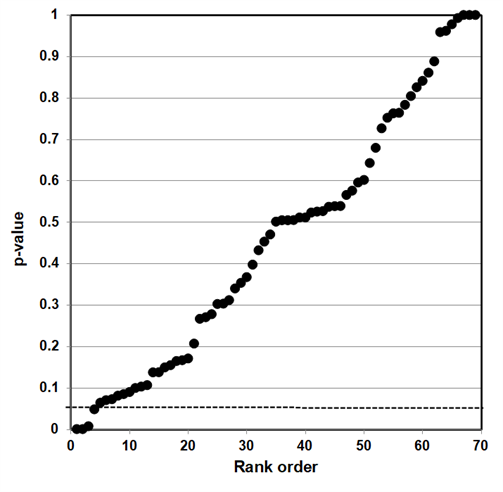

Our independent evaluation of the effect of COVID-19 quarantine (stay-at-home) orders on mental health symptoms—the Prati & Mancini (2021) meta-analysis—is shown in Figure 2. Figure 2 data present as a bilinear shape showing a two-component mixture. This data set clearly does not represent a distinct sample distribution for either true null associations or true effects between two variables. Our interpretation of the p-value plot is that COVID-19 quarantine (stay-at-home) orders have an ambiguous (uncertain) effect on mental health symptoms. However, as discussed below, there are questions about their research claim.

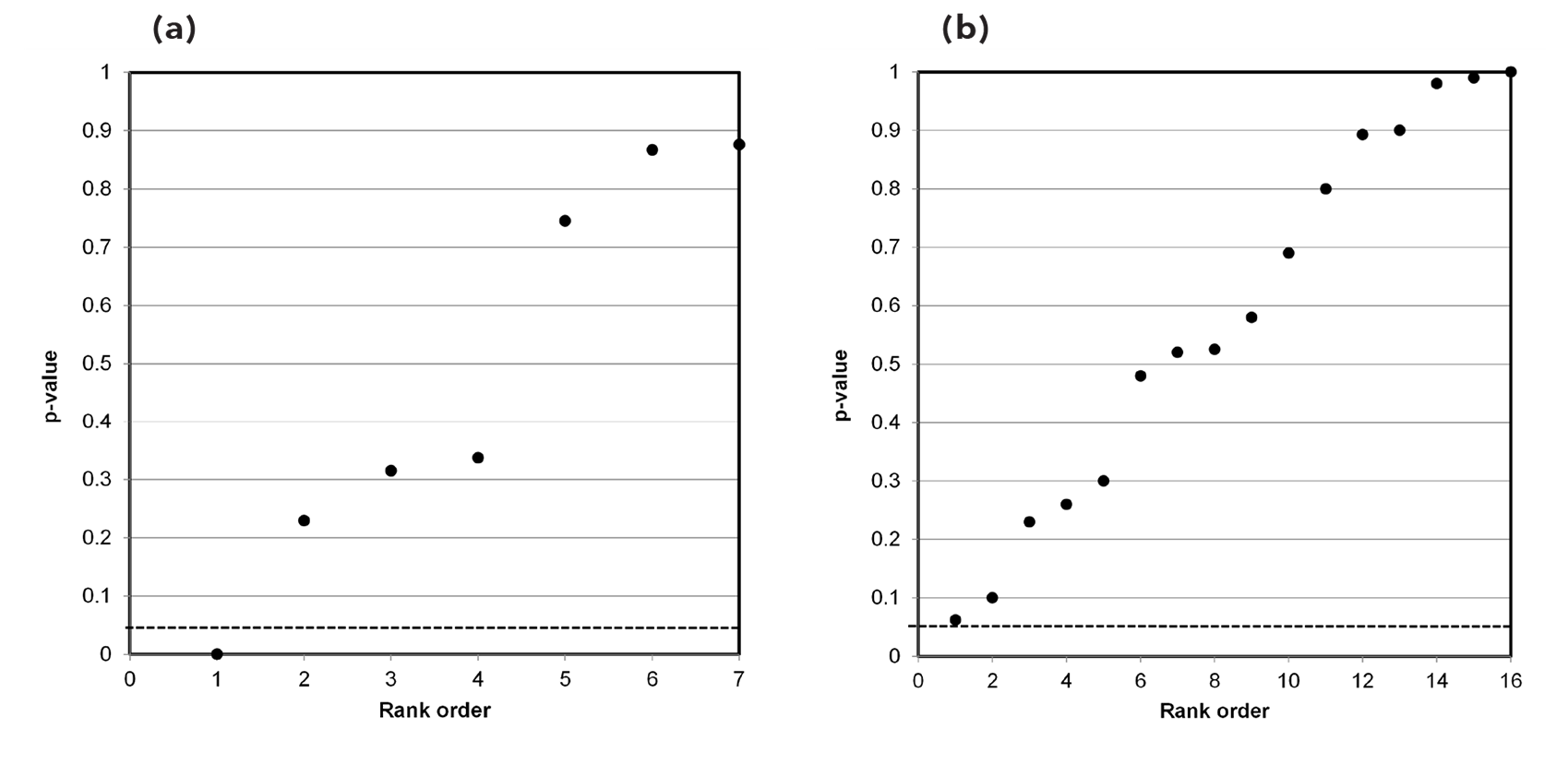

Figure 1. P-value plot (p-value versus rank) for Herby et al. (2022) meta-analysis of the effect of COVID-19 quarantine (stay-at-home) orders implemented in 2020 on mortality. Symbols (circles) are p-values ordered from smallest to largest (n=20).

Figure 2. P-value plot (p-value versus rank) for Prati & Mancini (2021) meta-analysis of the effect of COVID-19 quarantine (stay-at-home) orders on mental health symptoms. Symbols (circles) are p-values ordered from smallest to largest (n=20).

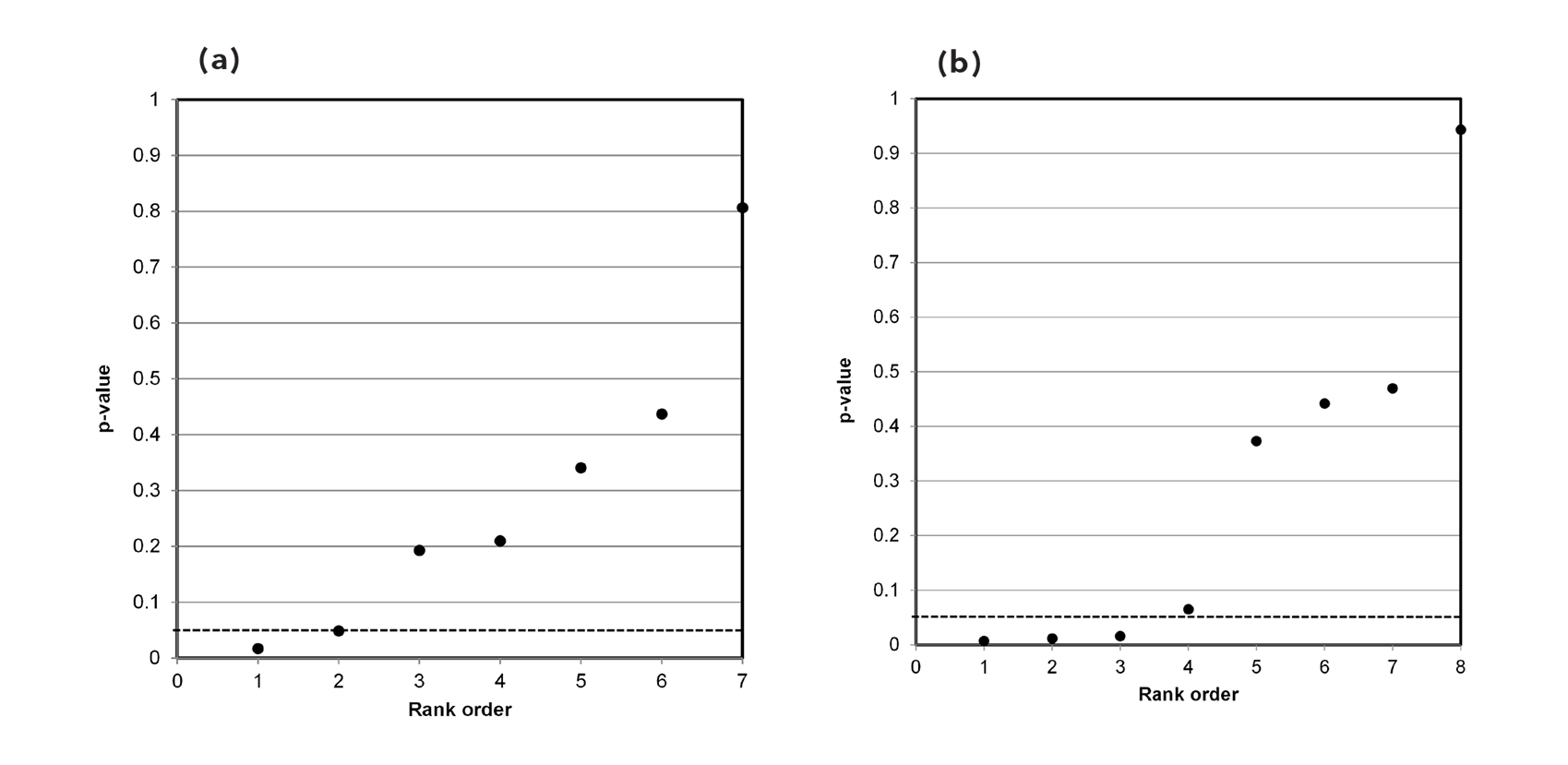

3.3 Incidents of domestic violence

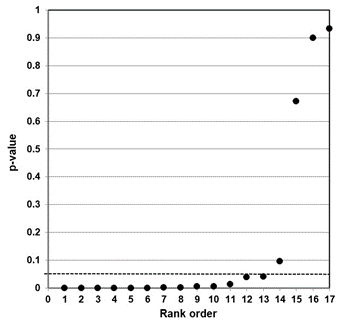

Our independent evaluation of the effect of COVID-19 quarantine (stay-at-home) orders on reported incidents of domestic violence—the Piquero et al. (2021) meta-analysis—is shown in Figure 3. Thirteen of the 17 studies had p-values less than 0.05. While not shown in the figure, eight of the p-values were small (<0.001).

This data set comprises mostly non-null associations (13), as well as four possible null associations. While not perfect, this data set is a closer fit to a sample distribution for a true alternative association between two variables. Our interpretation of the p-value plot is that COVID-19 quarantine (stay-at-home) orders have a negative effect (increase) in reported incidents of domestic violence.

Figure 3. P-value plot (p-value versus rank) for Piquero et al. (2021) meta-analysis of the effect of COVID-19 quarantine (stay-at-home) orders on reported incidents of domestic violence. Symbols (circles) are p-values ordered from smallest to largest (n=17).

3.4 Suicidal ideation

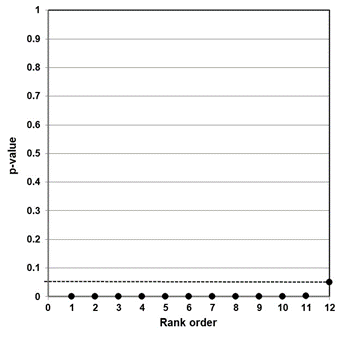

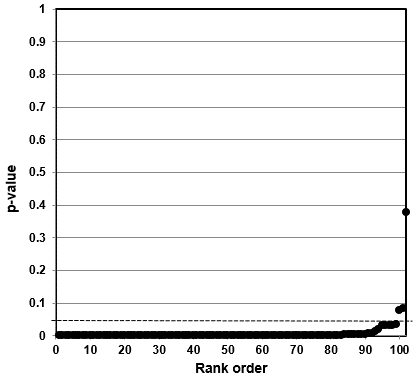

Our independent evaluation of the effect of COVID-19 quarantine (stay-at-home) orders on suicidal ideation—the Zhu et al. (2021) meta-analysis—is shown in Figure 4. The p-values for all 12 studies were less than 0.05. Ten of the 12 studies had p-values less than 0.005. While not shown in the figure, eight of the p-values were small (<0.001).

This data set presents as a distinct sample distribution for true effects between two variables. Our interpretation of the p-value plot is that COVID-19 quarantine (stay-at-home) orders have an effect on suicidal ideation (thoughts of killing yourself). However, as discussed below, there are valid questions about how the meta-analysis was formulated.

Figure 4. P-value plot (p-value versus rank) for Zhu et al. (2021) meta-analysis of the effect of COVID-19 quarantine (stay-at-home) orders on suicidal ideation (thoughts of killing yourself). Symbols (circles) are p-values ordered from smallest to largest (n=12).

4. Discussion and Implications

As stated previously, an independent evaluation of published meta-analyses on a common research question can be used to assess the reproducibility of a claim coming from that field of research. We evaluated four meta-analysis studies of COVID-19 quarantine (stay-at-home) orders implemented in 2020 and corresponding health benefits and/or harms. Our intent was to illustrate the importance of reproducibility of research claims arising from this nonpharmaceutical intervention in the context of the surge of COVID-19 papers in literature over the past few years.

4.1 Mortality

The Herby et al. meta-analysis examined the effect of COVID-19 quarantine orders on mortality. Their research claim was: “lockdowns in the spring of 2020 had little to no effect on COVID-19 mortality.” Here, they imply that the intervention (COVID-19 quarantine orders) had little or no effect on the reduction of mortality. To put their findings into perspective, Herby et al. estimated that the average lockdown in the United States (Europe) in the spring of 2020 avoided 16,000 (23,000) deaths. In contrast, they report that there are about 38,000 (72,000) flu deaths each year in the United States (Europe).93

Our evidence agrees with their claim. Our p-value plot (Figure 1) is not consistent with the expected behavior of a distinct sample distribution for a true effect between the intervention (quarantine) and the outcome (reduction in mortality). More importantly, our plot shows considerable randomness (many null associations, p-values > 0.05), supporting no consistent effect. Herby et al. further stated: “costs to society must be compared to the benefits of lockdowns, which our meta-analysis has shown are little to none.”

4.2 Psychological effects (mental health symptoms)

The Prati & Mancini meta-analysis examined the psychological effects of COVID-19 quarantine orders on the general population. Their research claim was: “lockdowns do not have uniformly detrimental effects on mental health and most people are psychologically resilient to their effects.” We evaluated a component of psychological effects—i.e., whether COVID-19 quarantine orders affect mental health symptoms (Figure 2). Figure 2 clearly exhibits a two-component mixture, implying an ambiguous (uncertain) effect on mental health symptoms. However, our evidence does not necessarily support their claim.94

Digging deep into their study reveals an interesting finding. Their study looked at a variety of psychological symptoms that differed from study to study. Although not shown here, when they examined these symptoms separately—a meta-analysis of each symptom—there was a strong signal for anxiety (p-value less than 0.0001). This is less than the Boos & Stefanski–proposed p-value action level of 0.001 for expected replicability.95 Here, the term ‘action level’ means that if a study is replicated, the replication will give a p-value less than 0.05. We note with interest that, at the height of the pandemic, news coverage of COVID-19 was constantly saying you could die of the virus. It should be no wonder that there was a strong signal for anxiety.

We also note that Prati & Mancini appear to take the absence of evidence of a negative mental health effect of COVID-19 quarantine orders in their meta-analysis as implying that it does not affect mental health. But “absence of evidence does not imply evidence of absence.”96 Just because meta-analysis failed to find an effect, it does not imply that “most people are psychologically resilient to their [lockdowns’] effects.” A more plausible and valid inference is that this statement of claim is insufficiently researched at this point.

4.3 Incidents of domestic violence

The Piquero et al. meta-analysis examined the effect of COVID-19 quarantine orders on reported incidents of domestic violence. Their research claim was: “incidents of domestic violence increased in response to stay-at-home/lockdown orders.” Our evidence suggests agreement with this claim. Our p-value plot (Figure 3) is more consistent with the expected behavior of a distinct sample distribution for a true effect between the intervention (quarantine) and the outcome (increase in incidents of domestic violence).97

We note that Figure 3 has 13 of 17 p-values less than 0.05, with eight of these less than 0.001, and only a few null association studies (4). Our evidence supports that COVID-19 quarantine orders likely increased incidents of domestic violence.

4.4 Suicidal ideation

The Zhu et al. meta-analysis examined COVID-19 quarantine orders on suicidal ideation (thoughts of killing yourself). Their research claim was: “estimated prevalence of suicidal ideation within 12 months [during COVID] was … significantly higher than a world Mental Health Survey conducted by the World Health Organization (WHO) in 21 countries [conducted 2001−2007].”98

The p-value plot (Figure 4) strongly supports their claim. The plot is very consistent with the expected behavior of a distinct sample distribution for a true effect between the intervention (quarantine) and the outcome (increased prevalence of suicidal ideation). However, digging deep into their study reveals a problem in the formulation of their meta-analysis.

In strong science, a research question is measured against a control. Zhu et al. effectively ignore controls in their meta-analysis. They compared the incidence of suicidal ideation to a zero standard and not to control groups. The issue is that a pre-COVID-19 (i.e., background) suicidal ideation signal is ignored in their meta-analysis.

Indeed, in their Table 1 they present results from the base papers where data for control groups is available. For example, the Seifert et al. (2021) base paper notes suicidal ideation presented in 123 of 374 patients in the psychiatric emergency department of Hannover Medical School during the pandemic, and in 141 of 476 in the same department before the pandemic—32.9% versus 29.6%. The difference is not significant.99

Comparing their Table 1 data set with their Figure 1 forest plot, Zhu et al. only carried 32.9% into their meta-analysis for the Seifert et al. (2021) base paper; in effect, they ignored the control data. All data-set entries in their Figure 1 suffer from this problem. Zhu et al. only considered pandemic incidence in their meta-analysis; they ignored any control data. This approach calls their claims into serious question. We conclude that the Zhu et al. results are unreliable.

4.5 Implications

COVID-19 quarantine orders were implemented on the notion that this nonpharmaceutical intervention would delay and flatten the epidemic peak and benefit public health outcomes overall. P-value plots for three of four meta-analyses that we evaluated do not support public health benefits of this form of nonpharmaceutical intervention. The fourth meta-analysis study is unreliable.

One meta-analysis that we evaluated—Herby et al. (2022)—questions the benefits of this form of intervention for preventing mortality. Our p-value plot supports their finding that COVID-19 quarantine orders had little or no effect on the reduction of mortality.

A second meta-analysis—Prati & Mancini (2021)—offers conflicting evidence. Our p-value plot clearly exhibits a two-component mixture implying an ambiguous (uncertain) effect between COVID-19 quarantine orders and mental health symptoms. However, data for a component of mental health symptoms (anxiety) suggests a negative effect from COVID-19 quarantine orders. Further, Prati & Mancini (2021) lack evidence to claim that “most people are psychologically resilient to their [lockdowns’] effects.”

Our evaluation of the Piquero et al. (2021) meta-analysis—assessment of domestic violence incidents—supports a true effect between the intervention (quarantine) and the outcome (increase in incidents of domestic violence), with additional confirmatory research needed. Finally, the meta-analysis of Zhu et al. (2021) on suicidal ideation (thoughts of killing yourself) is wrongly formulated. Their results should be disregarded until or unless controls are properly included in their analysis.

Stepping back and looking at the overall findings of these studies, the claim that COVID-19 quarantine orders reduce mortality is unproven.

Also, the risks (negative public health consequences) of this intervention cannot be ruled out for mental health symptoms and incidents of domestic violence. Given that the base studies and the meta-analyses themselves were, for the most part, rapidly conducted and published, we acknowledge that confirmatory research for some of these outcomes is needed.

Our interpretations of COVID-19 quarantine benefits/risks are consistent, for example, with the research of James (2020) and conventional wisdom on disease mitigation measures used for the control of pandemic influenza.100 James holds that is it unclear whether there were benefits from this intervention relative to less restrictive measures aimed at controlling “risky” personal interactions (e.g., mass gatherings and large clusters of individuals in enclosed spaces).

James (2020) also noted numerous economic and public health harms in the United States as of May 1, 2020:

- Over 20 million people newly unemployed.

- State-wide school closures across the country.

- Increased spouse and child abuse reports.

- Increased divorces.

- Increased backlog of patient needs for mental health services, cancer treatments, dialysis treatments, and everyday visits for routine care.

- Increased acute emergency services.101

This is consistent with interim quantitative data as of September 2020 presented by the American Institute of Economic Research (2020) on the cost and negative public health implications of pandemic restrictions in the United States and around the world.102

Public Health Interventions: Masks

1. Introduction and Background

The World Health Organization (WHO) declared COVID-19 a pandemic on March 11, 2020.103 Early in the pandemic, the U.S. Centers for Disease Control and Prevention (CDC) recommended that patients in health-care settings under investigation for symptoms of suspected COVID-19 infection should wear a medical mask as soon as they were identified.104 On April 30, 2020, the CDC recommended that all people wear a mask outside of their home.105