Preface by Peter Wood

The study you have before you is an examination of the use and abuse of statistics in the sciences. Its natural audience is members of the scientific community who use statistics in their professional research. We hope, however, to reach a broader audience of intelligent readers who recognize the importance to our society of maintaining integrity in the sciences.

Statistics, of course, is not an inviting topic for most people. If we had set out with the purpose of finding a topic less likely to attract broad public attention, a study of statistical methods might well have been the first choice. It would have come in ahead of a treatise on trilobites or a rumination on rust. I know that because I have before me popular books on trilobites and rust: copies of Riccardo Levi-Setti’s Trilobites and Jonathan Waldman’s Rust: The Longest War on my bookshelf. Both books are, in fact, fascinating for the non-specialist reader.

Efforts to interest general readers in statistics are not rare, though it is hard to think of many successful examples. Perhaps the most successful was Darrell Huff’s 1954 semi-classic, How to Lie with Statistics, which is still in print and has sold more than 1.5 million copies in English. That success was not entirely due to a desire on the part of readers to sharpen their mendacity. Huff’s short introduction to common statistical errors became a widely assigned textbook in introductory statistics courses.

The challenge for the National Association of Scholars in putting together this report was to address in a serious way the audience of statistically literate scientists while also reaching out to readers who might quail at the mention of p-values and the appearance of sentences which include symbolic statements such as defining “statistical significance as p < .01 rather than as p < .05.”

This preface is intended mainly for those general readers. It explains why the topic is important and it includes no further mention of p-values.

Disinterested Inquiry and Its Opponents

The National Association of Scholars (NAS) has long been interested in the politicization of science. We have also long been interested in the search for truth—but mainly as it pertains to the humanities and social sciences. The irreproducibility crisis brings together our two long-time interests, because the inability of science to discern truth properly and its politicization go hand in hand.

The NAS was founded in 1987 to defend the vigorous liberal arts tradition of disciplined intellectual inquiry. The need for such a defense had become increasingly apparent in the previous decade and is benchmarked by the publication of Allan Bloom’s The Closing of the American Mind in January 1987. The founding of the NAS and the publication of Bloom’s book were coincident but unrelated except that both were responses to a deep shift in the temperament of American higher education. An older ideal of disinterested pursuit of truth was giving way to views that there was no such thing. All academic inquiry, according to this new view, served someone’s political interests, and “truth” itself had to be counted as a questionable concept.

The new, alternative view, was that college and universities should be places where fresh ideas untrammeled by hidden connections to the established structures of power in American society should have the chance to develop themselves. In practice this meant a hearty welcome to neo- Marxism, radical feminism, historicism, post-colonialism, deconstructionism, post-modernism, liberation theology, and a host of other ideologies. The common feature of these ideologies was their comprehensive hostility to the core traditions of the academy. Some of these doctrines have now faded from the scene, but the basic message—out with disinterested inquiry, in with leftist political nostrums—took hold and has become higher education’s new orthodoxy.

To some extent the natural sciences held themselves exempt from the epistemological and social revolution that was tearing the humanities (and the social sciences) apart. Most academic scientists believed that their disciplines were immune from the idea that facts are “socially constructed.” Physicists were disinclined to credit the claim that there could be a feminist, black, or gay physics. Astronomers were not enthusiastic about the concept that observation is inevitably a reflex of the power of the socially privileged.

The Pre-History of This Report

The report’s authors, David Randall and Christopher Welser, are gentle about the intertwining of the irreproducibility crisis, politicized groupthink among scientists, and advocacy-driven science. But the NAS wishes to emphasize how important the tie is between the purely scientific irreproducibility crisis and its political effects. Sloppy procedures don’t just allow for sloppy science. They allow, as opportunistic infections, politicized groupthink and advocacy-driven science. Above all, they allow for progressive skews and inhibitions on scientific research, especially in ideologically driven fields such as climate science, radiation biology, and social psychology (marriage law). Not all irreproducible research is progressive advocacy; not all progressive advocacy is irreproducible; but the intersection between the two is very large. The intersection between the two is a map of much that is wrong with modern science.

When the progressive left’s “long march through the university” began, the natural sciences believed they would be exempt, but the complacency of the scientific community was not total. Some scientists had already run into obstacles arising from the politicization of higher education. And soon after its founding, the NAS was drawn into this emerging debate. In the second issue of NAS’s journal, Academic Questions, published in Spring 1988, NAS ran two articles criticizing a report by the American Physical Society, that took strong exception to the quality of science in that report. One of the articles, written by Frederick Seitz, who was the former president of both the American Physical Society and the National Academy of Sciences, accused the Council of the American Physical Society of issuing a statement based on the report that abandoned “all pretense to being based on scientific factors.” The report and the advocacy based on it (dealing with missile defense) were, in Seitz’s view, “political” in nature.

I cite this long-ago incident as part of the pedigree of this report, The Irreproducibility Crisis. In the years following the Seitz article, NAS took up a great variety of “academic questions.” The integrity of the sciences was seldom treated as among the most pressing matters, but it was regularly examined, and NAS’s apprehensions about misdirection in the sciences were growing. In 1992, Paul Gross contributed a keynote article, “On the Gendering of Science.” In 1993, Irving M. Klotz wrote on “‘Misconduct’ in Science,” taking issue with what he saw as an overly expansive definition of misconduct promoted by the National Academy of Sciences. Paul Gross and Norman Levitt presented a broader set of concerns in 1994, in “The Natural Sciences: Trouble Ahead? Yes.” Later that year, Albert S. Braverman and Brian Anziska wrote on “Challenges to Science and Authority in Contemporary Medical Education.” That same year NAS held a national conference on the state of the sciences. In 1995, NAS published a symposium based on the conference, “What Do the Natural Sciences Know and How Do They Know It?”

For more than a decade NAS published a newsletter on the politicization of the sciences, and we have continued a small stream of articles on the topic, such as “Could Science Leave the University?” (2011) and “Short-Circuiting Peer-Review in Climate Science” (2014). When the American Association of University Professors published a brief report assailing the Trump administration as “anti-science,” (“National Security, the Assault on Science, and Academic Freedom,” December 2017), NAS responded with a three-part series, “Does Trump Threaten Science?” (To be clear, we are a non-partisan organization, interested in promoting open inquiry, not in advancing any political agenda.)

The Irreproducibility Crisis builds on this history of concern over the threats to scientific integrity, but it is also a departure. In this case, we are calling out a particular class of errors in contemporary science. Those errors are sometimes connected to the politicization of the sciences and scientific misconduct, but sometimes not. The reforms we call for would make for better science in the sense of limiting needless errors, but those reforms would also narrow the opportunities for sloppy political advocacy and damaging government edicts.

Threat Assessment

Over the thirty-one year span of NAS’s work, we have noted both the triumphs of contemporary science—and they are many—but also rising threats. Some of these threats are political or ideological. Some are, for lack of a better word, epistemic. The former include efforts to enforce an artificial “consensus” on various fields of inquiry, such as climate science. The ideological threats also include the growing insistence that academic positions in the sciences be filled with candidates chosen partly on the basis of race and sex. These ideological impositions, however, are not the topic of The Irreproducibility Crisis.

This report deals with an epistemic problem, which is most visible in the large numbers of articles in reputable peer-reviewed journals in the sciences that have turned out to be invalid or highly questionable. Findings from experimental work or observational studies turn out, time and again, to be irreproducible. The high rates of irreproducibility are an ongoing scandal that rightly has upset a large portion of the scientific community. Estimates of what percentage of published articles present irreproducible results vary by discipline. Randall and Welser cite various studies, some of them truly alarming. A 2012 study, for example, aimed at reproducing the results of 53 landmark studies in hematology and oncology, but succeeded in replicating only six (11 percent) of those studies.

Irreproducibility can stem from several causes, chief among them fraud and incompetence. The two are not always easily distinguished, but The Irreproducibility Crisis deals mainly with the kinds of incompetence that mar the analysis of data and that lead to insupportable conclusions. Fraud, however, is also a factor to be weighed.

Outright Fraud

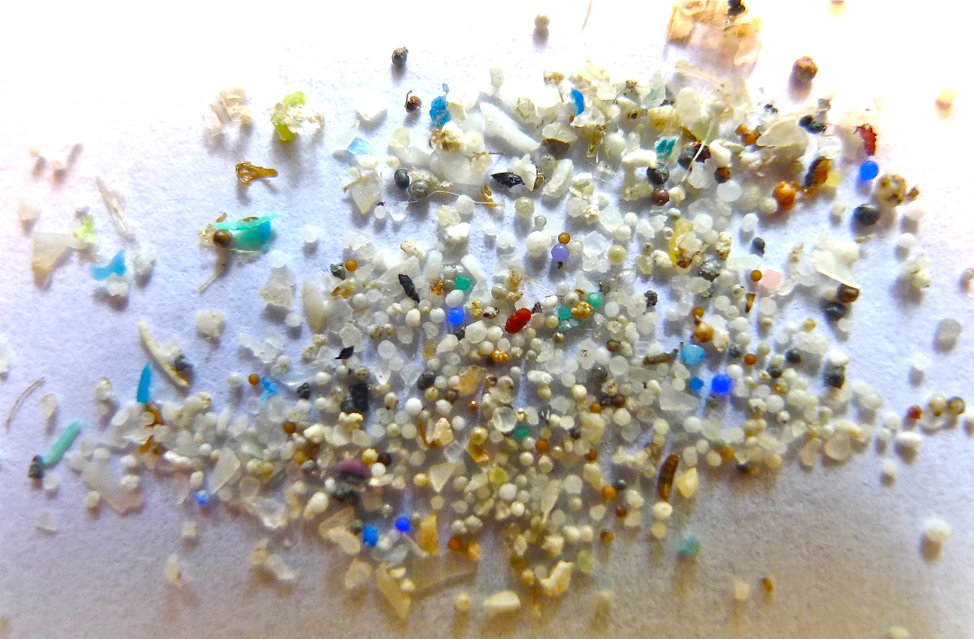

Actual fraud on the part of researchers appears to be a growing problem. Why do scientists take the risk of making things up when, over the long term, it is almost certain that the fraud will be detected? No doubt in some cases the researchers are engaged in wishful thinking. Even if their research does not support their hypothesis, they imagine the hypothesis will eventually be vindicated, and publishing a fictitious claim now will help sustain the research long enough to vindicate the original idea. Perhaps that is what happened in the recent notorious case of postdoc Oona Lönnstedt at Uppsala University. She and her supervisor, Peter Eklöv, published a paper in Science in June 2016, warning of the dangers of microplastic particles in the ocean. The microplastics, they reported, endangered fish. It turns out that Lönnstedt never performed the research that she and Eklöv reported.

The initial June 2016 article achieved worldwide attention and was heralded as the revelation of a previously unrecognized environmental catastrophe. When doubts about the research integrity began to emerge, Uppsala University investigated and found no evidence of misconduct. Critics kept pressing and the University responded with a second investigation that concluded in April 2017 and found both Lönnstedt and Eklöv guilty of misconduct. The university then appointed a new Board for Investigation of Misconduct in Research. In December 2017 the Board announced its findings: Lönnstedt had intentionally fabricated her data and Eklöv had failed to check that she had actually carried out her research as described.

The microplastics case illustrates intentional scientific fraud. Lönnstedt’s motivations remain unknown, but the supposed findings reported in the Science article plainly turned her into an environmentalist celebrity. Fear of the supposedly dire consequences of microplastic pollution had already led to the U.S. banning plastic microbeads in personal care products. The UK was holding a parliamentary hearing on the same topic when the Science article appeared. Microplastic pollution was becoming a popular cause despite thin evidence that the particles were dangerous. Lönnstedt’s contribution was to supply the evidence.

In this case, the fraud was suspected early on and the whistleblowers stuck with their accusations long enough to get past the early dismissals of their concerns. That kind of self-correction in the sciences is highly welcome but hardly reliable. Sometimes highly questionable declarations made in the name of science remain un-retracted and ostensibly unrefuted despite strong evidence against them. For example, Edward Calabrese in the Winter 2017 issue of Academic Questions recounts the knowing deception by Nobel physicist Hermann J. Muller, who promoted what is called the “linear no-threshold” (LNT) dose response model for radiation’s harmful effects. That meant, in layman’s terms, that radiation at any level is dangerous. Muller had seen convincing evidence that the LNT model was false—that there are indeed thresholds below which radiation is not dangerous—but he used his 1946 Nobel Prize Lecture to insist that the LNT model be adopted. Calabrese writes that Muller was “deliberately deceptive.”

It was a consequential deception. In 1956 the National Academy of Sciences Committees on Biological Effects of Atomic Radiation (BEAR) recommended that the U.S. adopt the LNT standard. BEAR, like Muller, misrepresented the research record, apparently on the grounds that the public needed a simple heuristic and the actual, more complicated reality would only confuse people. The U.S. Government adopted the LNT standard in evaluating risks from radiation and other hazards. Calabrese and others who have pointed out the scientific fraud on which this regulatory apparatus rests have been brushed aside and the journal Science, which published the BEAR report, has declined to review that decision.

Which is to say that if a deception goes deep enough or lasts long enough, the scientific establishment may simply let it lie. The more this happens, presumably the more it emboldens other researchers to gamble that they may also get away with making up data or ignoring contradictory evidence.

Renovation

Incompetence and fraud together create a borderland of confusion in the sciences. Articles in prestigious journals appear to speak with authority on matters that only a small number of readers can assess critically. Non-specialists generally are left to trust that what purports to be a contribution to human knowledge has been scrutinized by capable people and found trustworthy. Only we now know that a very significant percentage of such reports are not to be trusted. What passes as “knowledge” is in fact fiction. And the existence of so many fictions in the guise of science gives further fuel to those who seek to politicize the sciences. The Lönnstedt and Muller cases exemplify not just scientific fraud, but also efforts to advance political agendas. All of the forms of intellectual decline in the sciences thus tend to converge. The politicization of science lowers standards, and lower standards invite further politicization.

The NAS wants to foster among scientists the old ethic of seeking out truth by sticking with procedures that rigorously sift and winnow what scientific experiment can say confidently from what it cannot. We want science to seek out truth rather than to engage in politicized advocacy. We want science to do this as the rule and not as the exception. This is why we call for these systemic reforms.

The NAS also wants to banish the calumny of progressive advocates, that anyone who criticizes their political agenda is ‘anti-science.’ This was always hollow rhetoric, but the irreproducibility crisis reveals that it is precisely the reverse of the situation. The progressive advocates, deeply invested in the sloppy procedures, the politicized groupthink, and the too-frequent outright fraud, are the anti-science party. The banner of good science—disinterested, seeking the truth, reproducible—is ours, not theirs.

We are willing to put this contention to the experiment. We call for all scientists to submit their science to the new standards of reproducibility—and we will gladly see what truths we learn and what falsehoods we will unlearn.

For all that, The Irreproducibility Crisis deals with only part of a larger problem. Scientists are only human and are prey to the same temptations as anyone else. To the extent that American higher education has become dominated by ideologies that scoff at traditional ethical boundaries and promote an aggressive win-at-all-costs mentality, reforming the technical and analytic side of science will go only so far towards restoring the integrity of scientific inquiry. We need a more comprehensive reform of the university that will instill in students a lifelong fidelity to the truth. This report, therefore, is just one step towards the necessary renovation of American higher education. The credibility of the natural sciences is eroding. Let’s stop that erosion and then see whether the sciences can, in turn, teach the rest of the university how to extract itself from the quicksand of political advocacy.

Executive Summary

The Nature of the Crisis

A reproducibility crisis afflicts a wide range of scientific and social-scientific disciplines, from epidemiology to social psychology. Improper research techniques, lack of accountability, disciplinary and political groupthink, and a scientific culture biased toward producing positive results together have produced a critical state of affairs. Many supposedly scientific results cannot be reproduced reliably in subsequent investigations, and offer no trustworthy insight into the way the world works.

In 2005, Dr. John Ioannidis argued, shockingly and persuasively, that most published research findings in his own field of medicine were false. Contributing factors included 1) the inherent limitations of statistical tests; 2) the use of small sample sizes; 3) reliance on small numbers of studies; 4) willingness to publish studies reporting small effects; 5) the prevalence of fishing expeditions to generate new hypotheses or explore unlikely correlations; 6) flexibility in research design; 7) intellectual prejudices and conflicts of interest; and 8) competition among researchers to produce positive results, especially in fashionable areas of research. Ioannidis demonstrated that when you accounted for all these factors, a majority of research findings in medicine—and in many other scientific fields—were probably wrong.

Ioannidis’ alarming article crystallized the scientific community’s awareness of the reproducibility crisis. Subsequent evidence confirmed that the crisis of reproducibility had compromised entire disciplines. In 2012 the biotechnology firm Amgen tried to reproduce 53 “landmark” studies in hematology and oncology, but could only replicate six. In that same year the director of the Center for Drug Evaluation and Research at the Food and Drug Administration estimated that up to three-quarters of published biomarker associations could not be replicated. A 2015 article in Science that presented the results of 100 replication studies of articles published in prominent psychological journals found that only 36% of the replication studies produced statistically significant results, compared with 97% of the original studies.

Many common forms of improper scientific practice contribute to the crisis of reproducibility. Some researchers look for correlations until they find a spurious “statistically significant” relationship. Many more have a poor understanding of statistical methodology, and thus routinely employ statistics improperly in their research. Researchers may consciously or unconsciously bias their data to produce desired outcomes, or combine data sets in such a way as to invalidate their conclusions. Researchers able to choose between multiple measures of a variable often decide to use the one which provides a statistically significant result. Apparently legitimate procedures all too easily drift across a fuzzy line into illegitimate manipulations of research techniques.

Many aspects of the professional environment in which researchers work enable these distortions of the scientific method. Uncontrolled researcher freedom makes it easy for researchers to err in all the ways described above. The fewer the constraints on their research designs, the more opportunities for them to go astray. Lack of constraints allows researchers to alter their methods midway through a study as they pursue publishable, statistically significant results. Researchers often justify midstream alteration of research procedures as “flexibility,” but in practice such flexibility frequently justifies researchers’ unwillingness to accept a negative outcome. A 2011 article estimated that providing four “degrees of researcher freedom”—four ways to shift the design of the experiment while it is in progress—can lead to a 61% false-positive rate.

The absence of openness in much scientific research poses a related problem. Researchers far too rarely share data and methodology once they complete their studies. Scientists ought to be able to check and critique one another’s work, but a great deal of research can’t be evaluated properly because researchers don’t always make their data and study protocols available to the public. Sometimes unreleased data sets simply vanish because computer files are lost or corrupted, or because no provision is made to transfer data to up-to-date systems. In these cases, other researchers lose the ability to examine the data and verify that it has been handled correctly.

Another factor contributing to the reproducibility crisis is the premium on positive results. Modern science’s professional culture prizes positive results far above negative results, and also far above attempts to reproduce earlier research. Scientists therefore steer away from replication studies, and their negative results go into the file drawer. Recent studies provide evidence that this phenomenon afflicts such diverse fields as climate science, psychology, sociology, and even dentistry.

Groupthink also inhibits attempts to check results, since replication studies can undermine comfortable beliefs. An entire academic discipline can succumb to groupthink and create a professional consensus with a strong tendency to dismiss results that question its foundations. The overwhelming political homogeneity of academics has also created a culture of groupthink that distorts academic research, since researchers may readily accept results that confirm a liberal world-view while rejecting “conservative” conclusions out of hand. Political groupthink particularly affects those fields with obvious policy implications, such as social psychology and climate science.

Just the financial consequences of the reproducibility crisis are enormous. A 2015 study estimated that researchers spent around $28 billion annually in the United States alone on irreproducible preclinical research into new drug treatments. Irreproducible research in several disciplines distorts public policy and public expenditure in areas such as public health, climate science, and marriage and family law. The gravest casualty of all is the authority that science ought to have with the public, but which it has been forfeiting through its embrace of practices that no longer serve to produce reliable knowledge.

Many researchers and interested laymen have already started to improve the practice of science. Scientists, journals, foundations, and the government have all taken concrete steps to alleviate the crisis of reproducibility. But there is still much more to do. The institutions of modern science are enormous, not all scientists accept the nature and extent of the crisis, and the public has scarcely begun to realize the crisis’s gravity. Fixing the crisis of reproducibility will require a great deal of work. A long-term solution will need to address the crisis at every level: technical competence, institutional practices, and professional culture.

The National Association of Scholars proposes the following list of 40 specific reforms that address all levels of the reproducibility crisis. These suggested reforms are not comprehensive—although we believe they are more comprehensive than any previous set of recommendations. Some of these reforms have been proposed before; others are new. Some will elicit broad assent from the scientific community; we expect others to arouse fierce disagreement. Some are meant to provoke constructive critique.

We do not expect every detail of these proposed reforms to be adopted. Yet we believe that any successful reform program must be at least as ambitious as what we present here. If not these changes, then what? We proffer this program of reform to spark an urgently needed national conversation on how precisely to solve the crisis of reproducibility.

STATISTICAL STANDARDS

- Researchers should avoid regarding the p-value as a dispositive measure of evidence for or against a particular research hypothesis.

- Researchers should adopt the best existing practice of the most rigorous sciences and define statistical significance as p < .01 rather than as p < .05.

- In reporting their results, researchers should consider replacing either-or tests of statistical significance with confidence intervals that provide a range in which a variable’s true value most likely falls.

DATA HANDLING

- Researchers should make their data available for public inspection after publication of their results.

- Researchers should experiment with born-open data—data archived in an open-access repository at the moment of its creation, and automatically time-stamped.

RESEARCH PRACTICES

- Researchers should pre-register their research protocols, filing them in advance with an appropriate scientific journal, professional organization, or government agency.

- Researchers should adopt standardized descriptions of research materials and procedures.

PEDAGOGY

- Disciplines that rely heavily upon statistics should institute rigorous programs of education that emphasize the ways researchers can misunderstand and misuse statistical concepts and techniques.

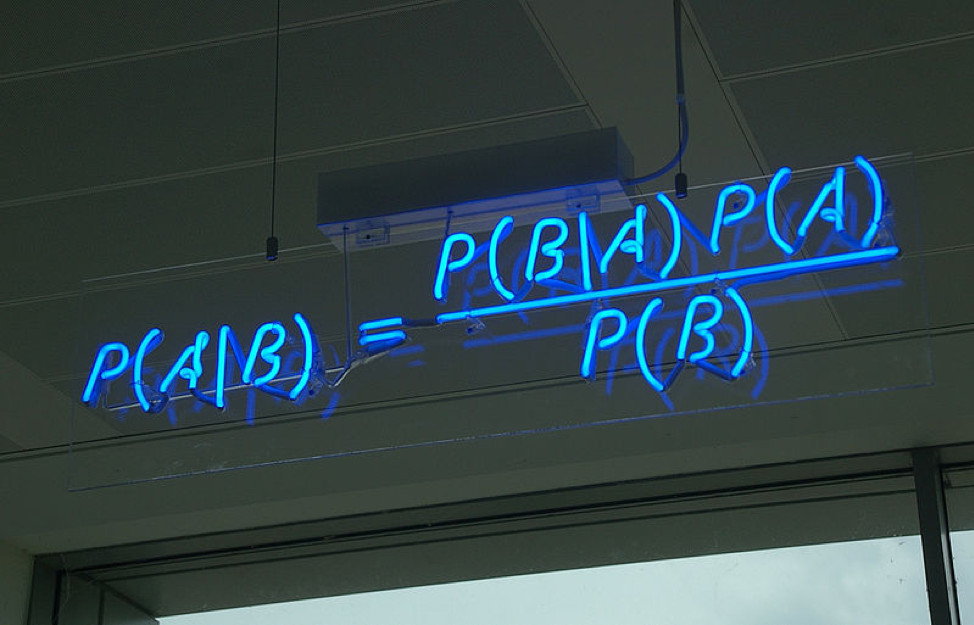

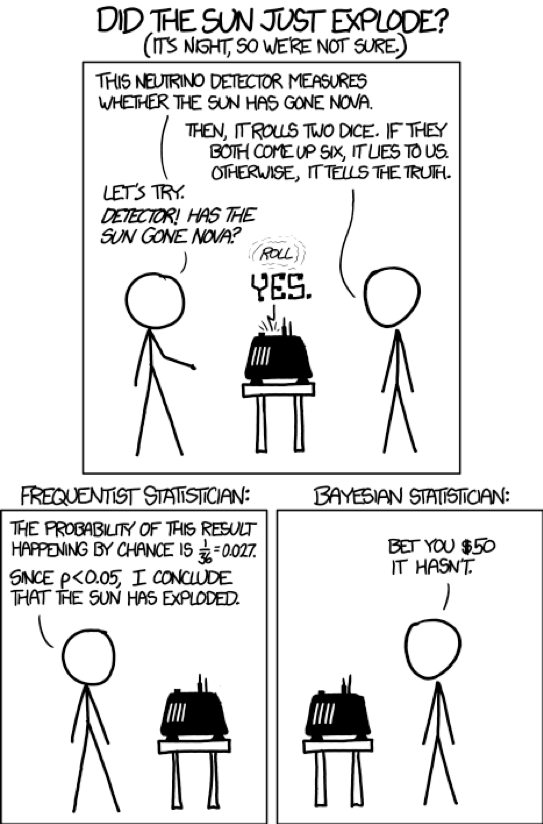

- Disciplines that rely heavily upon statistics should educate researchers in the insights provided by Bayesian approaches.

- Basic statistics should be integrated into high school and college math and science curricula, and should emphasize the limits to the certainty that statistics can provide.

UNIVERSITY POLICIES

- Universities judging applications for tenure and promotion should require adherence to best-existing-practice standards for research techniques.

- Universities should integrate survey-level statistics courses into their core curricula and distribution requirements.

PROFESSIONAL ASSOCIATIONS

- Each discipline should institutionalize regular evaluations of its intellectual openness by committees of extradisciplinary professionals.

PROFESSIONAL JOURNALS

- Professional journals should make their peer review processes transparent to outside examination.

- Some professional journals should experiment with guaranteeing publication for research with pre-registered, peer-reviewed hypotheses and procedures.

- Every discipline should establish a professional journal devoted to publishing negative results.

SCIENTIFIC INDUSTRY

- Scientific industry should advocate for practices that minimize irreproducible research, such as Transparency and Openness Promotion (TOP) guidelines for scientific journals.

- Scientific industry, in conjunction with its academic partners, should formulate standard research protocols that will promote reproducible research.

PRIVATE PHILANTHROPY

- Private philanthropy should fund scientists’ efforts to replicate earlier research.

- Private philanthropy should fund scientists who work to develop better research methods.

- Private philanthropy should fund university chairs in “reproducibility studies.”

- Private philanthropy should establish an annual prize, the Michelson-Morley Award, for the most significant negative results in various scientific fields.

- Private philanthropy should improve science journalism by funding continuing education for journalists about the scientific background to the reproducibility crisis.

GOVERNMENT FUNDING

- Government agencies should fund scientists’ efforts to replicate earlier research.

- Government agencies should fund scientists who work to develop better research methods.

- Government agencies should prioritize grant funding for researchers who pre-register their research protocols and who make their data and research protocols publicly available.

- Government granting agencies should immediately adopt the National Institutes of Health (NIH) standards for funding reproducible research.

- Government granting agencies should provide funding for programs to broaden statistical literacy in primary, secondary, and post-secondary education.

GOVERNMENT REGULATION

- Government agencies should insist that all new regulations requiring scientific justification rely solely on research that meets strict reproducibility standards.

- Government agencies should institute review commissions to determine which existing regulations are based on reproducible research, and to rescind those which are not.

FEDERAL LEGISLATION

- Congress should pass an expanded Secret Science Reform Act to prevent government agencies from making regulations based on irreproducible research.

- Congress should require government agencies to adopt strict reproducibility standards by measures that include strengthening the Information Quality Act.

- Congress should provide funding for programs to broaden statistical literacy in primary, secondary, and post-secondary education.

STATE LEGISLATION

- State legislatures should reform K-12 curricula to include courses in statistics literacy.

- State legislatures should use their funding and oversight powers to encourage public university administrations to add statistical literacy requirements.

GOVERNMENT STAFFING

- Presidents, governors, legislative committees, and individual legislators should employ staff trained in statistics and reproducible research techniques to advise them on scientific issues.

JUDICIARY REFORMS

- Federal and state courts should adopt a standard approach, which explicitly accounts for the crisis of reproducibility, for the use of science and social science in judicial decision-making.

- Federal and state courts should adopt a standard approach to overturning precedents based on irreproducible science and social science.

- A commission of judges should recommend that law schools institute a required course on science and statistics as they pertain to the law.

- A commission of judges should recommend that each state incorporate a science and statistics course into its continuing legal education requirements for attorneys and judges.

Introduction

Brian Wansink’s Disastrous Blog Post

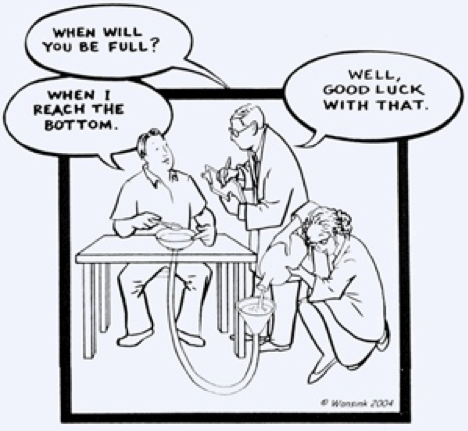

In November 2016, Brian Wansink got himself into trouble.1 Wansink, the head of Cornell University’s Food and Brand Lab and a professor at the Cornell School of Business, has spent more than twenty-five years studying “eating behavior”—the social and psychological factors that affect how people eat. He’s become famous for his research on the psychology of “mindless eating.” Wansink argues that science shows we’ll eat less on smaller dinner plates,2 and pour more liquid into short, wide glasses than tall, narrow ones.3 In August 2016 he appeared on ABC News to claim that people eat less when they’re told they’ve been served a double portion.4 In March 2017, he came onto Rachael Ray’s show to tell the audience that repainting your kitchen in a different color might help you lose weight.5

But Wansink garnered a different kind of fame when, giving advice to Ph.D. candidates on his Healthier and Happier blog, he described how he’d gotten a new graduate student researching food psychology to be more productive:

When she [the graduate student] arrived, I gave her a data set of a self-funded, failed study which had null results (it was a one month study in an all-you-can-eat Italian restaurant buffet where we had charged some people ½ as much as others). I said, “This cost us a lot of time and our own money to collect. There’s got to be something here we can salvage because it’s a cool (rich & unique) data set.” I had three ideas for potential Plan B, C, & D directions (since Plan A [the one-month study with null results] had failed). I told her what the analyses should be and what the tables should look like. I then asked her if she wanted to do them. … Six months after arriving, … [she] had one paper accepted, two papers with revision requests, and two others that were submitted (and were eventually accepted).6

Over the next several weeks, Wansink’s post prompted outrage among the community of internet readers who care strongly about statistics and the scientific method.7 “This is a great piece that perfectly sums up the perverse incentives that create bad science,” wrote one.8 “I sincerely hope this is satire because otherwise it is disturbing,” wrote another.9 “I have always been a big fan of your research,” wrote a third, “and reading this blog post was like a major punch in the gut.”10 And the controversy didn’t die down. As the months passed, the little storm around this apparently innocuous blog post kicked up bigger and bigger waves.

But what had Wansink done wrong? In essence, his critics accused him of abusing statistical procedures to create the illusion of successful research. And thereby hangs a cautionary tale—not just about Brian Wansink, but about the vast crisis of reproducibility in all of modern science.

The words reproducibility and replicability are often used interchangeably, as in this essay. When they are distinguished, replicability most commonly refers to whether an experiment’s results can be obtained in an independent study, by a different investigator with different data, while reproducibility refers to whether different investigators can use the same data, methods, and/or computer code to come up with the same conclusion.11 Goodman, Fanelli, and Ioannidis suggested in 2016 that scientists should not only adopt a standardized vocabulary to refer to these concepts but also further distinguish between methods reproducibility, results reproducibility, and inferential reproducibility.12 We use the phrase “crisis of reproducibility” to refer without distinction to our current predicament, where much published research cannot be replicated or reproduced.

The crisis of reproducibility isn’t just about statistics—but to understand how modern science has gone wrong, you have to understand how scientists use, and misuse, statistical methods.

How Researchers Use Statistics

Much of modern scientific and social-scientific research seeks to identify relationships between different variables that seem as if they ought to be linked. Researchers may want to know, for example, whether more time in school correlates with higher levels of income,13 whether increased carbohydrate intake tends to be associated with a greater risk of heart disease,14 or whether scores for various personality dimensions on psychometric tests help predict voting behavior.15

But it isn’t always easy for scientists to establish the existence of such relationships. The world is complicated, and even a real relationship—one that holds true for an entire population—may be difficult to observe. Schooling may generally have a positive effect on income, but some Ph.D.s will still work as baristas and some high school dropouts will become wealthy entrepreneurs. High carbohydrate intake may increase the risk of heart disease on average, but some paleo-dieters will drop dead of heart attacks at forty and some junk food addicts will live past ninety on a regime of doughnuts and French fries. Researchers want to look beneath reality’s messy surface and determine whether the relationships they’re interested in will hold true in general.

In dozens of disciplines ranging from epidemiology16 to environmental science17 to psychology18 to sociology,19 researchers try to do this by gathering data and applying hypothesis tests, also called tests of statistical significance. Many such tests exist, and researchers are expected to select the test that is most appropriate given both the relationship they wish to investigate and the data they have managed to collect.

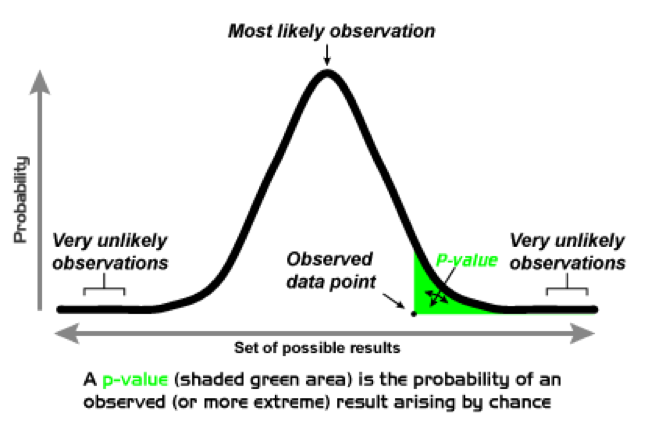

In practice, the hypothesis that forms the basis of a test of statistical significance is rarely the researcher’s original hypothesis that a relationship between two variables exists. Instead, scientists almost always test the hypothesis that no relationship exists between the relevant variables. Statisticians call this the null hypothesis. As a basis for statistical tests, the null hypothesis is usually much more convenient than the researcher’s original hypothesis because it is mathematically precise in a way that the original hypothesis typically is not. Each test of statistical significance yields a mathematical estimate of how well the data collected by the researcher supports the null hypothesis. This estimate is called a p-value.

The p-value is a number between zero and one, representing a probability based on the assumption that the null hypothesis is actually true. Given that assumption, the p-value indicates the frequency with which the researcher, if he repeated his experiment by collecting new data, would expect to obtain data less compatible with the null hypothesis than the data he actually found. A p-value of .2, for example, means that if the researcher repeated his research over and over in a world where the null hypothesis is true, only 20% of his results would be less compatible with the null hypothesis than the results he actually got.

A very low p-value means that, if the null hypothesis is true, the researcher’s data are rather extreme. It should be rare for data to be so incompatible with the null hypothesis. But perhaps the null hypothesis is not true, in which case the researcher’s data would not be so surprising. If nothing is wrong with the researcher’s procedures for data collection and analysis, then the lower the p-value, the less likely it becomes that the null hypothesis is correct.

In other words: the lower the p-value, the more reasonable it is to reject the null hypothesis, and conclude that the relationship originally hypothesized by the researcher does exist between the variables in question. Conversely, the higher the p-value, and the more typical the researcher’s data would be in a world where the null hypothesis is true, the less reasonable it is to reject the null hypothesis. Thus, the p-value provides a rough measure of the validity of the null hypothesis—and, by extension, of the researcher’s “real hypothesis” as well.

Say a scientist gathers data on schooling and income and discovers that in his sample each additional year of schooling corresponds, on average, to an extra $750 of annual income. The scientist applies the appropriate statistical test to the data, where the null hypothesis is that there is no relation between years of schooling and subsequent income, and obtains a p-value of .55. This means that more than half the time he would expect to see a correspondence at least as strong as this one even if there were no underlying relationship between time in school and income. A p-value of .01, on the other hand, would indicate a much greater probability that some relationship of the sort the scientist originally hypothesized actually exists. If there is no truth in the original hypothesis, and the null hypothesis is true instead, the sort of correspondence the scientist observed should occur only a small fraction of the time.

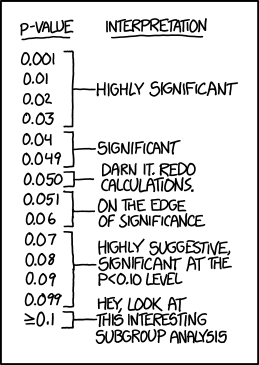

p < .05

Scientists can interpret results like these fairly easily: p = .55 means a researcher hasn’t found good evidence to support his original hypothesis, while p = .01 means the data seems to provide his original hypothesis with strong support. But what about p-values in between? What about p = .1, a 10% probability of data even less supportive of the null hypothesis occurring just by chance, without an underlying relationship?

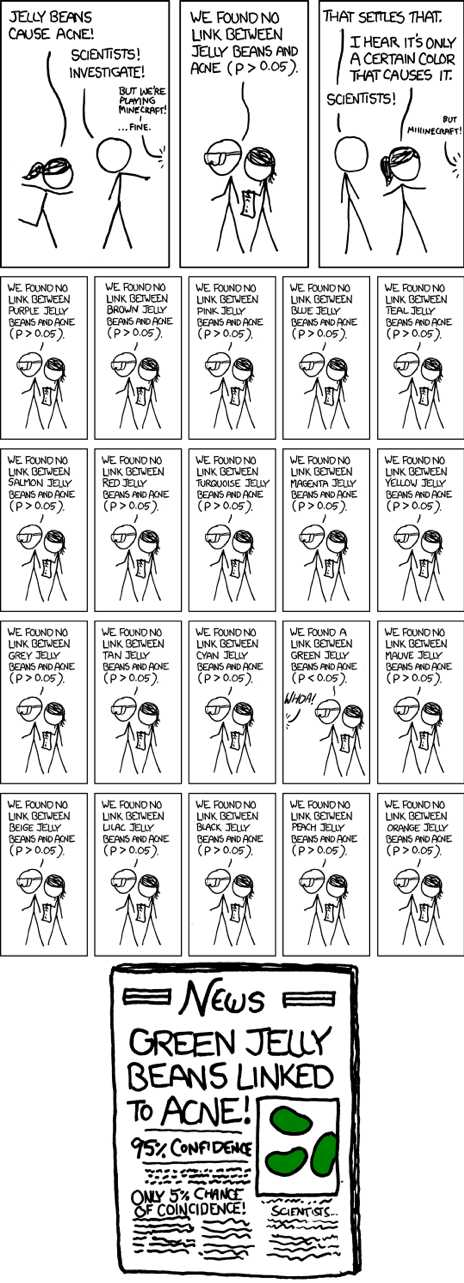

Over time, researchers in various disciplines decided to adopt clear cutoffs that would separate strong evidence against the null hypothesis from weaker evidence against the null hypothesis. The idea was to ensure that the results of statistical tests weren’t used too loosely, in support of unsubstantiated conclusions. Different disciplines settled on different cutoffs: some adopted p < .1, some p < .05, and the most rigorous adopted p < .01. Nowadays, p < .05 is the most common cutoff. Scientists in most disciplines call results that meet that criterion “statistically significant.” p < .05 provides a pretty rigorous standard, which should ensure that researchers will incorrectly reject the null hypothesis—incorrectly infer that they have found evidence for their original hypothesis—no more than 5% of the time.

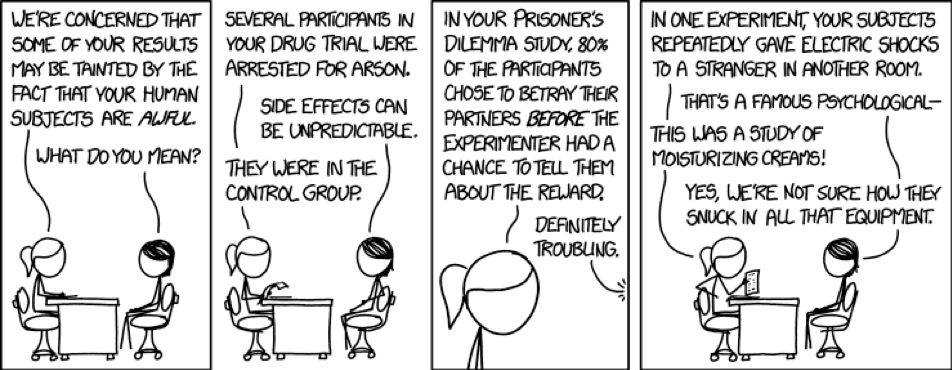

But no more than 5% of the time is still some of the time. A scientist who runs enough statistical tests can expect to get “statistically significant” results one time in twenty just by chance alone. And if a researcher produces a statistically significant result—if it meets that rigorous p < .05 standard established by professional consensus—it’s far too easy to present that result as publishable, even if it’s just a fluke, an artifact of the sheer number of statistical tests the researcher has applied to his data.

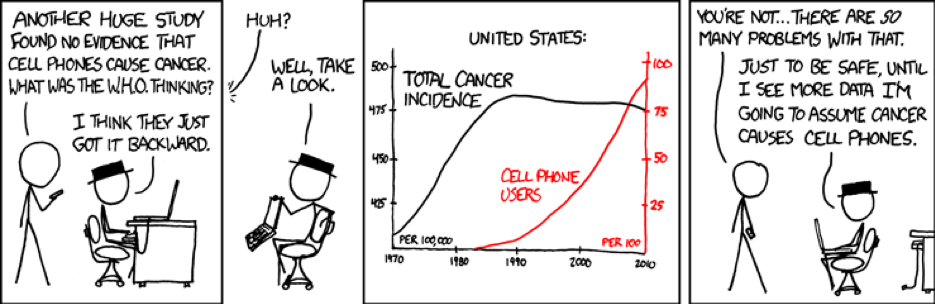

A strip from Randall Munroe’s webcomic xkcd illustrates the problem.20 A scientist who tries to correlate the incidence of acne with consumption of jelly beans of a particular color, and who runs the experiment over and over with different colors of jelly beans, will eventually get a statistically significant result. That result will almost certainly be meaningless—in Munroe’s version, the experimenters come up with p < .05 one time out of twenty, which is exactly how often a scientist would expect to see a “false positive” as a result of repeated tests. An unscrupulous researcher, or a careless one, can keep testing pairs of variables until he gets that statistically significant result that will convince people to pay attention to his research. Statisticians use the term “p-hacking” to describe the process of using repeated statistical tests to produce a result with spurious statistical significance.21 Which brings us back to Brian Wansink.

Wansink’s Dubious Science

Wansink admitted that his data provided no support in terms of statistical significance for his original research hypothesis. So he gave his data set to a graduate student and encouraged her to run more tests on the data with new research hypotheses (“Plan B, C, & D”) until she came up with statistically significant results. Then she submitted these results for publication—and they were accepted. But how many tests of statistical significance did she run, relative to the number of statistically significant results she got? And how many “backup plans” should researchers be allowed? Researchers who use the scientific method are supposed to formulate hypotheses based on existing data and then gather new data to put their hypotheses to the test. But a scientist whose original hypothesis doesn’t pan out isn’t supposed to use the data he’s gathered to come up with a new hypothesis that he can “support” using that same data. A scientist who does that is like the Texan who took pot shots at the side of his barn and then painted targets around the places where he saw the most bullet holes.22

It’s easy to be a sharpshooter that way, which is why the procedure that Wansink urged on his graduate student outraged so many commenters. As one of them wrote: “What you describe Brian does sound like p-hacking and HARKing [hypothesizing after the results are known].”23 Wansink’s procedures had hopelessly compromised his research. He had, in effect, altered his research procedures in the middle of his experiment and authorized p-hacking to obtain a publishable result.

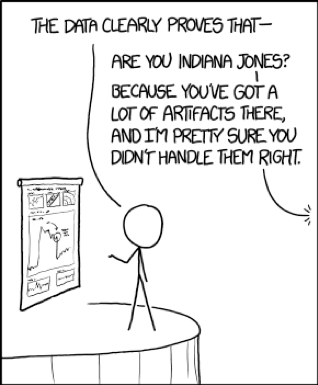

That wasn’t all Wansink had done wrong. Wansink’s inadvertent admissions led his critics to look closely at all aspects of his published research, and they soon found basic statistical mistakes throughout his work. Wansink had made more than 150 statistical errors in four papers alone, including “impossible sample sizes within and between articles, incorrectly calculated and/or reported test statistics and degrees of freedom, and a large number of impossible means and standard deviations.” He’d made further errors as he described his data and constructed the tables that presented his results.24 Put simply, a lot of Wansink’s numbers didn’t add up.

Wansink’s critics found more problems the closer they looked. In March 2017 a graduate student named Tim van der Zee calculated that critics had already made serious, unrebutted allegations about the reliability of 45 of Wansink’s publications. Collectively, these publications spanned twenty years of research, had appeared in twenty-five different journals and eight books, and— most troubling of all—had been cited more than 4,000 times.25 Wansink’s badly flawed research tainted the far larger body of scientific publications that had relied on the accuracy of his results.

Wansink seems oddly unfazed by this criticism.26 He acts as if his critics are accusing him of trivial errors, when they’re really saying that his mistakes invalidate substantial portions of his published research. Statistician Andrew Gelman,27 the director of Columbia University’s Applied Statistics Center,28 wondered on his widely-read statistics blog what it would take for Wansink to see there was a major problem.

Let me put it this way. At some point, there must be some threshold where even Brian Wansink might think that a published paper of his might be in error—by which I mean wrong, really wrong, not science, data not providing evidence for the conclusions. What I want to know is, what is this threshold? We already know that it’s not enough to have 15 or 20 comments on Wansink’s own blog slamming him for using bad methods, and that it’s not enough when a careful outside research team finds 150 errors in the papers. So what would it take? 50 negative blog comments? An outside team finding 300 errors? What about 400? Would that be enough? If the outsiders had found 400 errors in Wansink’s papers, then would he think that maybe he’d made some serious errors[?]29

Wansink and his employer, Cornell University, have not even fully addressed the first round of criticism about Wansink’s work,30 much less the graver follow-up critiques.31

But Wansink’s apparent insouciance may reflect a real feeling that he hasn’t done anything much wrong. After all, lots of scientists conduct their research in much the same way.

Wansink is Legion

Wansink acted like many of his peers. Even if most researchers aren’t as careless as Wansink, the research methods that landed Wansink in hot water are standard operating practice across a range of scientific and social-scientific disciplines. So too are many other violations of proper research methodology. In recent years a growing chorus of critics has called attention to the existence of a “reproducibility crisis”—a situation in which many scientific results are artifacts of improper research techniques, unlikely to be obtained again in any subsequent investigation, and therefore offering no reliable insight into the way the world works.

In 2005, Dr. John Ioannidis, then a professor at the University of Ioannina Medical School in Greece, made the crisis front-page news among scientists. He argued, shockingly and persuasively, that most published research findings in his own field of biomedicine probably were false. Ioannidis’ argument applied to everything from epidemiology to molecular biology to clinical drug trials.32 Ioannidis began with the known risk of a false positive any time researchers employed a test of statistical significance; he then enumerated a series of additional factors that tended to increase that risk. These included 1) the use of small sample sizes;33 2) a willingness to publish studies reporting small effects; 3) reliance on small numbers of studies; 4) the prevalence of fishing expeditions to generate new hypotheses or explore unlikely correlations;34 5) flexibility in research design; 6) intellectual prejudices and conflicts of interest; and 7) competition among researchers to produce positive results, especially in fashionable areas of research. Ioannidis demonstrated that when you accounted for all the factors that compromise modern research, a majority of new research findings in biomedicine—and in many other scientific fields—were probably wrong.

Ioannidis accompanied his first article, which provided theoretical arguments for the existence of a reproducibility crisis, with a second article that provided convincing evidence of its reality. Ioannidis compared 49 highly cited articles in clinical research to later studies on the same subjects. 45 of these articles had claimed an effective intervention, but “7 (16%) were contradicted by subsequent studies, 7 others (16%) had found effects that were stronger than those of subsequent studies, 20 (44%) were reproduced, and 11 (24%) remained largely unchallenged.” In other words, subsequent investigations provided support for fewer than half of these influential publications.35 A 2014 article co-authored by Ioannidis on 37 reanalyses of data from randomized clinical trials also found, with laconic understatement, that 13 of the reanalyses (35%) “led to interpretations different from that of the original article.”36 Perhaps Ioannidis had put it too strongly back in 2005 when he wrote that a majority of published research findings might be false. In medicine, the proportion may be more like one third. But that number would still be far too high—especially given the huge and expanding costs of medical research—and it still suggests the crisis is real.

The Scope of the Crisis

Ioannidis’ alarming papers crystallized the scientific community’s awareness of the reproducibility crisis— and not just among scientists conducting medical research. Ioannidis said that his arguments probably applied to “many current scientific fields.” Did they? To the same extent? If so many findings from clinical trials didn’t reproduce, what did that suggest for less rigorous disciplines, such as psychology, sociology, or economics?

Scientists scrutinizing their own fields soon discovered that many widely reported results didn’t replicate.37 In the field of psychology, researchers’ reexamination of “power posing”—stand more confidently and you will be more successful—suggested that the original result had been a false positive.38 In sociology, reexamination brought to light major statistical flaws in a study that claimed that beautiful people have more daughters.39 Andrew Gelman judged that a study of the economic effects of climate change contained so many errors that “the whole analysis [is] close to useless as it stands.”40

Some of the research that failed to reproduce had been widely touted in the media. “Stereotype threat” as an explanation for poor academic performance? Didn’t reproduce.41 “Social priming,” which argues that unnoticed stimuli can significantly change behavior? Didn’t reproduce that well,42 and one noted researcher in the field was an outright fraud.43 Tests of implicit bias as predictors of discriminatory behavior? The methodology turned out to be dubious,44 and the test of implicit bias may have been biased itself.45 Oxytocin (and therefore hugs, which stimulate oxytocin production) making people more trusting? A scientist conducting a series of oxytocin experiments came to believe that he had produced false positives—but he had trouble publishing his new findings.46

Deep-rooted “perceptual” racial bias? The argument depended on several research reports all producing positive results, and a statistical analysis revealed that the probability that such a series of experiments would all yield positive results was extremely low, even if the effects in question were real.

The probability that five studies like these would all be uniformly successful is ... 0.070; and the low value suggests that the reported degree of success is unlikely to be replicated by future studies with the same sample sizes and design. Indeed, the probability is low enough that scientists should doubt the validity of the experimental results and the theoretical ideas presented.47

Not every famous study failed to reproduce. Scholars have criticized the Milgram Experiment (1963)48—in which Stanley Milgram induced large numbers of study participants to give electric shocks (they believed) to unseen “experimental subjects,” up to the point of torture and death— for both shoddy research techniques and data manipulation.49 Yet the experiment substantially reproduced twice, in 2009 and 2015.50 The Milgram Experiment seemed too amazing to be true, and it may have been conducted sloppily the first time around—but replication provided significant confirmation. The crisis of reproducibility doesn’t mean that all recent research findings are wrong—just a large number of them.51

Recent evidence suggests that the crisis of reproducibility has compromised entire disciplines. In 2012 the biotechnology firm Amgen tried to reproduce 53 “landmark” studies in hematology and oncology, but could only replicate 6 (11%).52 That same year Janet Woodcock, director of the Center for Drug Evaluation and Research at the Food and Drug Administration, “estimated that as much as 75 per cent of published biomarker associations are not replicable.”53 A 2015 article in Science that presented the results of an attempt to replicate 100 articles published in three prominent psychological journals in 2008 found that only 36% of the replication studies produced statistically significant results, compared with 97% of the original studies—and on average the effects found in the replication studies were half the size of those found in the original research.54 Another study in 2015 could not reproduce a majority of a sample of 67 reputable economics articles.55 A different study in the economics field successfully reproduced a larger proportion of research, but a great deal still failed to reproduce: 61% of the replication efforts (11 out of 18) showed a significant effect in the same direction as the original research, but with an average effect size reduced by one-third.56

In 2005, scientists could say that Ioannidis’ warnings needed more substantiation. But we now have a multitude of professional studies that corroborate Ioannidis. Wansink provides a particularly vivid illustration of Ioannidis’ argument.

Why does so much research fail to replicate? Bad methodology, inadequate constraints on researchers, and a professional scientific culture that creates incentives to produce new results—innovative results, trailblazing results, exciting results—have combined to create the reproducibility crisis.

Problematic Science

Flawed Statistics

The reproducibility crisis has revealed many kinds of technical problems in medical studies; and Wansink committed a large number of them in his behavioral research. Several researchers have narrowed their focus and studied the effects of p-hacking on scientific research. Megan Head’s 2015 study looked at p-values in papers across a range of disciplines and found evidence that p-hacking is “widespread throughout science.”57 However, Head and her co-authors downplayed the significance of that finding and argued that most p-hacking probably just confirmed hypotheses that were fundamentally true. A 2016 paper coauthored by Ioannidis seemed to demolish those reassurances,58 but another paper revisiting Head’s study argued that she and her co-authors overestimated the evidence for p-hacking.59 A separate paper that examined social science data found “encouragingly little evidence of false-positives or p-hacking in rigorous policy research,”60 but the qualifier “rigorous” sidesteps the question of how much policy research does not meet rigorous standards. Still, these initial results suggest that while p-hacking significantly afflicts many disciplines, it is not pervasive in any of them.

P-hacking may not be as widespread as one might fear, but it appears that many scientists who routinely use p-values and statistical significance testing misunderstand those concepts, and therefore employ them improperly in their research.61 In March 2016, the Board of Directors of the American Statistical Association issued a “Statement on Statistical Significance and p-Values” to address common misconceptions. The Statement’s six enunciated principles included the admonition that “by itself, a p-value does not provide a good measure of evidence regarding a model or hypothesis.”62

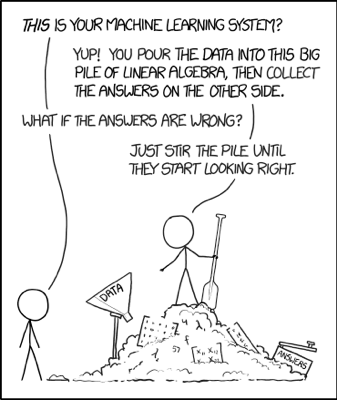

Such warnings are vital, but, as the Wansink affair illustrates, scientists also make many other sorts of errors in their use of statistical tests.63 The mathematics of advanced statistical methods are difficult, and many programs of study do not adequately train their graduates to master them.64 The development of powerful statistical software also makes it easy for scientists who don’t fully understand statistics to let their computers perform statistical tests for them. Jeff Leek, one of the authors of the popular blog Simply Statistics, put it bluntly in 2014: “The problem is not that people use p-values poorly, it is that the vast majority of data analysis is not performed by people properly trained to perform data analysis.”65

Faulty Data

Statistical analysis isn’t the only way research goes wrong. Scientists also produce supportive statistical results from recalcitrant data by fiddling with the data itself. Researchers commonly edit their data sets, often by excluding apparently bizarre cases (“outliers”) from their analyses. But in doing this they can skew their results: scientists who systematically exclude data that undermines their hypotheses bias their data to show only what they want to see.

Scientists can easily bias their data unintentionally, but some deliberately reshape their data set to produce a particular outcome. One anonymized survey of more than 2,000 psychologists found that 38% admitted to “deciding whether to exclude data after looking at the impact of doing so on the results.”67 Few researchers have published studies of this phenomenon, but anecdotal evidence suggests it is widespread. In neuroscience,

there may be (much) worse things out there, like the horror story someone (and I have reason to believe them) told me of a lab where the standard operating mode was to run a permutation analysis by iteratively excluding data points to find the most significant result. … The only difference from [sic] doing this and actually making up your data from thin air ... is that it actually uses real data – but it might as well not for all the validity we can expect from that.68

Researchers can also bias their data by ceasing to collect data at an arbitrary point, perhaps the point when the data that has already been collected finally supports their hypothesis. Conversely, a researcher whose data doesn’t support his hypothesis can decide to keep collecting additional data until it yields a more congenial result. Such practices are all too common. The survey of 2,000 psychologists noted above also found that 36% of those surveyed “stopped data collection after achieving the desired result.”69

Another sort of problem arises when scientists try to combine, or “harmonize,” multiple preexisting data sets and models in their research—while failing to account sufficiently for how such harmonization magnifies the uncertainty of their conclusions. Claudia Tebaldi and Reto Knutti concluded in 2007 that the entire field of probabilistic climate projection, which often relies on combining multiple climate models, had no verifiable relation to the actual climate, and thus no predictive value. Absent “new knowledge about the [climate] processes and a substantial increase in computational resources,” adding new climate models won’t help: “our uncertainty should not continue to decrease when the number of models increases.”70

Pervasive Pitfalls

Necessary and legitimate research procedures drift surprisingly easily across the line into illegitimate manipulations of the techniques of data collection and analysis. Researcher decisions that seem entirely innocent and justifiable can produce “junk science.” In a 2014 article in the American Scientist, Andrew Gelman and Eric Loken called attention to the many ways researchers’ decisions about how to collect, code, analyze, and present data can vitiate the value of statistical significance.71 Gelman and Loken cited several researchers who failed to find a hypothesized effect for a population as a whole, but did find the effect in certain subgroups. The researchers then formulated explanations for why they found the postulated effect among men but not women, the young but not the old, and so on. These researchers’ procedures amounted not only to p-hacking but also to the deliberate exclusion of data and hypothesizing after the fact: they were guaranteed to find significance somewhere if they examined enough subgroups.

Researchers allowed to choose between multiple measures of an imperfectly defined variable often decide to use the one which provides a statistically significant result. Gelman and Loken called attention to a study that purported to find a relationship between women’s menstrual cycles and their choice of what color shirts to wear.72 They pointed out that the researchers framed their hypothesis far too loosely:

Even though Beall and Tracy did an analysis that was consistent with their general research hypothesis—and we take them at their word that they were not conducting a “fishing expedition”—many degrees of freedom remain in their specific decisions: how strictly to set the criteria regarding the age of the women included, the hues considered as “red or shades of red,” the exact window of days to be considered high risk for conception, choices of potential interactions to examine, whether to combine or contrast results from different groups, and so on.73

Would Beall and Tracy’s hypothesis have produced statistically significant results if they had made different choices in analyzing their data? Perhaps. But a belief in the very hypothesis whose validity they were attempting to confirm could have subtly influenced at least some of their choices.

Facilitating Falsehood

The Costs of Researcher Freedom

Why do researchers get away with sloppy science? In part because, far too often, no one is watching and no one is there to stop them. We think of freedom as a good thing, but in the realm of scientific experimentation, uncontrolled researcher freedom makes it easy for scientists to err in all the ways described above.74 The fewer the constraints on scientists’ research designs, the more opportunities for malfeasance—and, as it turns out, a lot of scientists will go astray, deliberately or accidentally. For example, lack of constraints allows researchers to alter their methods midway through a study—changing hypotheses, stopping or recommencing data collection, redefining variables, “fine-tuning” statistical models—as they pursue publishable, statistically significant results. Researchers often justify midstream alteration of research procedures as flexibility or openness to new evidence75—but in practice such “flexibility” frequently subserves scientists’ unwillingness to accept a negative result.

Researchers sometimes have good reasons to alter a research design before a study is complete—for example, if a proposed drug in a clinical trial appears to be causing harm to the experimental subjects.76 (Though scientists can take even this sort of decision too hastily.77) But researchers also stop some clinical trials early on the grounds that a treatment’s benefits are already apparent and that it would be wrong to continue denying that treatment to the patients in the control group. Such truncated clinical trials pose grave ethical hazards: as one discussion put it, truncated trials “systematically overestimate treatment effects” and can violate “the ethical research requirement of scientific validity.”78 Moreover, a 2015 article in the Journal of Clinical Epidemiology indicated that “most discontinuations of clinical trials were not based on preplanned interim analyses or stopping rules.”79 In other words, most decisions to discontinue were done on the fly, without regard for the original research design. The researchers changed methodology midstream.

A now-famous 2011 article by Simmons, Nelson, and Simonsohn estimated that providing four “degrees of researcher freedom”—four ways to shift the design of an experiment while it is in progress—can lead to a 61% false-positive rate. Or, as the subtitle of the article put it, “Undisclosed Flexibility in Data Collection and Analysis Allows Presenting Anything as Significant.” Simmons and his co-authors demonstrated their point by running an experiment to see if listening to selected songs will make you, literally, younger. Their flexible research design produced data that revealed an effect of 18 months, with p = .040.80

Absence of Openness

Lack of openness also contributes to the reproducibility crisis. Investigators far too rarely share data and methodology once they complete their studies. Scientists ought to be able to check and critique one another’s work, but many studies can’t be evaluated properly because researchers don’t make their data and procedures available to the public. We’ve seen that small changes in research design can have large effects on researchers’ conclusions. Yet once scientists publish their research, those small changes vanish from the record, and leave behind only the statistically significant result. For example, the methods used in meta-analyses to harmonize cognitive measures across data sets “are rarely reported.”81 But someone reading the results of a meta-analysis can’t understand it properly without a detailed description of the harmonization methods and of the codes used in formatting the data.

Moreover, data sets often come with privacy restrictions, usually to protect personal, commercial, or medical information. Some restrictions make sense—but others don’t. Sometimes unreleased data sets simply vanish—for example, those used in environmental science.82 Data sets can disappear because of archival failures, or because of a failure to plan how to transfer data into new archival environments that will provide reliable storage and continuing access. In either case, other researchers lose the ability to examine the underlying data and verify that it has been handled properly.

In February 2017, a furor that highlighted the problem of limited scientific openness erupted in the already contentious field of climate science. John Bates, a climate scientist who had recently retired from the National Oceanic and Atmospheric Administration (NOAA), leveled a series of whistleblowing accusations at his colleagues.83 He focused on the failure by Tom Karl, the head of NOAA’s National Centers for Environmental Information, to archive properly the dataset that substantiated Karl’s 2015 claim to refute evidence of a global warming hiatus since the early 2000s.84 Karl’s article had been published shortly before the Obama administration submitted its Clean Power Plan to the 2015 Paris Climate Conference, and it had received extensive press coverage.85 Yet Karl’s failure to archive his dataset violated NOAA’s own rules—and also the guidelines of Science, the prestigious journal that had published the article. Bates’ criticisms touched off a political argument about the soundness of Karl’s procedures and conclusions, but the data’s disappearance meant that no scientist could re-examine Karl’s work. Supporters and critics of Karl had to conduct their argument entirely in terms of their personal trust in Karl’s professional reliability. Practically, the polarized nature of climate debate meant that most disputants believed or disbelieved Karl depending upon whether they believed or disbelieved his conclusions. Science should not work that way—but without the original data, scientific inquiry could not work at all.

Both scientists and the public should regard skeptically research built upon private data. Gelman responded appropriately, if sarcastically, to Wansink’s refusal to share his data on privacy grounds:

Some people seem to be upset that Wansink isn’t sharing his data. If he doesn’t want to share the data, there’s no rule that he has to, right? It seems pretty simple to me: Wansink has no obligation whatsoever to share his data, and we have no obligation to believe anything in his papers. No data, no problem, right?86

The Wages of Sin: the Professional Culture of Science

The crisis of reproducibility arises at the nuts-and-bolts level from the technical mishandling of data and statistics. Uncontrolled researcher freedom and a lack of openness enable scientific malfeasance or the innocent commission of serious methodological mistakes. At the highest level, however, the crisis of reproducibility also derives from science’s professional culture, which provides incentives to handle statistics and data sloppily and to replace rigorous research techniques with a results-oriented framework. The two most dangerous aspects of this professional culture are the premium on positive results and groupthink.

The Premium on Positive Results

Modern science’s professional culture prizes positive results, and offers relatively few rewards to those who fail to find statistically significant relationships in their data. It also esteems apparently groundbreaking results far more than attempts to replicate earlier research. Ph.D.s, grant funding, publications, promotions, lateral moves to more prestigious universities, professional esteem, public attention—they all depend upon positive results that seem to reveal something new. A scientist who tries to build his career on checking old findings or publishing negative results isn’t likely to get very far. Scientists therefore steer away from replication studies, and they often can’t help looking for ways to turn negative results into positive ones. If those ways can’t be found, the negative results go into the file drawer.

Common sense says as much to any casual observer of modern science, but a growing body of research has documented the extent of the problem. As far back as 1987, a study of the medical literature on clinical trials showed a publication bias toward positive results.87 Later studies provided further evidence that the phenomenon affects an extraordinarily wide range of fields, including the social sciences generally,88 climate science,89 psychology,90 sociology,91 research on drug education,92 research on informational technology in education,93 research on “mindfulness-based mental health interventions,”94 and even dentistry.95

Groupthink

Public knowledge about the pressure to publish is fairly widespread. The effects of groupthink on scientific research are less widely known, less obvious, and far more insidious.

Academic psychologist Irving Janis invented the concept of groupthink—“a psychological drive for consensus at any cost that suppresses dissent and appraisal of alternatives in cohesive decision making groups.”96 Ironically, groupthink afflicts academics themselves, and contributes significantly to science’s crisis of reproducibility. Groupthink inhibits attempts to reproduce results that provide evidence for what scientists want to believe, since replication studies can undermine congenial conclusions. When a result appears to confirm its professional audience’s preconceptions, no one wants to go back and double-check whether it’s correct.

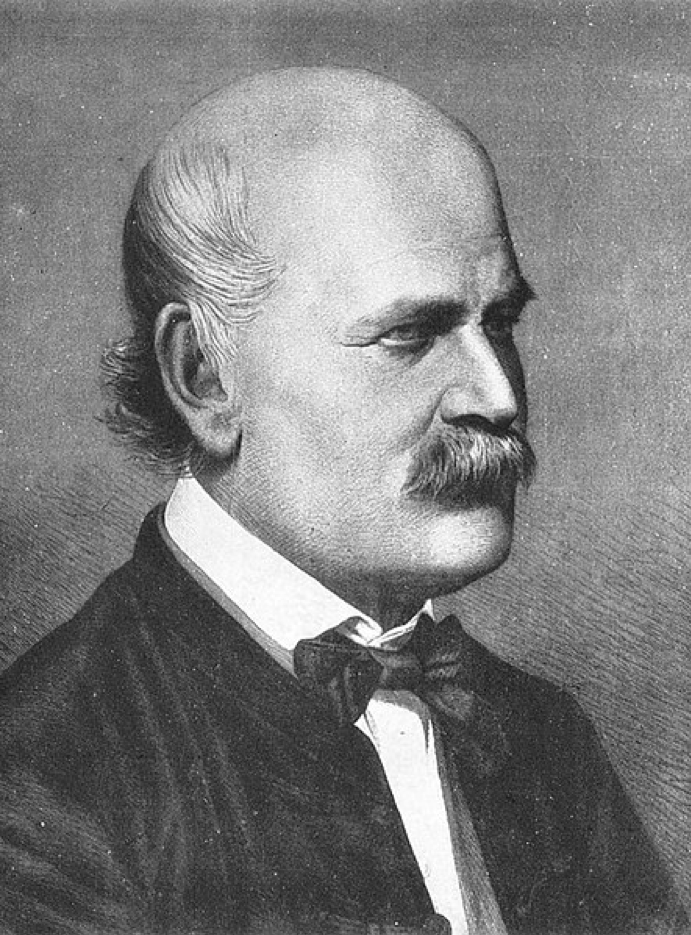

An entire academic discipline can succumb to groupthink, and create a professional consensus with a strong tendency to reinforce itself, reject results that question its foundations, and dismiss dissenters as troublemakers and cranks.97 Examples of groupthink can be found throughout the history of science. A generation of obstetricians ignored Ignaz Semmelweis’ call for them to wash their hands before delivering babies.98 Groupthink also contributed to the consensus among nutritionists that saturated fats cause heart disease, and to their refusal to consider the possibility that sugar was the real culprit.99

Some of the groupthink afflicting scientific research is political. Numerous studies have shown that the majority of academics are liberals and progressives, with relatively few moderates and scarcely any conservatives among their ranks.100 Social psychologist Jonathan Haidt made this point vividly at the Society for Personality and Social Psychology’s annual conference in 2011, when he asked the audience to indicate their political affiliations.

[Haidt began] by asking how many considered themselves politically liberal. A sea of hands appeared, and Dr. Haidt estimated that liberals made up 80 percent of the 1,000 psychologists in the ballroom. When he asked for centrists and libertarians, he spotted fewer than three dozen hands. And then, when he asked for conservatives, he counted a grand total of three.101

The Heterodox Academy, which Haidt helped found in 2015, argues that the overwhelming political homogeneity of academics has created groupthink that distorts academic research.102 Scientists readily accept results that confirm liberal political arguments,103 and frequently reject contrary results out of hand. Political groupthink particularly affects some fields with obvious political implications, such as social psychology104 and climate science.105 Climatologist Judith Curry testified before Congress in 2017 about the pervasiveness of political groupthink in her field:

The politicization of climate science has contaminated academic climate research and the institutions that support climate research, so that individual scientists and institutions have become activists and advocates for emissions reductions policies. Scientists with a perspective that is not consistent with the consensus are at best marginalized (difficult to obtain funding and get papers published by ‘gatekeeping’ journal editors) or at worst ostracized by labels of ‘denier’ or ‘heretic.’106

But politicized groupthink can bias scientific and social-scientific research in any field that acquires political coloration.

Like-minded academics’ ability to define their own discipline by controlling publication, tenure, and promotions exacerbates groupthink. These practices silence and purge dissenters, and force scientists who wish to be members of a field to give “correct” answers to certain questions. The scientists who remain in the field no longer realize that they are participating in groupthink, because they have excluded any peers who could tell them so.

Dire Consequences

Just the financial consequences of the reproducibility crisis are enormous: a 2015 study estimated that researchers spent around $28 billion annually in the United States alone on irreproducible preclinical research for new drug treatments.107 Drug research inevitably will proceed down some blind alleys—but the money isn’t wasted so long as scientists know they came up with negative results. Yet it is waste, and waste on a massive scale, to spend tens of billions of dollars on research that scientists mistakenly believe produced positive results.

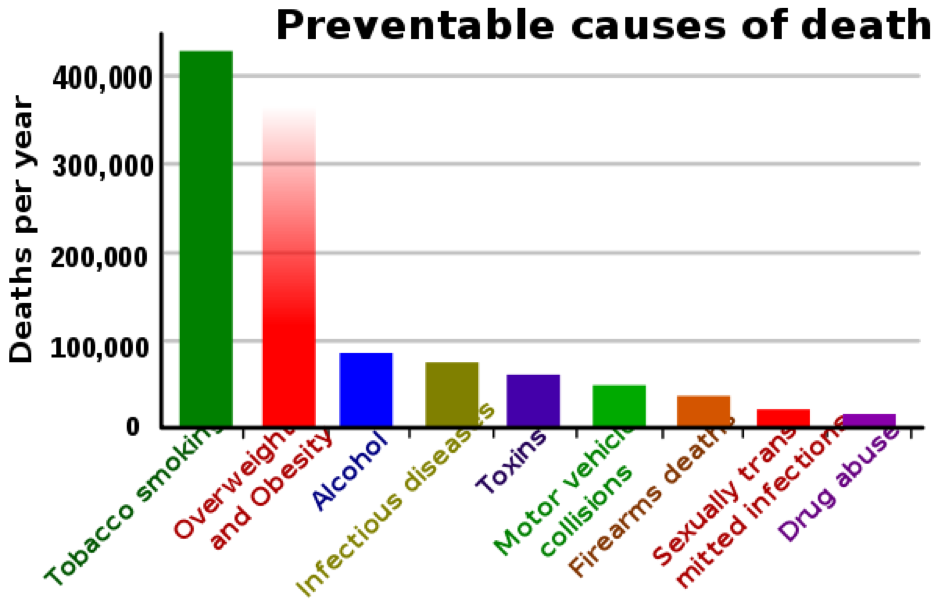

Beyond the dollars and cents, ordinary citizens, policymakers, and scientists make an immense number of harmful decisions on the basis of irreproducible research. Individuals cumulatively waste large amounts of money and time as they practice “power poses” or follow Brian Wansink’s weight-loss advice. The irreproducible research of entire disciplines distorts public policy and public expenditure in areas such as public health, climate science, and marriage and family law. The gravest casualty of all is the authority that science ought to have with the public, but which it begins to forfeit when it no longer produces reliable knowledge.

Modern science must reform itself to redeem its credibility.

What Is To Be Done?

What Has Been Done

Why didn’t Brian Wansink change his lab procedures back in 2005, when John Ioannidis published his seminal articles? Why didn’t all the other Wansinks heed the same warnings? Scientists don’t change how they conduct research overnight, and many still use the same techniques they used a generation ago. Some of their caution was reasonable—research procedures shouldn’t change on a dime. Yet a flood of evidence provides compelling confirmation that modern science must reform. A critical mass of scientists now realizes that research cannot go on in the old way.